HUMAN EAR

AUTHOR: Barry D. Jacobson

E-MAIL: bdj@MIT.EDU

COURSE: HST

CLASS/YEAR: G

MAIN FUNCTIONAL REQUIREMENT: Transduce acoustic energy into electrochemical nerve impulses that can be processed by the brain.

DESIGN PARAMETER: Ear

GEOMETRY/STRUCTURE:

The main structures of the peripheral auditory system are the outer ear, middle ear, and inner ear. The inner ear is connected to the central nervous system via the auditory nerve.

INTRODUCTION:

One of the most amazing functional groups in the body is the auditory system. We often take the gift of hearing for granted and can’t imagine what life would be like without the ability to communicate with others or to enjoy music and all the other sounds in our environment. However, to enable us to hear and interpret those sounds, the auditory system must perform an enormous number of tasks, as we will see. It far surpasses any existing sound reproduction system. No artificial-intelligence-based system built to date can interpret sounds with the accuracy that the auditory system can.

SPECIFICATIONS:

The dynamic range of the auditory system, which is the interval between the softest and loudest sounds that the ear can hear, is more than 120 decibels. The decibel value of a ratio of two powers (or intensities) is 10 times the base-10 logarithm of that ratio. This means that the ear can hear sounds whose intensity lies anywhere within a range of over 12 orders of magnitude. The ear is sensitive enough to detect sounds so weak that the air molecules move less than the diameter of an atom! Yet it can also handle much louder sounds without overloading and saturating ("maxing out"), which would cause undesirable distortion. This is accomplished by means of an automatic gain control (AGC) system, which attenuates the response to louder sounds.
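The relationship between decibels and orders of magnitude can be checked with a few lines of arithmetic. The sketch below is only an illustration; the function names are made up for this example, and it assumes the ratio in question is a ratio of powers or intensities (for pressure amplitudes the multiplier would be 20 rather than 10).

```python
import math


def ratio_to_db(power_ratio: float) -> float:
    """Convert a ratio of two powers (or intensities) to decibels."""
    return 10.0 * math.log10(power_ratio)


def db_to_ratio(decibels: float) -> float:
    """Convert a decibel value back to the underlying power ratio."""
    return 10.0 ** (decibels / 10.0)


# 120 dB is the dynamic range quoted in the text.
dynamic_range_db = 120.0
ratio = db_to_ratio(dynamic_range_db)
orders_of_magnitude = math.log10(ratio)

print(f"{dynamic_range_db:.0f} dB corresponds to a power ratio of {ratio:.0e}")
print(f"That is {orders_of_magnitude:.0f} orders of magnitude")
```

Every additional 10 dB multiplies the intensity by a factor of 10, which is why 120 dB spans 12 orders of magnitude.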

In terms of frequency, the human ear can hear sounds as low as 20 Hz all the way up to 20,000 Hz.

We will also see that there is exquisite temporal synchronization (control of timing) in the auditory system, which makes it possible for the brain to detect slight differences in sound propagation times. Bats use this temporal precision to echolocate their prey. They actually have a fully functional sonar system, which measures the elapsed time between an emitted stimulus and its returned echo to precisely locate a fast-moving object and identify what it is. They can measure the timing of sounds to an accuracy of 10 nanoseconds. This allows them to capture a flying moth in about a tenth of a second, despite the fact that the moth can hear the bat and attempt evasive action. Humans, while not able to echolocate, make use of minute differences in the time of arrival of a sound in one ear as compared to the other in order to gauge its direction, as we will see.
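The sonar principle described above reduces to a simple calculation: the sound travels to the target and back, so the range is half the round-trip distance. The following is a minimal sketch under stated assumptions. The speed of sound (343 m/s, roughly that of air at room temperature) and the 10 millisecond example delay are assumptions chosen for illustration; only the 10 nanosecond timing figure comes from the text.

```python
# Assumed value: speed of sound in air at about 20 deg C.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_range_m(round_trip_s: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance to a target given the delay between a call and its echo.

    The sound covers the distance twice (out and back), hence the factor 1/2.
    """
    return speed * round_trip_s / 2.0


def range_resolution_m(timing_accuracy_s: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Smallest change in range that a given timing accuracy could resolve."""
    return speed * timing_accuracy_s / 2.0


# Hypothetical example: an echo returning 10 ms after the call.
print(f"Target range: {echo_range_m(0.010):.3f} m")

# Timing accuracy quoted in the text: 10 nanoseconds.
print(f"Range resolution: {range_resolution_m(10e-9) * 1e6:.2f} micrometers")
```

Under these assumptions, a timing accuracy of 10 nanoseconds corresponds to a range difference on the order of a couple of micrometers.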

DOMINANT PHYSICS AND EXPLANATION OF HOW IT WORKS/ IS USED:

We will briefly describe the major components of the ear. Many of the details are involved, and there is much that has not yet been worked out by scientists.

The ear has three main parts. They are called the outer, middle, and inner ears.

The outer ear consists of the organ on the side of our heads that we usually call simply "the ear". (The scientifically accurate name for this structure is the pinna.) Also included in the outer ear is the ear canal. This is the hollow tube that leads from the pinna into the head. It terminates in the eardrum, which is technically known as the tympanic membrane. The purpose of the outer ear is to transmit sounds from the outside world into the more internal parts of the auditory system. While one can think of the pinna and ear canal as a simple funnel for collecting sounds, in reality they perform some important functions. The pinna has various ridges and folds that act to reflect and absorb certain frequency components of the sound wave. Because the pinna is not circularly symmetric, sounds which come from different directions will have slightly different spectral characteristics. (This means that certain frequencies will be slightly louder or softer depending on the direction from which they enter the ear.) As a result, sounds which come from above our heads seem slightly different from sounds coming from below. This allows us to localize (pinpoint the direction of) a sound source. We therefore immediately look up when someone calls us from an upper-story window.

The ear canal also plays a role in shaping the spectrum of incoming sounds (emphasizing certain frequencies and attenuating others). It does this in a manner similar to an organ pipe, where certain wavelengths reflect back in a way that causes constructive interference, which strengthens the sound. Other frequencies reflect back in a way that causes destructive interference and are thereby weakened. So the net result is that some signal processing already occurs in the outer ear.
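The organ pipe analogy can be made concrete by treating the ear canal as a uniform tube that is open at the pinna and closed at the eardrum. This is a deliberately crude sketch: the canal length of 2.5 cm and the speed of sound of 343 m/s are assumed values not given in the text, and a real ear canal is neither uniform nor rigidly terminated.

```python
# Assumed values for illustration only.
SPEED_OF_SOUND_M_PER_S = 343.0   # air at roughly room temperature
EAR_CANAL_LENGTH_M = 0.025       # a typical adult figure, taken as an assumption


def closed_tube_resonances_hz(length_m: float, count: int = 3,
                              speed: float = SPEED_OF_SOUND_M_PER_S) -> list[float]:
    """Resonant frequencies of a tube open at one end and closed at the other.

    Such a tube reinforces wavelengths for which the tube length is an odd
    number of quarter wavelengths: f_n = (2n - 1) * c / (4 * L).
    """
    return [(2 * n - 1) * speed / (4.0 * length_m) for n in range(1, count + 1)]


for n, f in enumerate(closed_tube_resonances_hz(EAR_CANAL_LENGTH_M), start=1):
    print(f"Resonance {n}: about {f:.0f} Hz")
```

With these assumed numbers the lowest resonance falls at a few kilohertz, which is the kind of frequency region one would expect the canal to emphasize under this simple model.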

At the inner end of the ear canal is the eardrum, which is a small membrane about 1 cm in diameter. Its purpose is to seal off the delicate organs of the inner parts of the auditory system so that foreign matter and bacteria, which could otherwise clog the system and cause harmful infections, don’t enter. At the same time, it is designed to transmit sound across it efficiently.

Figure 1

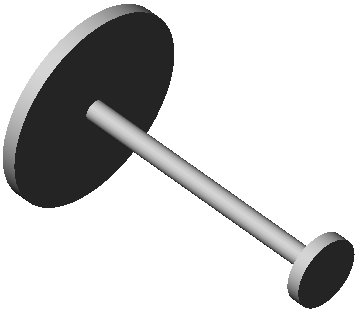

The middle ear begins at the inner surface of the eardrum, which is connected to a chain of three small bones called the ossicles. Their names are the malleus (hammer), incus (anvil), and stapes (stirrup), since they resemble those objects in shape. Their purpose is to act as a mechanical transformer. This is needed because sound waves are vibrations of air molecules, whereas the organ that performs the actual transduction (conversion of acoustic energy into electrochemical impulses) is a fluid-filled bony coil called the cochlea. Because air is much less dense than liquid, and is also more compressible, most of the energy of a sound wave striking the fluid directly would simply be reflected back into the ear canal. A rough analogy would be throwing a rubber ball at the sidewalk. Most of the energy is simply reflected in the bounce of the ball back to the thrower. Very little is transmitted to the massive earth. In order to transmit sound efficiently from air into liquid, a lever system is needed to help move the fluid. The middle bone, the incus, acts as a sort of pivot between the malleus, which is attached to the eardrum, and the stapes, which is attached to the cochlea. The two lever arms have different lengths, giving a mechanical advantage. See figure 1. In addition, the areas of those surfaces of the bones that are in contact with the eardrum and with the cochlea are unequal, as in a hydraulic lift, giving an additional mechanical advantage. This is illustrated in figure 2. In engineering terms, this process is called impedance transformation. It is analogous to the transformers found on indoor TV antennas, which match 300 ohm flat twin-lead TV cable to the 75 ohm coaxial cable that many modern TVs and VCRs accept. Since the electrical characteristics of the two types of cable are different, if one simply tried to solder one type of cable to the other, much of the signal would reflect back and not enter the TV.
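A back-of-the-envelope calculation shows why the transformer is needed and roughly how much it helps. The sketch below rests on several assumptions that do not appear in the text: the characteristic impedances of air and water (the cochlear fluid is treated as water), an eardrum-to-stapes area ratio of 17, and a lever ratio of 1.3. These are round textbook-style figures used only for illustration.

```python
import math

# Assumed characteristic acoustic impedances, in Pa*s/m.
Z_AIR = 415.0
Z_WATER = 1.48e6   # stand-in for the cochlear fluid

# Assumed middle ear geometry (illustrative round numbers).
AREA_RATIO = 17.0    # effective eardrum area / stapes footplate area
LEVER_RATIO = 1.3    # malleus arm length / incus arm length


def power_transmission(z1: float, z2: float) -> float:
    """Fraction of incident power crossing a boundary at normal incidence."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


def middle_ear_pressure_gain(area_ratio: float, lever_ratio: float) -> float:
    """Pressure gain from the hydraulic (area) and lever effects combined."""
    return area_ratio * lever_ratio


t = power_transmission(Z_AIR, Z_WATER)
loss_db = -10.0 * math.log10(t)
gain = middle_ear_pressure_gain(AREA_RATIO, LEVER_RATIO)
gain_db = 20.0 * math.log10(gain)   # 20*log10 because this is a pressure ratio

print(f"Direct air-to-fluid transmission: {t * 100:.2f}% of the power")
print(f"Loss without a transformer: about {loss_db:.0f} dB")
print(f"Pressure gain from area and lever ratios: about {gain_db:.0f} dB")
```

Under these assumptions, the combined gain of the two mechanisms is of the same order as the loss that would occur at a bare air-to-fluid boundary, which is the sense in which the ossicles match the two impedances.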

Figure 2

The inner ear refers to the cochlea, which is a helically shaped bony structure that resembles a snail in appearance. It is the most complicated part of the auditory system, and papers are continually being published in an attempt to elucidate its complex workings. Basically, it has three parallel fluid-filled channels that coil around the axis of the cochlea. If we were to hypothetically unwind the cochlea, it would look something like figure 3. The last of the middle ear bones, the stapes, acts like a piston and pushes on the fluid in the first channel, the scala vestibuli, through an opening in the base of the cochlea called the oval window, setting up a pressure wave. The pressure wave propagates in this fluid, which is called perilymph, toward the end of the cochlea, which is called the apex. At the apex, the scala vestibuli connects through an opening called the helicotrema to the third channel, which is called the scala tympani. The scala tympani acts as a return path for the pressure wave back towards the base of the cochlea. There is a flexible termination at the basal end of this channel called the round window, which bulges in and out with the flow of fluid to allow the wave to flow unimpeded. Otherwise, the incompressible fluid would not have any freedom to cause much motion.

Figure 3

The partition that separates the third channel, the scala tympani, from the middle channel, the scala media, is called the cochlear partition or the basilar membrane. This membrane bounces up and down in response to the pressure wave, like a toy boat floating in a bathtub in response to a push on the bath water. Located on this membrane is a structure known as the organ of Corti, which contains the sound-sensing cells. These cells are called hair cells because they have thin bundles of fibers called stereocilia that protrude from their tops. As the basilar membrane moves up and down, the stereocilia are pushed back and forth at an angle against a small roof that overhangs the organ of Corti called the tectorial membrane.

The insides of the hair cells are normally at a potential of about –40 millivolts with respect to the normal extracellular (outside the cells) potential of the body. This is called their resting potential. They achieve this by pumping positive ions like sodium (Na+) out of the cell, as do many cells in the body, leaving a net negative charge. But the tops of the hair cells are located in the middle channel, the scala media, which contains a special fluid called endolymph that is rich in potassium (K+) and has a potential of about +80 millivolts. This potential is thought to be generated by special cells in a region on the side of the scala media known as the stria vascularis. The net result is effectively a battery whose positive terminal is connected to the outside of the upper surface of the hair cell, and whose negative terminal is inside the hair cell. When the stereocilia move back and forth, small mechanically gated ion channels in the hair cell membrane open, which lets K+ ions flow into the hair cells from the endolymph. They rush in because the strongly negative potential of the hair cells attracts positive ions. This tends to neutralize some of the negative charge and brings the potential up towards zero, a process known as depolarization.

On the bottom and sides of the hair cells there are inputs to the auditory nerve, which are separated from the hair cell by a small gap called a synapse. When depolarization occurs, special voltage-sensitive calcium (Ca2+) channels in the hair cell open, triggered by the voltage rise, and let calcium into the cell. The calcium causes the hair cell to release a quantity of a special chemical called a neurotransmitter that stimulates the auditory nerve to fire. (It does this by forcing the nerve to open up its own ion channels at the synapse, which raises the voltage in the nerve fiber. This triggers still other adjacent voltage-sensitive channels in the fiber to open, which results in a domino effect that causes a wave of depolarization to propagate along the entire nerve.)

This is a brief description of the transduction mechanism. It turns out that there are actually two types of hair cells, the inner hair cells and the outer hair cells. What we have described is the action of the inner hair cells. Outer hair cells are thought to act as amplifiers, not as transducers. They do this by stretching and contracting as the pressure wave passes nearby. This pushes the tectorial membrane up and down with more force than would be achieved by the fluid alone. Recall that the tectorial membrane is the roof over the hair cells against (or in close proximity to) which the stereocilia move.

An important property of the basilar membrane is that each region is tuned to a particular frequency. The basal end is tuned to higher frequencies. That means that the point of maximum vibration for higher frequency sounds is at the base. As a high frequency pressure wave enters, it produces vibration with a maximum amplitude at a point near the base, and quickly dies out as the wave continues inward. Lower frequency sounds continue on inward until their maximum point of vibration, and quickly die out after that point. Still lower frequency sounds produce maximum vibration at points close to the apex. Therefore, the nerve fibers which are located near the base carry the higher frequency components of the sound, while the fibers located near the apex carry the lower frequency components of the sound. In this manner, the cochlea acts as a spectrum analyzer, separating different frequencies of sound from each other. It is thought that the outer hair cells contribute to the sharpness of this tuning, with each cell selectively elongating and contracting only in response to its favorite frequency, and not others. The frequency at which a fiber responds best is called its characteristic frequency (CF).
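The mapping from position along the basilar membrane to characteristic frequency is often summarized with an empirical fit. As a rough sketch only, the function below uses a Greenwood-style formula with constants commonly quoted for the human cochlea. Neither the formula nor the constants come from this article, so they should be treated as assumptions introduced purely to illustrate the base-to-apex ordering described above.

```python
# Assumed constants of a Greenwood-style fit for the human cochlea.
A_HZ = 165.4
SLOPE = 2.1
K = 0.88


def characteristic_frequency_hz(fraction_from_apex: float) -> float:
    """Approximate CF at a position along the basilar membrane.

    fraction_from_apex is 0.0 at the apex and 1.0 at the base.
    """
    if not 0.0 <= fraction_from_apex <= 1.0:
        raise ValueError("position must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10.0 ** (SLOPE * fraction_from_apex) - K)


for position in (0.0, 0.25, 0.5, 0.75, 1.0):
    cf = characteristic_frequency_hz(position)
    print(f"{position:4.2f} of the way from apex to base: about {cf:7.0f} Hz")
```

Under this assumed fit, the apex end comes out near the bottom of the audible range and the base end near the top, with frequency rising roughly exponentially with distance from the apex.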

Space doesn’t permit a detailed description of the processing that occurs in the auditory nerve and its connections in the brain. There are many complex circuits and interconnections between the various auditory centers of the central nervous system. In addition, not only does the ear send impulses to the brain, but the brain sends impulses to the ear, as well. This is an example of feedback. The exact role of such feedback is not fully understood at present. One opinion is that the feedback serves as an AGC system to cut down the gain of loud sounds, thus making them easier to hear and less likely to cause damage. (Overly loud sounds are known to destroy the delicate structures of the auditory system.)

Of necessity, we will give just a short overview of auditory signal processing. Briefly, the louder the sound component at a particular frequency, the more frequently the nerve impulses (spikes) are fired in the fiber whose CF (characteristic frequency) is at that particular frequency. Nerve impulses are thought not to vary in amplitude. They are rather like a digital signal. Either a 1 or 0 is transmitted, but nothing in between. The amplitude of the sound is encoded in the rate of firing. The frequency of a sound is encoded by which particular fiber is responding (known as place coding). However, the auditory nerve has been found to have another interesting property. For low frequency signals, the firing pattern of a fiber is synchronized to the sound waveform. This means that during certain portions of the waveform there is a high probability of firing a spike, and during others, a low probability. This is known as phase locking. The stronger the component, the better it phase locks. The net result is that by plotting the firing pattern using a statistical method called a period histogram, one can observe the waveshape of the sound. So in addition to rate and place coding, there is synchrony coding, as well. It is not fully understood how and why all these coding methods are used.
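Phase locking and the period histogram can be illustrated with a toy simulation. The sketch below is not a model of a real auditory nerve fiber: it simply assumes that the probability of a spike in each small time step is proportional to the positive half of a sine wave, then folds the spike times onto a single cycle of the stimulus and counts them in bins. The stimulus frequency, peak firing rate, duration, and all function names are assumptions chosen for illustration.

```python
import math
import random


def simulate_spike_times(freq_hz, duration_s, peak_rate_hz, rng, dt=1e-5):
    """Generate spike times whose rate follows a half-wave rectified sine."""
    spikes = []
    for i in range(int(duration_s / dt)):
        t = i * dt
        # Firing is likely during the positive half cycle and absent otherwise.
        rate = peak_rate_hz * max(0.0, math.sin(2.0 * math.pi * freq_hz * t))
        if rng.random() < rate * dt:
            spikes.append(t)
    return spikes


def period_histogram(spike_times, freq_hz, n_bins=16):
    """Count spikes by their phase within one cycle of the stimulus."""
    period = 1.0 / freq_hz
    counts = [0] * n_bins
    for t in spike_times:
        phase = (t % period) / period          # 0.0 up to (almost) 1.0
        counts[min(int(phase * n_bins), n_bins - 1)] += 1
    return counts


rng = random.Random(0)                          # fixed seed for repeatability
spikes = simulate_spike_times(freq_hz=500.0, duration_s=2.0,
                              peak_rate_hz=200.0, rng=rng)
histogram = period_histogram(spikes, freq_hz=500.0)

for b, count in enumerate(histogram):
    print(f"bin {b:2d} {'#' * count}")
```

The bars pile up in the bins covering the first half of the cycle, tracing out the shape of the rectified waveform, which is the sense in which the histogram lets one observe the waveshape of the sound.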

One final fascinating phenomenon is the interaction of the two ears in interpreting sound. A big advantage to having two ears is the ability to accurately localize sound. There are two cues that the brain uses in doing this. The first is, as we mentioned earlier, the fact that the time of arrival of sounds in the two ears is slightly different. The closer ear receives the sound slightly earlier. The brain is sensitive to differences in time of arrival as small as 10 microseconds, and can use this to pinpoint the location of the sound. The second cue is the fact that sounds arrive at slightly different amplitudes in the two ears. This is because the head casts an acoustic shadow, which attenuates a sound coming from the opposite side. By comparing the amplitude of the sounds in the two ears, the location of the source can be identified.
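To get a feel for what a 10 microsecond time difference means, one can use the simplest possible geometric model, in which the extra path to the far ear is the ear spacing times the sine of the source angle. This is only a sketch under stated assumptions: the ear spacing of 0.18 m and the speed of sound of 343 m/s are assumed values, and the model ignores the way sound bends around the head.

```python
import math

# Assumed values for illustration only.
SPEED_OF_SOUND_M_PER_S = 343.0
EAR_SPACING_M = 0.18


def interaural_time_difference_s(angle_deg: float) -> float:
    """Arrival time difference for a distant source.

    angle_deg is measured from straight ahead (0) toward one side (90).
    """
    return EAR_SPACING_M * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND_M_PER_S


def angle_for_time_difference_deg(itd_s: float) -> float:
    """Source angle that would produce a given time difference."""
    return math.degrees(math.asin(itd_s * SPEED_OF_SOUND_M_PER_S / EAR_SPACING_M))


max_itd = interaural_time_difference_s(90.0)
print(f"Largest time difference (source directly to one side): {max_itd * 1e6:.0f} microseconds")

# 10 microseconds is the sensitivity quoted in the text.
smallest_angle = angle_for_time_difference_deg(10e-6)
print(f"A 10 microsecond difference corresponds to about {smallest_angle:.1f} degrees")
```

Under these assumptions, the quoted timing sensitivity corresponds to an angular shift of roughly one degree for a source near straight ahead.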

But if this is true, then how do we localize indoors? Sounds bounce numerous times off walls, ceilings, floors and other objects which would totally confuse the brain. It turns out that there is a precedence effect by which the brain only pays attention to the first wavefront that reaches it. All the subsequent echoes are ignored for the purpose of localization.

LIMITING PHYSICS:

The performance of the ear at the low end of its dynamic range is limited by internal noise. All signal processing systems have some internal sources of noise due to random fluctuations in various parameters or components of the system. If a signal is too weak, the auditory system can't separate it from these random variations. The high end of the dynamic range is limited by the physical ability of its components to withstand large forces due to the intense vibrations caused by loud sounds.

The frequency response and temporal (time-related) characteristics of the auditory system are limited by the speed at which various steps of the transduction process can occur. There are various mechanical, electrical and chemical bottlenecks that can potentially slow things. Different species have different limits, and some can hear much higher pitches than humans can. This is due to variation in the sizes and shapes of various structures in the auditory system among different species.

PLOTS/GRAPHS/TABLES:

None Submitted

CONCLUSION:

We have seen a glimpse of the complex processes that occur in the auditory system and the need for further research. Scientists and engineers are continually trying to study and imitate the workings of the auditory system to learn better methods of audio signal processing, and to help those whose hearing is impaired. Recent advances in cochlear implants, whereby special electrodes are surgically inserted into the cochlea to stimulate the auditory nerve electrically, have proven to be a promising treatment for profoundly deaf individuals. Much work in refining these systems is currently in progress at many research centers.

FURTHER READING:

There are many books and articles on hearing. The author's favorite is From Sound to Synapse by C. Daniel Geisler, Oxford 1998.

REFERENCES/MORE INFORMATION:

Web site of the Speech and Hearing Program of the Harvard-MIT Division of Health Sciences and Technology: web7.mit.edu/HSTSHS/www.

Animations of various processes in hearing can be found at: www.neurophys.wisc.edu/animations.

Other animations and further information can be found at the web site of the Association for Research in Otolaryngology: www.aro.org.