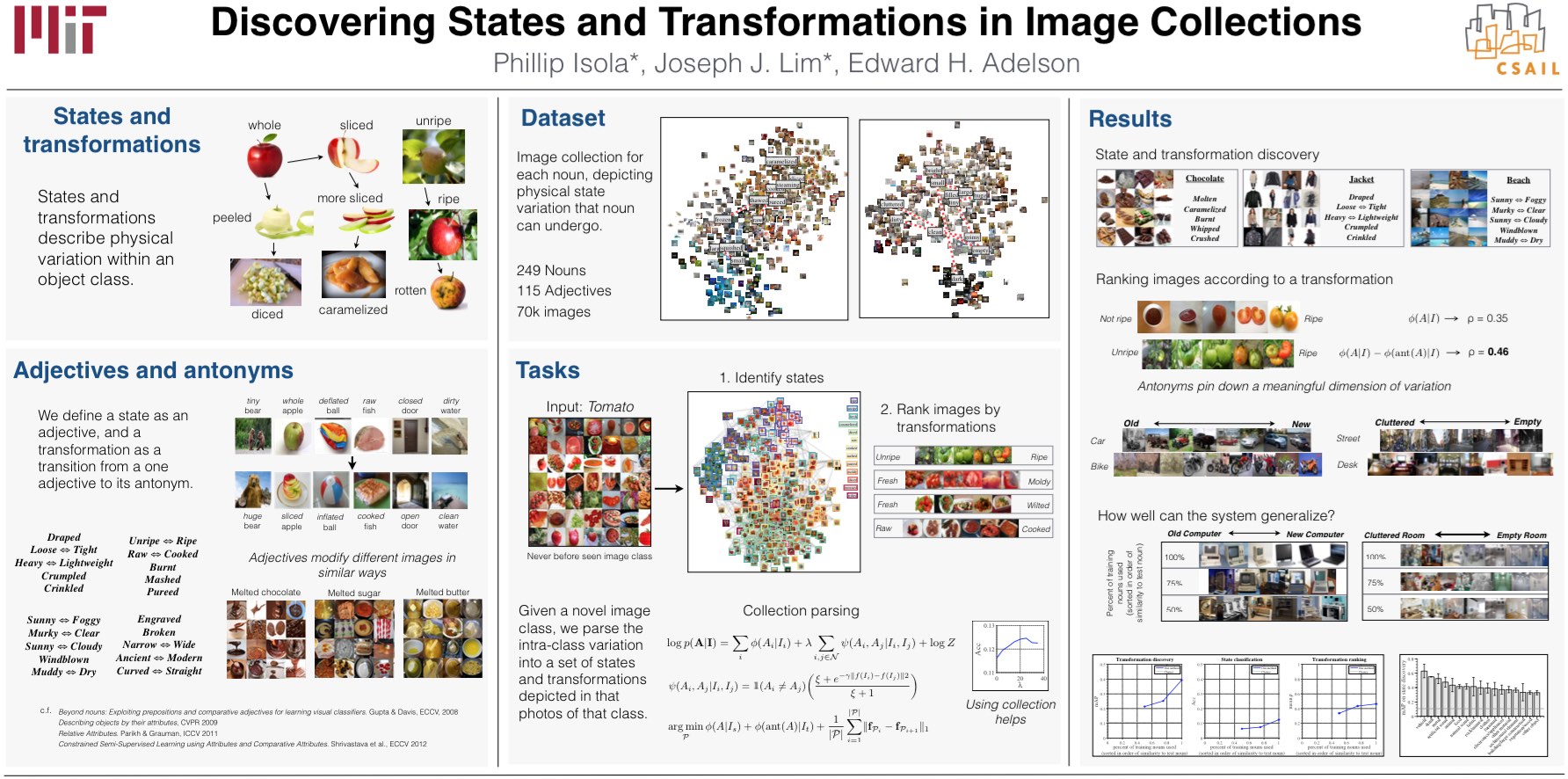

Discovering States and Transformations in Image Collections

Phillip Isola*, Joseph J. Lim*, and Edward H. Adelson (* = equal contribution)

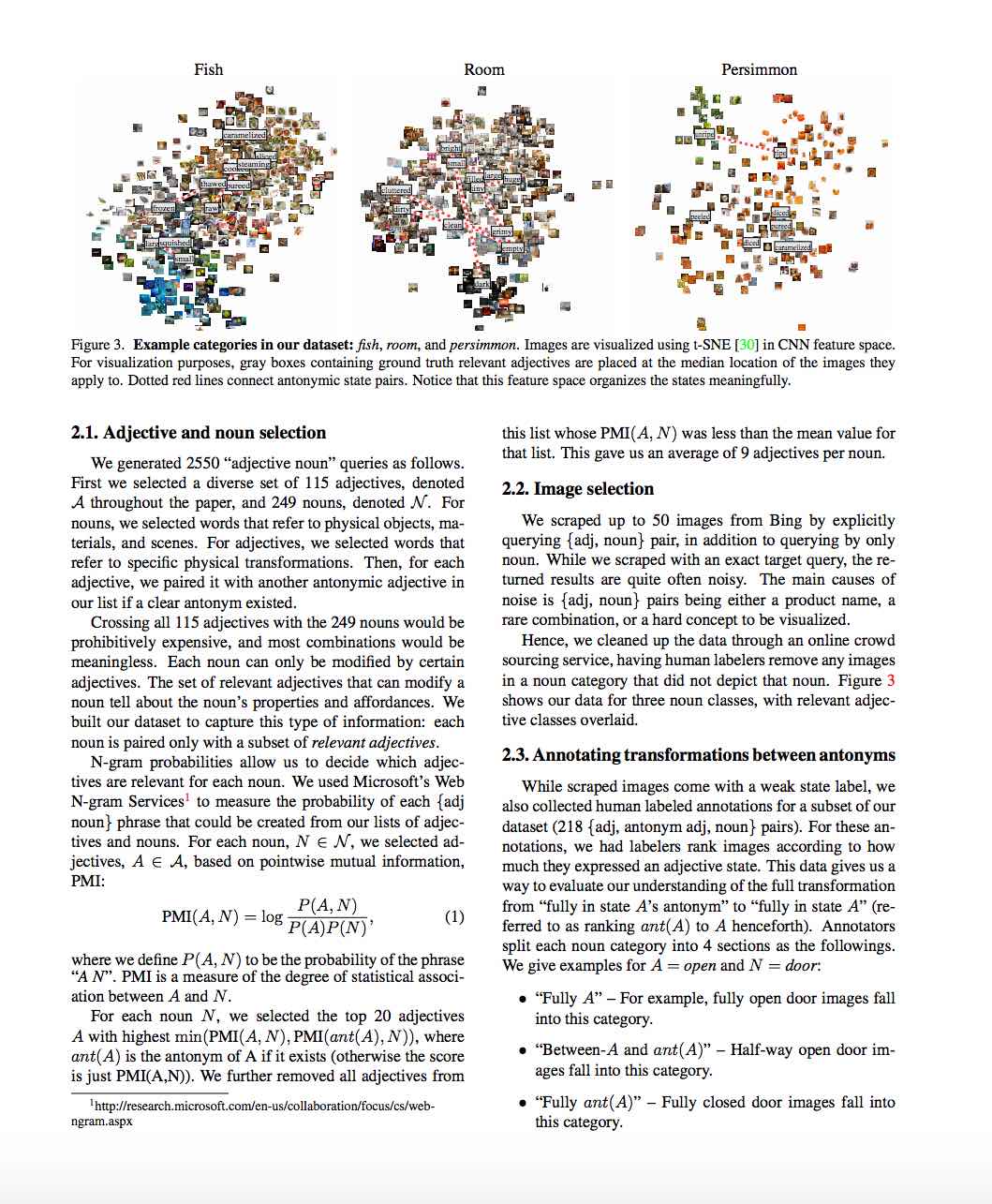

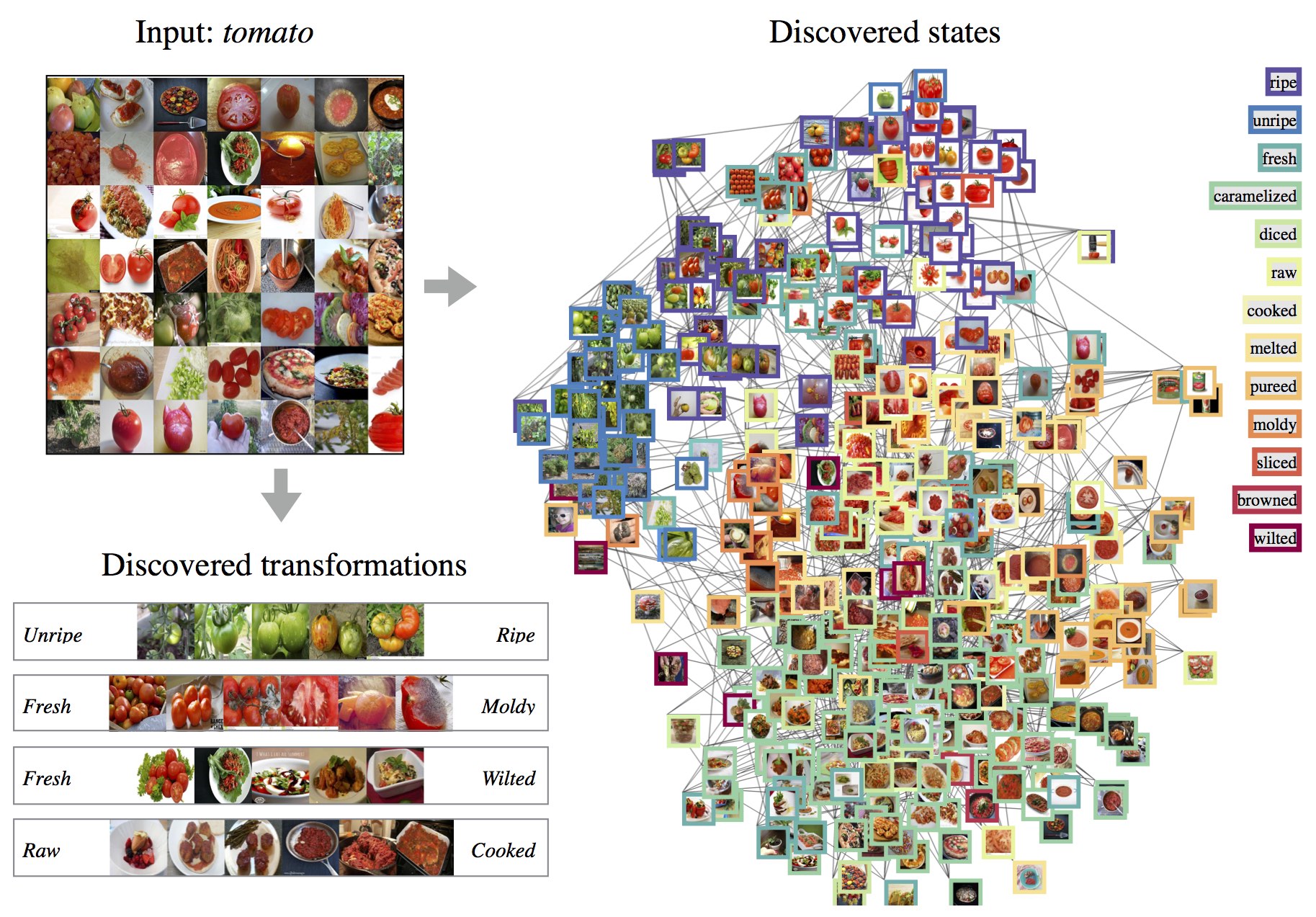

Example input and automatic output of our system: Given a collection of images from one category (top-left, subset of collection shown), we are able to parse the collection into a set of states (right). In addition, we discover how the images transform between antonymic pairs of states (bottom-left).
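To make the caption's two outputs concrete, here is a minimal sketch. It assumes each image already has a score for every candidate adjective. The `scores` dictionary and the function names `group_by_state` and `order_by_transformation` are hypothetical and do not come from the paper. Parsing into states is approximated by assigning each image to its top-scoring adjective. A transformation between an antonymic pair is approximated by sorting images on the difference of the two scores. This illustrates the idea only and is not the authors' method.

```python
from typing import Dict, List, Tuple

# Hypothetical input: scores[image_id][adjective] is a state-classifier score,
# where a higher value means the image more likely shows that state.
Scores = Dict[str, Dict[str, float]]


def group_by_state(scores: Scores, adjectives: List[str]) -> Dict[str, List[str]]:
    """Parse a collection into states by assigning each image to its top-scoring adjective."""
    groups: Dict[str, List[str]] = {adj: [] for adj in adjectives}
    for image_id, s in scores.items():
        best = max(adjectives, key=lambda adj: s[adj])
        groups[best].append(image_id)
    return groups


def order_by_transformation(scores: Scores, adj_a: str, adj_b: str) -> List[Tuple[str, float]]:
    """Sort images from most adj_a-like to most adj_b-like (e.g. unripe -> ripe)."""
    # Signed position along the transformation: negative leans adj_a, positive leans adj_b.
    positions = [(image_id, s[adj_b] - s[adj_a]) for image_id, s in scores.items()]
    return sorted(positions, key=lambda p: p[1])


if __name__ == "__main__":
    toy = {
        "img1": {"unripe": 0.9, "ripe": 0.1},
        "img2": {"unripe": 0.2, "ripe": 0.7},
        "img3": {"unripe": 0.5, "ripe": 0.4},
    }
    print(group_by_state(toy, ["unripe", "ripe"]))
    print([image_id for image_id, _ in order_by_transformation(toy, "unripe", "ripe")])
```

Using a score difference keeps the ordering symmetric: swapping `adj_a` and `adj_b` simply reverses it, which matches the idea of a transformation running in either direction between two antonyms.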

Objects in visual scenes come in a rich variety of transformed states. A few classes of transformation have been heavily studied in computer vision: mostly simple, parametric changes in color and geometry. However, transformations in the physical world occur in many more flavors, and they come with semantic meaning: e.g., bending, folding, aging, etc. The transformations an object can undergo tell us about its physical and functional properties. In this paper, we introduce a dataset of objects, scenes, and materials, each of which is found in a variety of transformed states. Given a novel collection of images, we show how to explain the collection in terms of the states and transformations it depicts. Our system works by generalizing across object classes: states and transformations learned on one set of objects are used to interpret the image collection for an entirely new object class.
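The cross-class generalization can be illustrated with a leave-one-noun-out split. The sketch below assumes precomputed feature vectors and uses a nearest-centroid classifier as a deliberately simple stand-in. The `Example` layout and both functions are hypothetical, and this page does not specify the features or classifiers actually used. The key point is that training skips every example of the held-out noun, so the state labels assigned to that noun's images come entirely from other object classes.

```python
from collections import defaultdict
from math import dist
from typing import Dict, List, Sequence, Tuple

# Hypothetical labeled example: (feature_vector, adjective, noun).
Example = Tuple[Sequence[float], str, str]


def train_state_centroids(examples: List[Example], held_out_noun: str) -> Dict[str, List[float]]:
    """Average the features of each adjective over every noun except the held-out one."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for feat, adj, noun in examples:
        if noun == held_out_noun:
            continue  # the novel object class is never seen during training
        if adj not in sums:
            sums[adj] = [0.0] * len(feat)
        for i, value in enumerate(feat):
            sums[adj][i] += value
        counts[adj] += 1
    return {adj: [v / counts[adj] for v in vec] for adj, vec in sums.items()}


def predict_state(centroids: Dict[str, List[float]], feat: Sequence[float]) -> str:
    """Label a new-class image with the adjective whose centroid is nearest (Euclidean)."""
    return min(centroids, key=lambda adj: dist(centroids[adj], feat))
```

For instance, training with `held_out_noun="shoe"` on examples of old and new cars and houses lets `predict_state` label shoe images as "old" or "new" without having seen a single shoe during training.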


Download dataset

63,440 images depicting 245 nouns modified by a total of 115 adjectives. Each noun is modified only by the adjectives it affords (about 9 per noun).
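Because each noun affords only a subset of the adjectives, the labels form a sparse noun-to-adjective mapping. Here is a minimal sketch of summarizing such labels, assuming every image carries a single (adjective, noun) label. The label format and the `dataset_summary` helper are hypothetical, since the release's file layout is not described here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Set, Tuple


def dataset_summary(labels: Iterable[Tuple[str, str]]) -> dict:
    """Summarize hypothetical per-image (adjective, noun) labels."""
    adjs_per_noun: Dict[str, Set[str]] = defaultdict(set)
    n_images = 0
    for adj, noun in labels:
        adjs_per_noun[noun].add(adj)
        n_images += 1
    if not adjs_per_noun:
        return {"images": 0, "nouns": 0, "adjectives": 0, "mean_adjs_per_noun": 0.0}
    all_adjs = set().union(*adjs_per_noun.values())
    return {
        "images": n_images,
        "nouns": len(adjs_per_noun),
        "adjectives": len(all_adjs),
        # Average number of distinct adjectives each noun affords.
        "mean_adjs_per_noun": mean(len(a) for a in adjs_per_noun.values()),
    }
```

Run over the full label set, `mean_adjs_per_noun` is one way to compute a per-noun figure like the ~9 quoted above.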

Poster

Bibtex

@inproceedings{StatesAndTransformations,

author="Phillip Isola and Joseph J. Lim and Edward H. Adelson",

title="Discovering States and Transformations in Image Collections",

booktitle="CVPR",

year="2015"

}