Scene Collaging: Analysis and Synthesis of Natural Images with Semantic Layers

Phillip Isola and Ce Liu

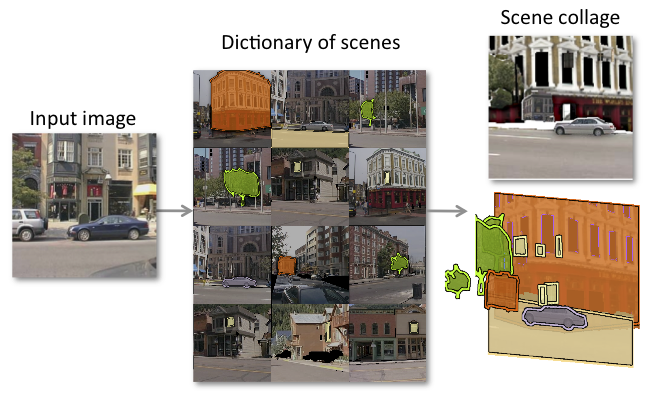

We parse an input image (left) by recombining elements of a labeled dictionary of scenes (middle) to form a collage (right).

Abstract

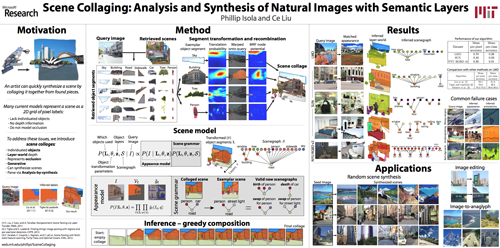

To quickly synthesize complex scenes, digital artists often collage together visual elements from multiple sources: for example, mountains from New Zealand behind a Scottish castle with wisps of Saharan sand in front. In this paper, we propose to use a similar process in order to parse a scene. We model a scene as a collage of warped, layered objects sampled from labeled, reference images. Each object is related to the rest by a set of support constraints. Scene parsing is achieved through analysis-by-synthesis. Starting with a dataset of labeled exemplar scenes, we retrieve a dictionary of candidate object segments that match a query image. We then combine elements of this set into a ``scene collage" that explains the query image. Beyond just assigning object labels to pixels, scene collaging produces a lot more information such as the number of each type of object in the scene, how they support one another, the ordinal depth of each object, and, to some degree, occluded content. We exploit this representation for several applications: image editing, random scene synthesis, and image-to-anaglyph.

Paper

Isola, P. and Liu, C. Scene Collaging: Analysis and Synthesis of Natural Images with Semantic Layers.ICCV, 2013.

Supplemental materials

Poster

Results

Demo of image-to-2.5D

Full results on LabelMe Outdoor Dataset

Bibtex

@inproceedings{SceneCollaging,

author="Phillip Isola and Ce Liu",

title="Scene Collaging: Analysis and Synthesis of Natural Images with Semantic Layers",

booktitle="ICCV",

year="2013"

}