Flexible and Adaptive Spoken Language and Multimodal Interfaces (FASiL)

Michael Cody, Fred Cummins, Eva Maguire, Erin Panttaja, David Reitter, Nathalie Richardet, Stefanie Richter, Wei Zhu

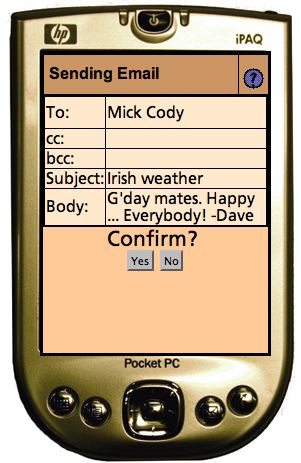

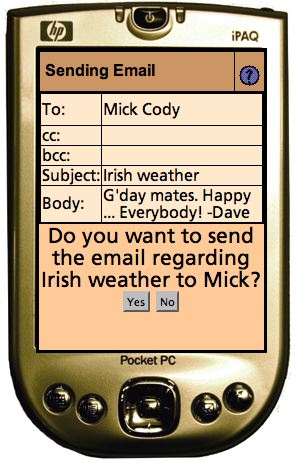

Figure: example voice prompts of differing length, e.g. "Send now?" and "Send the e-mail about Aussie Weather to Mick now?"

Multimodal user interfaces

Imagine a friendly user interface that adapts dynamically and automatically to you and to the situation you are in. Multimodal communication lets users point to objects on a screen with a finger while holding a conversational, natural-language interaction with the machine. Our underlying models account for a variety of such multimodal interactions, and we see them as the basis for the user interfaces of the future. FASiL will produce a mixed-initiative Virtual Personal Assistant that understands natural language and supports multimodal input and output. Empirical studies guide the research.

FASiL is connected to several projects:

Collaborations

In FASiL, eight European partners cooperate on research and development in a two-year project funded by the European Commission. We are proud to collaborate with the following partners: University of Sheffield, Cap Gemini Ernst & Young (Sweden), Portugal Telecom Inovação, ScanSoft (Germany / U.S.), Vox Generation (U.K.), Royal National Institute of the Blind (U.K.), and Royal National Institute for Deaf People (U.K.).

Contact

Contact at Media Lab Europe: David Reitter (reitter at mle.media.mit.edu)