What we do

✨ The SPARK Lab works at the cutting edge of robotics and autonomous systems research for air, space, and ground applications.

The lab develops the algorithmic foundations of robotics through the innovative design, rigorous analysis, and real-world testing of algorithms for single and multi-robot systems.

A major goal of the lab is to enable human-level perception, world understanding, and navigation on mobile platforms (micro aerial vehicles, self-driving vehicles, ground robots, augmented reality devices). Core areas of expertise include nonlinear estimation, numerical and distributed optimization, probabilistic inference, graph theory, and computer vision.

Research highlights 🔬

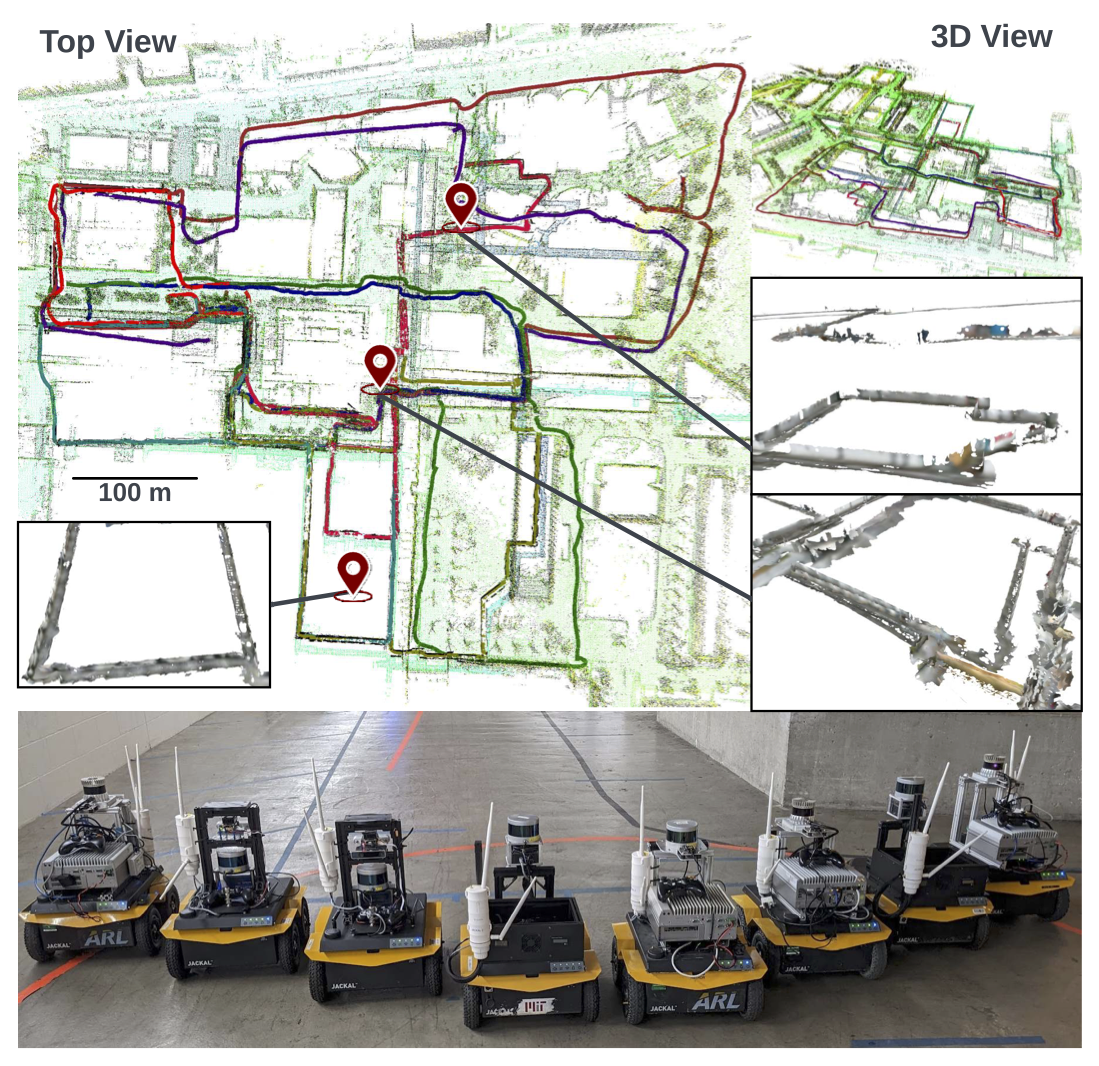

Kimera-Multi: Robust, Distributed, Dense Metric-Semantic SLAM for Multi-Robot Systems

The paper (Tian et al., 2022) presents the first approach for distributed multi-robot dense metric-semantic mapping. In particular, we present Kimera-Multi, a multi-robot system that (i) is robust to spurious loop closures resulting from incorrect place recognition, (ii) is fully distributed and only...


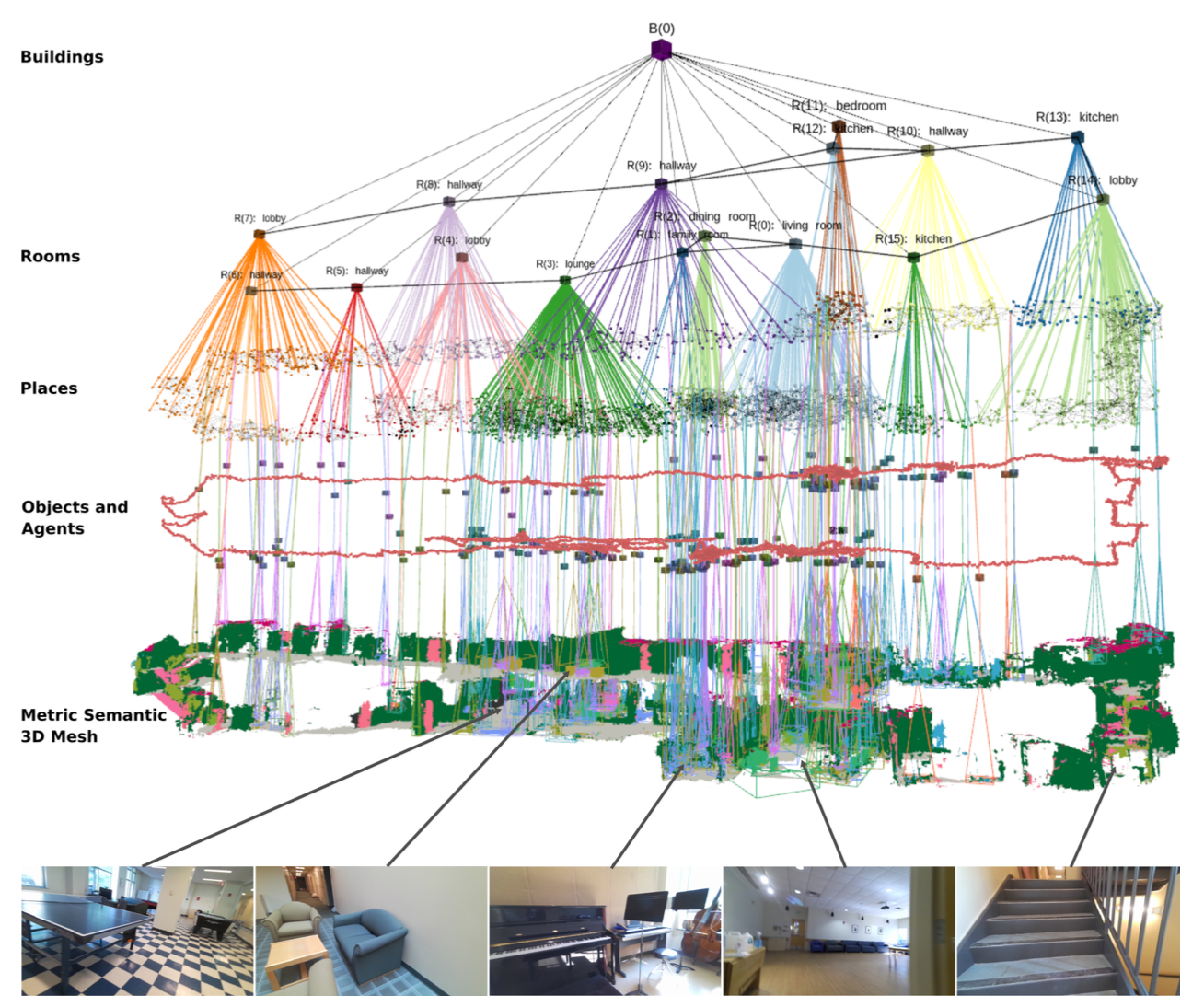

Foundations of Spatial Perception for Robotics: Hierarchical Representations and Real-Time Systems

The paper lays the foundations of hierarchical metric-semantic map representations for robotics. While hierarchical representations have been popular in robotics since its inception, the paper shows that these representations (i) lead to graphs with small treewidth (hence enabling efficient inference), (ii) allow combining...


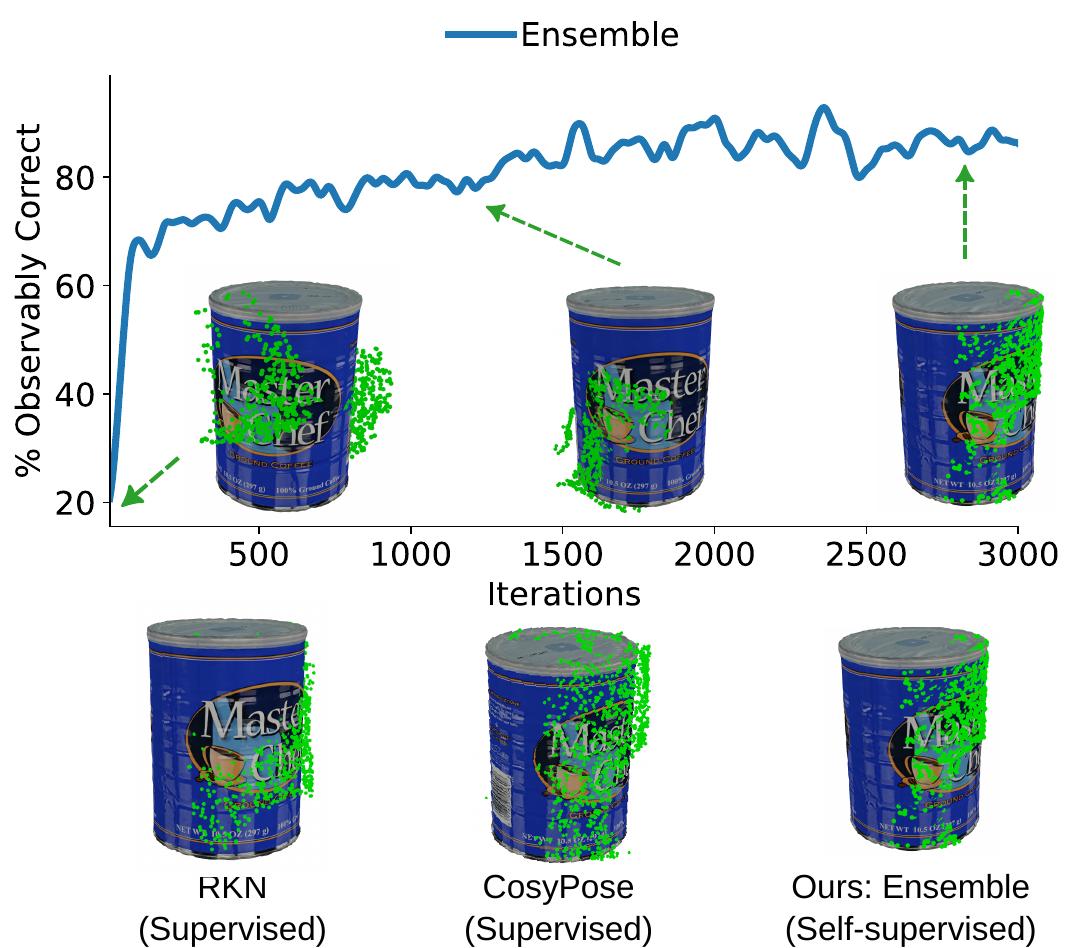

A Correct-and-Certify Approach to Self-Supervise Object Pose Estimators via Ensemble Self-Training

We propose an ensemble self-training architecture that uses a robust corrector to refine the output of each pose estimator, then evaluates the quality of the outputs using observable correctness certificates, and finally uses the observably correct outputs for further training, without requiring external supervision...
