Reading 22: Mutual Exclusion

Software in 6.031

Objectives

Introduction

Recall that race conditions arise when multiple concurrent computations share access (reading and writing) to the same mutable data at the same time. This is unsafe, because the correctness of the program may depend on accidents of timing of the concurrent operations.

There are three general ways to solve the problem:

This reading discusses all three ways, but focuses mostly on the last one, mutual exclusion. We will look at these techniques in several contexts of concurrent computation:

Bank account example

As a running example, let’s use a simple bank account type:

/** Represents a mutable bank account, with an integer balance in dollars. */

interface BankAccount {

/** deposit $1 into the account */

deposit():void;

/** withdraw $1 from the account */

withdraw():void;

/** account balance, an integer number of dollars, possibly negative */

readonly balance: number;

}We will define different implementations of the BankAccount type that may store the account balance in different ways, depending on the context.

As a simulation of concurrent computations accessing the same mutable data, we’ll go back to our cash machine example. A single global bank account will be shared by multiple cash machines. Each cash machine repeatedly deposits a dollar and withdraws a dollar. Here is the (non-concurrent) version of the code:

const account:BankAccount = new BankAccount(200);

// each cash machine runs a bunch of transactions on the single shared account

const NUMBER_OF_TRANSACTIONS = 10;

function cashMachine() {

console.log('started with balance', account.balance)

for (let i = 0; i < NUMBER_OF_TRANSACTIONS; ++i) {

account.deposit(); // put a dollar in

account.withdraw(); // take a dollar out

}

console.log('finished with balance', account.balance);

}

// run a bunch of cash machines

const NUMBER_OF_CASH_MACHINES = 5;

for (let i = 0; i < NUMBER_OF_CASH_MACHINES; ++i) {

cashMachine();

}Since a cash machine withdraws each dollar right after depositing it, we should expect at the end of the day that there was no effect on the account balance – and indeed that’s the case when we run this simple straight-line code, since it has no concurrency at the moment.

Each call to cashMachine() runs to completion before the next call to cashMachine() starts:

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200Asynchronous functions

Let’s start by looking at a fortunately rosy case: asynchronous functions.

Suppose we change cashMachine() into an async function:

async function cashMachine():Promise<void> {

console.log('started with balance', account.balance)

for (let i = 0; i < NUMBER_OF_TRANSACTIONS; ++i) {

account.deposit(); // put a dollar in

account.withdraw(); // take a dollar out

}

console.log('finished with balance', account.balance);

}Here’s what we see when we run it:

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200Nothing actually changed! The code still behaves like the synchronous cashMachine() function did. This is because the body of cashMachine() doesn’t contain any await expression, and await is the only place that an asynchronous function can give up control in the middle of its execution. So each cashMachine() still runs to completion, and returns, before the next cashMachine() is even called.

Let’s make the asynchronous function more interesting.

Suppose the cashMachine() function is simulating a person at a cash machine, doing these transactions.

We’ll put in some random delays to simulate human variability:

async function cashMachine():Promise<void> {

console.log('started with balance', account.balance)

for (let i = 0; i < NUMBER_OF_TRANSACTIONS; ++i) {

await timeout(Math.random()*100);

account.deposit(); // put a dollar in

await timeout(Math.random()*100);

account.withdraw(); // take a dollar out

}

console.log('finished with balance', account.balance);

}Now we see some more interesting execution traces, for example:

started with balance 200

started with balance 200

started with balance 200

started with balance 200

started with balance 200

finished with balance 203

finished with balance 203

finished with balance 201

finished with balance 201

finished with balance 200Some of the balances in this trace are not 200, which means those balances were printed while one or more of the cash machines were in the middle of their deposit/withdrawal action sequence. That’s not necessarily an error. We should expect the account balance to fluctuate while these transactions are being made. The essential correctness question is: at the end of the day, after all the cash machines are done, do we still have $200 in the account? In this trace, that’s true: the last line shows $200 at the end.

So the asynchronous calls to cashMachine() are now interleaving with each other, so the key question becomes: is there a race condition?

Is there a particular interleaving that might leave the account balance at some value different than $200? Or that might throw an exception?

The answer is no, the code is safe from bad interleavings, and the reason is that an async function can only lose control at an await.

JavaScript runs asynchronous functions using only a single thread of control, which has to switch back and forth among the active concurrent computations.

For async functions, those switches happen only when the function reaches an await.

When the function is in between awaits, it has sole control, and it cannot be interrupted or interleaved with another asynchronous function.

For the bank account example, that means each cash machine’s critical deposit() and withdraw() operations never run at the same time as another cash machine’s:

await timeout(Math.random()*100); // <=== might switch to another cash machine here

account.deposit(); // <=== runs without interruption

await timeout(Math.random()*100); // <=== might switch to another cash machine here

account.withdraw(); // <=== runs without interruption… so we never find ourselves in the situation that caused bad interleavings.

Workers

Now let’s look at the bank-account example in the context of Workers, JavaScript’s lightweight process abstraction.

We’ll start each cash machine as a fresh worker:

// run a bunch of cash machines as Workers

const NUMBER_OF_CASH_MACHINES = 5;

for (let i = 0; i < NUMBER_OF_CASH_MACHINES; ++i) {

new Worker('./cash-machine-worker.js');

}where cash-machine-worker.js just does this:

cashMachine();What happens when we run this? Here’s an example trace:

started with balance 200

finished with balance 200

started with balance 200

finished with balance 200

started with balance 200

started with balance 200

started with balance 200

finished with balance 200

finished with balance 200

finished with balance 200There is clearly interleaving happening here; the Workers are indeed running concurrently, and starting and finishing independently. But the balances are suspicious. Why are they always 200? Why don’t we ever see fluctuating balances, like we saw with the asynchronous function using random delays?

To shed light, let’s change our cash machine transactions so that they only deposit, and never withdraw:

account.deposit(); // put a dollar in

// account.withdraw(); // but don't take a dollar out, this timeWe should now expect the account to end the day with $200 plus the total number of $1 deposits that were done, which is the number of cash machines (5) times the number of transactions per machine (10). And that’s what the synchronous (non-concurrent) version of the code now does:

started with balance 200

finished with balance 210

started with balance 210

finished with balance 220

started with balance 220

finished with balance 230

started with balance 230

finished with balance 240

started with balance 240

finished with balance 250But the worker version does something different:

started with balance 200

finished with balance 210

started with balance 200

finished with balance 210

started with balance 200

started with balance 200

started with balance 200

finished with balance 210

finished with balance 210

finished with balance 210Why?

Filesystem bank account

The new Workers we created do not share the bank account object. Mutable objects are confined within the worker where they were created. So there’s no risk of a race condition – but we also aren’t doing what we wanted, which is to have a single bank account that all the cash machines are transacting.

Workers are more suited to message-passing concurrency, so message-passing would be a more appropriate way for them to use the BankAccount type, as we will see in the next reading.

But to continue to explore the issues with shared-memory concurrency, let’s change our bank account so that it uses mutable data that is shared by workers, and other processes as well: the filesystem.

Here is an implementation of BankAccount that keeps the account balance in a text file, so that different workers can share access to the file.

The type has internal operations for reading and writing the file:

private writeBalance(balance:number):void {

fs.writeFileSync(this.filename, balance.toString());

}

private readBalance():number {

const fileData = fs.readFileSync(this.filename).toString();

return parseInt(fileData);

}And then the deposit, withdraw, and balance operations use these read and write methods:

public deposit():void {

let balance:number = this.readBalance();

balance = balance + 1;

this.writeBalance(balance);

}

public withdraw():void {

let balance:number = this.readBalance();

balance = balance - 1;

this.writeBalance(balance);

}

public get balance():number {

return this.readBalance();

}Using the synchronous (non-concurrent) cash machine code that makes deposits only, this file-backed account works as expected, increasing the account by a total of $50:

started with balance 200

finished with balance 210

started with balance 210

finished with balance 220

started with balance 220

finished with balance 230

started with balance 230

finished with balance 240

started with balance 240

finished with balance 250But the worker version of the code does not work as hoped; it usually finishes with something less than $250 in the account:

started with balance 200

started with balance 200

started with balance 201

started with balance 203

started with balance 201

finished with balance 203

finished with balance 210

finished with balance 216

finished with balance 218

finished with balance 219The workers are interleaving, money is disappearing from the account, and we get a different answer every time we run it. Now we have a race condition.

Asynchronous methods

It turns out we can experience this race condition in the asynchronous-function version of our code as well, if deposit and withdraw are asynchronous methods.

The file-manipulation code above used readFileSync and writeFileSync, which are synchronous functions.

But in the asynchronous-function model, we should write this code using the asynchronous versions of these functions, which return promises:

private async writeBalance(balance:number):Promise<void> {

return fs.writeFile(this.filename, balance.toString());

}

private async readBalance():Promise<number> {

const fileData = ( await fs.readFile(this.filename) ).toString();

return parseInt(fileData);

}That means the methods of the bank account also must become asynchronous:

public async deposit():Promise<void> {

let balance:number = await this.readBalance();

balance = balance + 1;

await this.writeBalance(balance);

}

public async withdraw():Promise<void> {

let balance:number = await this.readBalance();

balance = balance - 1;

await this.writeBalance(balance);

}

public get balance():Promise<number> {

return this.readBalance();

}Now we have the potential for a race condition, because the critical mutator methods deposit and withdraw contain await expressions where they might lose control, and interleave with other deposits or withdrawals. And indeed, if we run the code to deposit a total of $50 from 5 different asynchronous cashMachine() functions, we see the same kind of bad behavior that the worker-version was seeing, for example:

started with balance 200

started with balance 200

started with balance 200

started with balance 200

started with balance 200

finished with balance 212

finished with balance 212

finished with balance 212

finished with balance 212

finished with balance 213The asynchronous filesystem bank account suffers from a race condition.

Mutual exclusion with locks

The correctness of a concurrent program should not depend on accidents of timing.

Since race conditions caused by concurrent manipulation of shared mutable data are disastrous bugs — hard to discover, hard to reproduce, hard to debug — we need a way for concurrent modules that share mutable data to synchronize with each other.

Locks are one synchronization technique. A lock is an abstraction that allows at most one concurrent computation (such as a thread, or process, or worker, or asynchronous function) to own it at a time. Holding a lock is how one thread informs other threads: “I’m working with this thing, don’t touch it right now.”

acquireallows a thread to take ownership of a lock. If a thread tries to acquire a lock currently owned by another thread, it blocks until the other thread releases the lock. At that point, it will contend with any other threads that are trying to acquire the lock. At most one thread can own the lock at a time.releaserelinquishes ownership of the lock, allowing another thread to take ownership of it.

Blocking means, in general, that a thread waits (without doing further work) until an event occurs.

The await operator blocks waiting for a promise to fulfill.

Synchronous I/O operations like readFileSync() and writeFileSync() block until the file operation is done.

An acquire(l) on thread 1 will block if another thread (say thread 2) is holding lock l.

The event it waits for is thread 2 performing release(l).

At that point, if thread 1 can acquire l, it continues running its code, with ownership of the lock.

It is possible that another thread (say thread 3) was also blocked on acquire(l).

If so, either thread 1 or 3 (the winner is nondeterministic) will take the lock l and continue.

The other will continue to block, waiting for release(l) again.

Bank account example

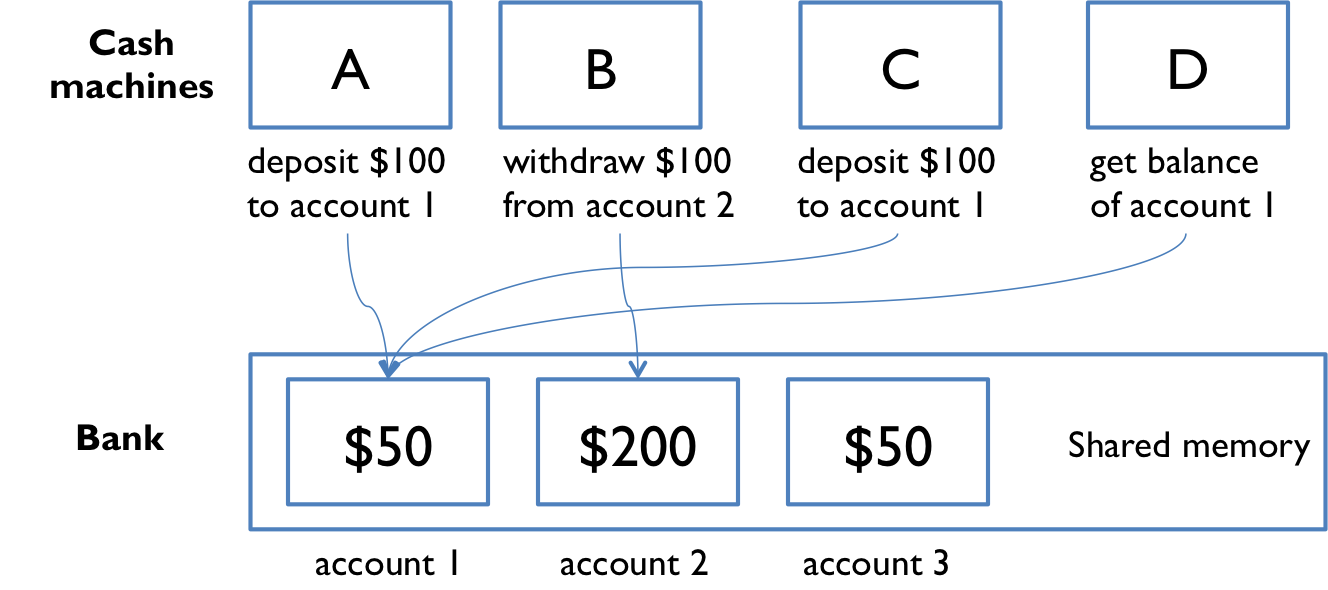

Our first example of shared memory concurrency was a bank with cash machines. The diagram from that example is on the right.

The bank has several cash machines, all of which can read and write the same account objects in memory.

Of course, without any coordination between concurrent reads and writes to the account balances, things went horribly wrong.

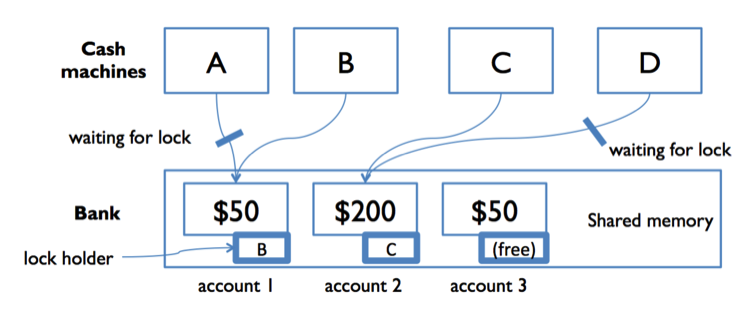

To solve this problem using locks, we can add a lock that protects each bank account. Now, before they can access or update an account balance, cash machines must first acquire the lock on that account.

In the diagram to the right, both A and B are trying to access account 1. Suppose B acquires the lock first. Then A must wait to read or write the balance until B finishes and releases the lock. This ensures that A and B are synchronized, but another cash machine C is able to run independently on a different account (because that account is protected by a different lock).

An important thing to understand about locking in general is that it’s a convention — a protocol for good behavior with a shared memory object. All participating threads with access to the same shared memory object have to carefully acquire and release the appropriate lock. If a badly-written client fails to acquire or release the right lock, then the system isn’t actually threadsafe.

Deadlock

When used properly and carefully, locks can prevent race conditions.

But then another problem rears its ugly head.

Because the use of locks requires threads to wait (acquire blocks when another thread is holding the lock), it’s possible to get into a situation where two threads are waiting for each other — and hence neither can make progress.

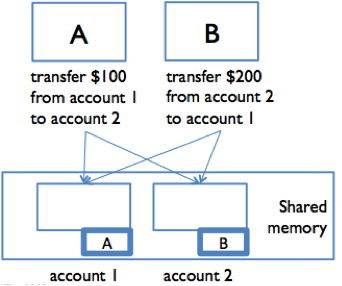

In the figure to the right, suppose A and B are making simultaneous transfers between two accounts in our bank.

A transfer between accounts needs to lock both accounts, so that money can’t disappear from the system. A and B each acquire the lock on their respective “from” account first: A acquires the lock on account 1, and B acquires the lock on account 2. Now, each must acquire the lock on their “to” account: so A is waiting for B to release the account 2 lock, and B is waiting for A to release the account 1 lock. Stalemate! A and B are frozen in a “deadly embrace,” and accounts are locked up.

Deadlock occurs when concurrent modules are stuck waiting for each other to do something. A deadlock may involve more than two modules, e.g., A may be waiting for B, which is waiting for C, which is waiting for A. None of them can make progress. The essential feature of deadlock is a cycle of dependencies like this.

You can also have deadlock without using any locks. For example, a message-passing system can experience deadlock when message buffers fill up. If a client fills up the server’s buffer with requests, and then blocks waiting to add another request, the server may then fill up the client’s buffer with results and then block itself. So the client is waiting for the server, and the server waiting for the client, and neither can make progress until the other one does. Again, deadlock ensues.

Locks in Java

Locks are so commonly-used in Java that they are a built-in language feature.

In Java, every object has a lock implicitly associated with it — a String, an array, an ArrayList, and every class you create, all of their object instances have a lock.

Even a humble Object has a lock, so bare Objects are often used for explicit locking:

Object lock = new Object();You can’t call acquire and release on Java’s intrinsic locks, however.

Instead you use the synchronized statement to acquire the lock for the duration of a statement block:

synchronized (lock) { // thread blocks here until lock is free

// now this thread has the lock

balance = balance + 1;

} // exiting the block releases the lockSynchronized regions like this provide mutual exclusion: only one thread at a time can be in a synchronized region guarded by a given object’s lock. In other words, you are back in sequential programming world, with only one thread running at a time, at least with respect to other synchronized regions that refer to the same object.

Locks guard access to data

Locks are used to guard a shared data variable, like the account balance shown here. If all accesses to a data variable are guarded (surrounded by a synchronized block) by the same lock object, then those accesses will be guaranteed to be atomic — uninterrupted by other threads.

Locks only provide mutual exclusion with other threads that acquire the same lock. All accesses to a data variable must be guarded by the same lock. You might guard an entire collection of variables behind a single lock, but all modules must agree on which lock they will all acquire and release.

Because every object in Java has a lock implicitly associated with it, you might think that owning an object’s lock would automatically prevent other threads from accessing that object.

That is not the case.

When a thread t acquires an object’s lock using synchronized (obj) { ... }, it does one thing and one thing only: it prevents other threads from entering their own synchronized(expression) block, where expression refers to the same object as obj, until thread t finishes its synchronized block.

That’s it.

Even while t is in its synchronized block, another thread can dangerously mutate the object, simply by neglecting to use synchronized itself.

In order to use an object lock for synchronization, you have to explicitly and carefully guard every such access with an appropriate synchronized block or method keyword.

Monitor pattern in Java

When you are writing methods of a class, the most convenient lock is the object instance itself, i.e. this.

As a simple approach, we can guard the entire rep of a class by wrapping all accesses to the rep inside synchronized (this).

/** Represents a mutable bank account, with an integer balance in dollars. Safe for concurrency. */

public class SimpleAccount implements BankAccount {

private int balance;

public SimpleAccount (int balance) {

synchronized (this) {

this.balance = balance;

}

}

public void deposit() {

synchronized (this) {

this.balance += 1;

}

}

public void withdraw() {

synchronized (this) {

this.balance -= 1;

}

}

public int getBalance() {

synchronized (this) {

return this.balance;

}

}

}

Note the very careful discipline here.

Every method is guarded with the lock — even apparently small and trivial ones like getBalance().

This is because reads must be guarded as well as writes — if reads are left unguarded, then they may be able to see the rep in a partially-modified state.

This approach is called the monitor pattern. A monitor is a class whose methods are mutually exclusive, so that only one thread can be inside an instance of the class at a time.

Java provides some syntactic sugar that helps with the monitor pattern.

If you add the keyword synchronized to a method signature, then Java will act as if you wrote synchronized (this) around the method body.

So the code below is an equivalent way to implement the synchronized SimpleAccount:

/** Represents a mutable bank account, with an integer balance in dollars. Safe for concurrency. */

public class SimpleAccount implements BankAccount {

private int balance;

public SimpleAccount (int balance) {

this.balance = balance;

}

public synchronized void deposit() {

this.balance += 1;

}

public synchronized void withdraw() {

this.balance -= 1;

}

public synchronized int getBalance() {

return this.balance;

}

}

Notice that the SimpleAccount constructor doesn’t have a synchronized keyword.

Java actually forbids it, syntactically, because an object under construction is expected to be confined to a single thread until it has returned from its constructor.

So synchronizing constructors should be unnecessary.

You can still synchronize a constructor by wrapping its body in a synchronized(this) block, if you wish.

reading exercises

Locks in TypeScript

Let’s look at how our filesystem bank account can use a lock to protect itself from concurrent access.

Locks are not built into the JavaScript library or runtime system like they are built into Java, so let’s use a third-party package, await-lock.

This package provides a type AwaitLock with the usual two operations, acquire and release.

The acquire operation happens to be called acquireAsync() to remind programmers that it is an asynchronous method, returning a promise that must be awaited before the lock is fully acquired.

To use this lock type in the monitor pattern, the bank account class first needs to create a lock for itself, and store it as a private instance variable:

private lock: AwaitLock = new AwaitLock();Then every public method of the bank account acquires and releases the lock:

public async deposit():Promise<void> {

await this.lock.acquireAsync();

try {

let balance:number = await this.readBalance();

balance = balance + 1;

await this.writeBalance(balance);

} finally {

this.lock.release();

}

}

public async withdraw():Promise<void> {

await this.lock.acquireAsync();

try {

let balance:number = await this.readBalance();

balance = balance - 1;

await this.writeBalance(balance);

} finally {

this.lock.release();

}

}Locking discipline

A locking discipline is a strategy for ensuring that code using locks is safe for concurrency. We must satisfy two conditions:

If an invariant involves multiple pieces of shared mutable data (which might even be in different objects), then all the data involved must be guarded by the same lock. Once the lock has been acquired in order to mutate the data, the invariant must be reestablished before releasing the lock.

The monitor pattern as used here satisfies both rules. All the shared mutable data in the rep — which the rep invariant depends on — are guarded by the same lock.

Deadlock rears its ugly head

The locking approach to solving concurrency problems is powerful, but it introduces additional blocking into the program. Threads must sometimes wait for other threads to release locks before they can proceed. And that raises the possibility of deadlock.

As we saw above, deadlock happens when threads acquire multiple locks at the same time, and two threads end up blocked while holding locks that they are each waiting for the other to release. The monitor pattern unfortunately makes this fairly easy to do.

Here’s an example, which we’ll look at in Java because it can express the monitor pattern more concisely. Suppose we’re modeling the social network of a series of books:

public class Wizard {

private final String name;

private final Set<Wizard> friends;

// Rep invariant:

// friend links are bidirectional:

// for all f in friends, f.friends contains this

// Concurrency argument:

// threadsafe by monitor pattern: all accesses to rep

// are guarded by this object's lock

public Wizard(String name) {

this.name = name;

this.friends = new HashSet<Wizard>();

}

public synchronized boolean isFriendsWith(Wizard that) {

return this.friends.contains(that);

}

public synchronized void friend(Wizard that) {

if (friends.add(that)) {

that.friend(this);

}

}

public synchronized void defriend(Wizard that) {

if (friends.remove(that)) {

that.defriend(this);

}

}

}Let’s create a couple of wizards:

Wizard harry = new Wizard("Harry Potter");

Wizard snape = new Wizard("Severus Snape");And then think about what happens when two independent threads are repeatedly running:

// thread A // thread B

while (true) { while (true) {

harry.friend(snape); snape.friend(harry);

harry.defriend(snape); snape.defriend(harry);

} }We will deadlock very rapidly.

Here’s why.

Suppose thread A is about to execute harry.friend(snape), and thread B is about to execute snape.friend(harry).

- Thread A acquires the lock on

harry(because the friend method is synchronized). - Then thread B acquires the lock on

snape(for the same reason). - They both update their individual reps independently, and then try to call

friend()on the other object — which requires them to acquire the lock on the other object.

So A is holding Harry and waiting for Snape, and B is holding Snape and waiting for Harry.

Both threads are stuck in friend(), so neither one will ever manage to exit the synchronized region and release the lock to the other.

This is a classic deadly embrace.

The program simply stops.

The essence of the problem is acquiring multiple locks, and holding some of the locks while waiting for another lock to become free.

Notice that it is possible for thread A and thread B to interleave such that deadlock does not occur: perhaps thread A acquires and releases both locks before thread B has enough time to acquire the first one. If the locks involved in a deadlock are also involved in a race condition — and very often they are — then the deadlock will be just as difficult to reproduce or debug.

Deadlock solution 1: lock ordering

One way to prevent deadlock is to put an ordering on the locks that need to be acquired simultaneously, and ensuring that all code acquires the locks in that order.

Here’s what the code might look like:

public void friend(Wizard that) {

Wizard first, second;

if (this.name.compareTo(that.name) < 0) {

first = this; second = that;

} else {

first = that; second = this;

}

synchronized (first) {

synchronized (second) {

if (friends.add(that)) {

that.friend(this);

}

}

}

}(Note that the decision to order the locks alphabetically by the person’s name would work fine for the situation of this Harry Potter book, but it wouldn’t work in a real life social network. Why not? What would be better to use for lock ordering than the name?)

Although lock ordering is useful (particularly in code like operating system kernels), it has a number of drawbacks in practice.

- First, it’s not modular — the code has to know about all the locks in the system, or at least in its subsystem.

- Second, it may be difficult or impossible for the code to know exactly which of those locks it will need before it even acquires the first one. It may need to do some computation to figure it out. Think about doing a depth-first search on the social network graph, for example — how would you know which nodes need to be locked, before you’ve even started looking for them?

Deadlock solution 2: coarse-grained locking

A more common approach than lock ordering, particularly for application programming (as opposed to operating system or device driver programming), is to use coarser locking — use a single lock to guard many object instances, or even a whole subsystem of a program.

For example, we might have a single lock for an entire social network, and have all the operations on any of its constituent parts synchronize on that lock.

In the code below, all Wizards belong to a Castle, and we just use that Castle object’s lock to synchronize:

public class Wizard {

private final Castle castle;

private final String name;

private final Set<Wizard> friends;

...

public void friend(Wizard that) {

synchronized (castle) {

if (this.friends.add(that)) {

that.friend(this);

}

}

}

}Coarse-grained locks can have a significant performance penalty. If you guard a large pile of mutable data with a single lock, then you’re giving up the ability to access any of that data concurrently. In the worst case, having a single lock protecting everything, your program might be essentially sequential — only one thread is allowed to make progress at a time.

reading exercises

In the code below three threads 1, 2, and 3 are trying to acquire locks on objects alpha, beta, and gamma.

This system is susceptible to deadlock.

For each of the scenarios below, determine whether the system is in deadlock if the threads are currently on the indicated lines of code.

Scenario A

Thread 1 inside using alpha

Thread 2 blocked on synchronized (alpha)

Thread 3 finished

(missing explanation)

Scenario B

Thread 1 finished

Thread 2 blocked on synchronized (beta)

Thread 3 blocked on 2nd synchronized (gamma)

(missing explanation)

Thread 1 running synchronized (beta)

Thread 2 blocked on synchronized (gamma)

Thread 3 blocked on 1st synchronized (gamma)

(missing explanation)

Thread 1 blocked on synchronized (beta)

Thread 2 finished

Thread 3 blocked on 2nd synchronized (gamma)

(missing explanation)

Concurrency in practice

Goals

Now is a good time to pop up a level and look at what we’re doing. Recall that our primary goals are to create software that is safe from bugs, easy to understand, and ready for change.

Building concurrent software is clearly a challenge for all three of these goals. We can break the issues into two general classes. When we ask whether a concurrent program is safe from bugs, we care about two properties:

Safety. Does the concurrent program satisfy its invariants and its specifications? Races in accessing mutable data threaten safety. Safety asks the question: can you prove that some bad thing never happens?

Liveness. Does the program keep running and eventually do what you want, or does it get stuck somewhere waiting forever for events that will never happen? Can you prove that some good thing eventually happens?

Deadlocks threaten liveness. Liveness may also require fairness, which means that concurrent modules are given processing capacity to make progress on their computations. Fairness is mostly a matter for the operating system’s thread scheduler, but you can influence it (for good or for ill) by setting thread priorities.

Strategies

What strategies are typically followed in real programs?

Library data structures either use no synchronization (to offer high performance to single-threaded clients, while leaving it to multithreaded clients to add locking on top) or the monitor pattern.

Mutable data structures with many parts typically use either coarse-grained locking or thread confinement. Most graphical user interface toolkits follow one of these approaches, because a graphical user interface is basically a big mutable tree of mutable objects. Java Swing, the graphical user interface toolkit, uses thread confinement. Only a single dedicated thread is allowed to access Swing’s tree. Other threads have to pass messages to that dedicated thread in order to access the tree.

Search often uses immutable datatypes. Our Boolean formula satisfiability search would be easy to make multithreaded, because all the datatypes involved were immutable. There would be no risk of either races or deadlocks.

Operating systems often use fine-grained locks in order to get high performance, and use lock ordering to deal with deadlock problems.

We’ve omitted one important approach to mutable shared data because it’s outside the scope of this course, but it’s worth mentioning: a database. Database systems are widely used for distributed client/server systems like web applications. Databases avoid race conditions using transactions, which are similar to synchronized regions in that their effects are atomic, but they don’t have to acquire locks, though a transaction may fail and be rolled back if it turns out that a race occurred. Databases can also manage locks, and handle locking order automatically.

Summary

Producing a concurrent program that is safe from bugs, easy to understand, and ready for change requires careful thinking. Heisenbugs will skitter away as soon as you try to pin them down, so debugging simply isn’t an effective way to achieve correct threadsafe code. And threads can interleave their operations in so many different ways that you will never be able to test even a small fraction of all possible executions.

Acquiring a lock allows a thread to have exclusive access to the data guarded by that lock, forcing other threads to block — as long as those threads are also trying to acquire that same lock.

The monitor pattern guards the rep of a datatype with a single lock that is acquired by every method.

Blocking caused by acquiring multiple locks creates the possibility of deadlock.