Laboratory for Computer Science

The principal goal of the Laboratory for Computer Science (LCS) is to conduct research on all aspects of computer science (CS) and information technology (IT) and to achieve societal impact with our research results. Founded in 1963 under the name Project MAC, it was renamed the Laboratory for Computer Science in 1974. Over the last four decades, LCS members and alumni have been instrumental in the development of many CS and IT innovations, including time-shared computing, ARPANet, the internet, the Ethernet, the World Wide Web, Rivest, Shamir, and Adleman public-key encryption, and many more.

As an interdepartmental laboratory in the School of Engineering at MIT, LCS brings together faculty, researchers, and students in a broad program of study, research, and experimentation. It is organized into 24 research groups, an administrative unit, and a computer service support unit. The laboratory's membership comprises just over 500 people, including 105 faculty and research staff, 288 graduate students, 47 undergraduate students, 50 visitors, affiliates, and postdoctoral associates and fellows, and 33 support and administrative staff. The academic affiliation of most of the LCS faculty and students is with the Department of Electrical Engineering and Computer Science. Our research is sponsored by the US government—primarily the Defense Advanced Research Projects Agency and the National Science Foundation—and by many industrial sources, including the Nippon Telegraph and Telephone Corporation (NTT) and the Oxygen Alliance. Since 1994, LCS has been the principal host of the World Wide Web Consortium (W3C) of nearly 500 organizations that helps set the standard of a continuously evolving World Wide Web.

The laboratory's current research falls into four principal categories: computer systems, theory of computation, human-computer interactions, and computer science and biology.

In the area of computer systems, we wish to understand principles and develop technologies for the architecture and use of highly scaleable information infrastructures that interconnect human-operated and autonomous computers. This area encompasses research in networks, architecture, and software. Research in networks and systems increasingly addresses issues in connection with mobile and context-aware networking and the development of high-performance, practical software systems for parallel and distributed environments. We are creating architectural innovations by directly compiling applications onto programmable hardware, by providing software-controlled architectures for low energy, through better cache management, and through easier hardware design and verification. Software research is directed toward improving the performance, reliability, availability, and security of computer software by improving the methods used to create it.

In the area of the theory of computation, we study the theoretical underpinnings of computer science and information technology, including algorithms, cryptography and information security, complexity theory, distributed systems, and supercomputing technology. As a result, theoretical work permeates our research efforts in other areas. The laboratory expends a great deal of effort in theoretical computer science because its impact upon our world is expected to continue its past record of improving our understanding and pursuit of new frontiers with new models, concepts, methods, and algorithms.

In the human-computer interactions area, our technical goals are to understand and construct programs and machines that have greater and more useful sensory and cognitive capabilities so that they may communicate with people toward useful ends. The two principal areas of our focus are spoken dialogue systems between people and machines and graphics systems used predominantly for output. We are also exploring the role computer science can play in facilitating better patient-centered health care delivery.

In the computer science and biology area, we are interested in exploring opportunities at the boundary of biology and computer science. On the one hand, we want to investigate how computer science can contribute to modern-day biology research, especially with respect to the human genome. On the other hand, we are also interested in applying biological principles to the development of next-generation computers.

Highlights

Oxygen Project

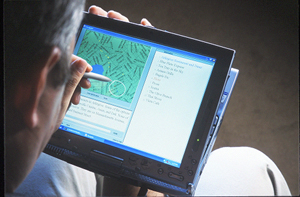

By combining speech and pen-based input (e.g., saying ���what kind of cuisines do they serve,��� while circling a cluster of restaurants displayed on a tablet computer), a user can explore the information space in an intuitive and natural manner. |

The Oxygen Project is aimed at inventing and developing pervasive, human-centered computers. It pulls together an abundance of technological resources toward creating a new breed of systems that cater to human-level needs rather than machine-level details. Oxygen is a collaborative project involving some 150 people from LCS and the Artificial Intelligence Laboratory (AI Lab), as well as researchers from the six companies that form the Oxygen Alliance: Acer, Delta Electronics, Hewlett Packard, Nokia, NTT, and Philips. During the reporting period, Oxygen achieved many notable results, including the successful demonstration of the RAW chip—utilizing a simple, tiled architecture to fully expose the interconnectivity to the compiler—for applications such as beam-forming microphone arrays, the development of location-aware networks to collect streaming data, the integration of speech and vision to support multimodal interactions, and the development and demonstration of the Oxygen operating system, which enables disparate applications and services to run under one umbrella.

Infrastructure for Resilient Internet Systems

The Infrastructure for Resilient Internet Systems (IRIS) Project is developing a novel decentralized infrastructure based on distributed hash tables (DHTs) that will enable a new generation of large-scale distributed applications. DHTs are robust in the face of failures, attacks, and unexpectedly high loads. They are scalable, achieving large system sizes without incurring undue overhead. They are self-configuring, automatically incorporating new nodes without manual intervention or oversight. They provide a simple and flexible interface and are simultaneously usable by many applications.

The approach advocated in IRIS is a radical departure from both the centralized client-server and the application-specific overlay models of distributed applications. This new approach will not only change the way large-scale distributed systems are built but could potentially have far-reaching societal implications as well. The main challenge in building any distributed system lies in dealing with security, robustness, management, and scaling issues; today each new system must solve these problems for itself, requiring significant hardware investment and sophisticated software design. The shared distributed infrastructure will relieve individual applications of these burdens, thereby greatly reducing the barriers to entry for large-scale distributed services.

The IRIS project is a joint project between MIT, the University of California at Berkeley, the International Computer Science Institute at New York University, and Rice University. Initial prototypes of the IRIS software are running on PlanetLab, a worldwide test bed. We hope to make these services open to the public later in the project. The intellectual impact of IRIS is considerable; for example, three of the five most cited papers in computer science from 2001 were written by the IRIS principal investigators.

Physical Random Functions

Silicon chips containing PUFs can be identified and authenticated. Above, the silicon chip Bob is authenticated by applying inputs and precisely measuring wire delays. |

Keys embedded in portable devices play a central role in protecting their owners from electronic fraud. Currently those keys are vulnerable to invasive attack by a motivated adversary, as available protection schemes are too expensive and bulky for most applications. We propose physical random functions (PUFs) as a more secure alternative to digital keys. Each device would be bound to a unique random function that serves as its identity. We have considered precise wire-delay measurement as a way to implement PUFs, and we have shown that manufacturing processes contain enough variability for wire delays to uniquely characterize an integrated circuit. Since these variations are not under control of the manufacturer, we have the unique property that not even the manufacturer can choose to produce indistinguishable devices. Finally, we have developed protocols that use controlled PUFs—PUFs that are physically bound to a control algorithm—as the basis for a flexible trust infrastructure.

Haystack: Personal Information Management

Vannevar Bush's famous "As we may think" sets forth a vision of each individual being able to capture, manipulate, and retrieve all the information with which one interacts on a daily basis. But information retrieval research has given relatively little attention to the individual, emphasizing instead the development of large, library-like search engines for huge corpora such as the web. The Haystack Project returns the focus to personalized information retrieval. A personalized information retrieval system must

- manage all of the digital information with which an individual interacts

- adapt to incorporate all the idiosyncratically selected properties of and relationships between information that matters to the individual user

- present the personalized data model with all of its novel attributes and relationships, adapting to the user's preferred way of looking at information, recording it, perceiving relationships, and browsing

- offer search tools that let users exploit their idiosyncratic mental models of their information in order to find what they need when they need it

The Haystack Project tackles all of these goals. We have designed a semistructured database grounded on semantic networks that is flexible enough to store arbitrary objects—including documents, web pages, and email to do items and appointments, tasks, projects, and images—and arbitrary user-defined properties and relationships. Atop that store is a user interface that introspects the data model and materializes a user interface specialized to the user's data model, one that adaptively arranges the information so as to maximize the user's ability to perceive, manipulate, and retrieve it. As with Bush's original vision, much of the focus is upon associative navigation: one can navigate from document to citation, to author, to institution, to city, to map, and so on. Haystack in its current form supports email management, calendaring, to-do management, web browsing, multiple categorization and browsing of categories, definition of projects and tasks, and association of information with them.

World Wide Web Consortium

W3C provides the vision to lead the web to its full potential and engineers the technologies that build the foundation for this vision. New work is motivated by the accelerating convergence of computers, telecommunications, and multimedia technologies. W3C has issued 60 technical standards to date. During FY2002, 15 standards were released, most based upon W3C's extensible markup language (XML) specification. SOAP (simple object access protocol) 1.2 , the foundational protocol for web services, became a standard. Other work in the web services and semantic web areas is facilitating global sharing of processes and semantically rich data between people and applications. Specifications such as XHTML, XForms, Voice XML, Cascading Style Sheets, and Scalable Vector Graphics are opening the web to a more diverse suite of devices, accessed via multiple modes of interaction. Possible new starts for the coming year include digital rights discovery, resource description framework query and rules, and binary XML. W3C's new Patent Policy provides the consortium with the most clear and comprehensive patent policy in the internet standards industry. The European Research Consortium for Informatics and Mathematics joined MIT and Keio University at W3C Hosts, thus opening access to research institutes in Europe.

Distinguished Lecture Series

Four distinguished speakers participated in this year's Dertouzos Lecturer Series. They were Brian Kernighan, professor of computer science, Princeton University; Monica Lam, professor of computer science, Stanford University; Alfred Spector, vice president of Services and Software, IBM Research Division; and Greg Papadopoulos, senior vice president and chief technology officer, Sun Microsystems.

Organizational Changes

Over the past five years, LCS faculty and researchers have increasingly collaborated with our counterparts at the AI Lab, in part facilitated by joint projects sponsored by NTT and the Oxygen Alliance. This has led to increased intellectual synergy, greater research opportunities, and a general lowering of boundaries. This climate, coupled with our impending move into the Stata Center in 2004, has offered us the opportunity to permanently remove the boundaries set up more than three decades ago. Members of the two labs have recently decided that LCS and the AI Lab will merge into a single entity. We take great pleasure in this generational change and look forward with anticipation to the birth of a new laboratory on July 1, 2003.

More information on the Laboratory for Computer Science can be found on the web at http://www.lcs.mit.edu/.