Generative Models of Images

Phillip Isola

3/26/23

This is a blog version of a 10-minute talk I gave at a "Generative AI roundtable" at CSAIL, MIT on March 20th 2023.

A recording of my talk, and the full roundtable, is here.

Credits:

Thanks to Daniela Rus, Jacob Andreas, and Armando Solar-Lezama for help on prepping the talk.

All graphics were made by me, using Keynote. The bird cartoons were based on the following photos: 1, 2, 3.

Formatting and video editing were aided by ChatGPT and ffmpeg. The teaser and final figure were made using DALL-E 2.

This blog will give a brief, high-level overview of generative models of images. First we will see what these models can do and then I'll describe one way of training them.

Let's start with a model you might be more familiar with, a classifier. Classifiers are used all over the place in data science, and if you've ever taken a machine learning class you will have seen them. They try to assign labels to data. Below we show an image classifier, which will output the label "bird" for the image on the left. If you understand what a classifier does, it can be easy to understand what a generative model does, because a generative model is just the inverse:

A generative model takes as input some description of the scene you want to create, say the label "bird", and synthesizes an image that matches that input. Things are a bit different in this direction than in the classifier direction. How does the model know which bird to generate? This robin does indeed match the label "bird", but it would also have been fine to generate a different kind of bird, or a bird in a different pose.

The way generative models handle this ambiguity is by randomizing the output, which they achieve by inserting random variables into the model. You can think of these random variables as a roll of dice. The dice control exactly which bird you generate: they specify all the attributes that the label leaves unspecified. In our example, one roll of the dice will result in the robin, another roll will result in a blue bird, and rolling again we might get a parrot:

The technical term for the dice is "latent variables." We usually have quite a few dice, maybe 100+, that we are rolling. That is, we have a vector of random variables (the dice) that is input to the generator. You can think of each dimension of this vector as a die, and each die as controlling one type of attribute. The first dimension might control the color of the bird, so randomizing that dimension, rolling that die, will change the color of the bird. Another dimension might control the angle of the bird, and another might control its size:

You can randomly roll these dice and get a random bird, but it turns out you can do something much more interesting. You can use these dice to control the generator's output by setting them to have the values you want. In this usage, the dice are actually more like control knobs. You can spin them randomly and get a random bird or you can set them manually and get the bird you want:
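To make the knob picture concrete, here is a minimal sketch in Python with NumPy. The generator below is entirely hypothetical: it hard-codes what each of three latent dimensions controls (position and size of a blob), whereas a trained model learns this mapping itself, and its dimensions usually don't line up this cleanly with single attributes.

```python
import numpy as np

def toy_generator(z, size=32):
    """Hypothetical generator: render a grayscale blob whose position and size are set by z."""
    ys, xs = np.mgrid[0:size, 0:size] / (size - 1)  # pixel coordinates in [0, 1]
    cx = 0.5 + 0.4 * np.tanh(z[0])    # knob 0: horizontal position
    cy = 0.5 + 0.4 * np.tanh(z[1])    # knob 1: vertical position
    width = 0.1 * np.exp(0.3 * z[2])  # knob 2: size (always positive)
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * width ** 2))

rng = np.random.default_rng(0)
random_image = toy_generator(rng.standard_normal(3))      # spin the knobs randomly
chosen_image = toy_generator(np.array([-2.0, 0.0, 0.0]))  # set them by hand: blob on the left
```

The same function serves both uses: feed it random numbers to get a random output, or feed it values you picked to get the output you want.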

I want to show a real example too, not just cartoons. Below is a generative model, BigGAN [2], that came out in 2018. Already back then you could do the things I'm describing. On the left we have the vector of control knobs that are input to the generator, i.e. the latent variables. The circle is the whole latent space of settings of these latent variables. Turning one knob corresponds to walking along one dimension in the latent space [1]; in the example below, the first dimension turns out to control the bird's orientation. Turning another knob corresponds to walking in another, orthogonal direction in the latent space, and here that controls the background color of the photo:
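Turning a knob can be written as a walk in latent space: start from a latent vector z and add multiples of a unit-length direction. A minimal sketch, assuming a stand-in generator (a fixed random linear map followed by tanh, purely hypothetical; BigGAN itself is a deep convolutional network). The latent size of 128 is an arbitrary illustrative choice.

```python
import numpy as np

def latent_walk(z, direction, alphas):
    """Latent vectors reached by moving from z along a (normalized) direction."""
    direction = direction / np.linalg.norm(direction)
    return [z + a * direction for a in alphas]

# Hypothetical stand-in for a trained generator: a fixed random linear map plus tanh.
rng = np.random.default_rng(0)
LATENT_DIM = 128  # illustrative size, not tied to any particular model
W = rng.standard_normal((64, LATENT_DIM)) / np.sqrt(LATENT_DIM)

def generator(z):
    return np.tanh(W @ z).reshape(8, 8)  # an 8x8 "image"

z = rng.standard_normal(LATENT_DIM)  # a starting point in latent space
knob0 = np.eye(LATENT_DIM)[0]        # turning knob 0 = moving along axis 0
knob1 = np.eye(LATENT_DIM)[1]        # knob 1: an orthogonal direction
alphas = np.linspace(-2.0, 2.0, 5)   # how far to turn each knob
frames0 = [generator(v) for v in latent_walk(z, knob0, alphas)]
frames1 = [generator(v) for v in latent_walk(z, knob1, alphas)]
```

Since `latent_walk` only needs a direction vector, the same function also works for directions that aren't aligned with any single knob.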

So that's how image generators work! The input is some set of controls that can be specified or randomized and the output is imagery.

Now let's turn to how to train these models: how does the generator learn to map from a vector of randomized inputs to a set of output images that look compelling? It turns out there are many ways and I'll describe just one: diffusion models [3]. This approach is really popular right now but next year a different approach might be more popular. The thing to keep in mind is that all the approaches are more alike than they are different. They all work around the same basic principle: make outputs that look like the training data. You show the generator tons of examples of what real images of birds look like, and then it can learn to make outputs that look like these examples.

Just like the generators we saw above, a diffusion model maps from random variables, which we will now call "noise", to images. Figuring out how to create an image from noise turns out to be really hard. The trick of diffusion is to instead go in the opposite direction, and just turn images into noise:

Diffusion is a very simple process, and it's just like the physical process of diffusion. Suppose these birds are not cartoons but balloon animals, filled with colorful gas. If I pop the balloons, the gas will diffuse outward; it will lose its shape and become completely entropic, that is, it will become "noise". Each gas particle takes a random walk. Diffusion models do this to an image: each pixel takes a random walk. What this looks like is an image getting noisier and noisier, as shown in the bottom row of the movie above.
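Here is a minimal sketch of this noising process in Python with NumPy. The exact update rule is an assumption, borrowed from the common "variance-preserving" formulation used in DDPM (Ho et al., 2020): at each step every pixel is shrunk slightly toward zero and nudged by Gaussian noise, so after enough steps almost nothing of the original image is left.

```python
import numpy as np

def forward_diffusion(x0, num_steps=1000, beta=0.01, seed=0):
    """Gradually turn an image into noise; returns every intermediate image."""
    rng = np.random.default_rng(seed)
    x = x0.astype(np.float64)
    trajectory = [x]
    for _ in range(num_steps):
        noise = rng.standard_normal(x.shape)
        # Each pixel takes one random step. The sqrt(1 - beta) factor slowly shrinks
        # the original signal, so the end result is close to pure N(0, 1) noise.
        x = np.sqrt(1.0 - beta) * x + np.sqrt(beta) * noise
        trajectory.append(x)
    return trajectory

image = np.linspace(-1.0, 1.0, 32 * 32).reshape(32, 32)  # a toy 32x32 "image"
traj = forward_diffusion(image)  # traj[0] is the image, traj[-1] is (almost) pure noise
```

Running this on many images gives exactly the kind of image-to-noise sequences that a diffusion model is trained on.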

We can create a lot of sequences like this, each showing a different image turning into noise. Diffusion models treat these sequences as training data in order to learn the reverse process: how to undo the effect of adding noise. Notice that the reverse process is an image generator! It is a mapping from pure noise to imagery. You can think of the reverse process as turning back time, and watching all the gas particles get sucked back into the shape of the balloon animals they came from. Diffusion models generate images by reversing time's arrow.
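In practice the reverse process is usually learned one step at a time: take a real image, noise it to a random step t, and ask a network to predict the noise that was added. A minimal sketch, assuming the noise-prediction loss popularized by DDPM (Ho et al., 2020) and the same noising rule as in the sketch of the forward process; the talk doesn't commit to a particular objective. `model` is a hypothetical placeholder for the neural network. Jumping straight to step t works because t small Gaussian steps add up to a single Gaussian with known variance.

```python
import numpy as np

def make_training_example(x0, t, beta, rng):
    """Jump straight to step t of the noising process; return (noisy image, noise added)."""
    alpha_bar = (1.0 - beta) ** t  # fraction of the original signal left after t steps
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise
    return x_t, noise

def denoising_loss(model, images, num_steps=1000, beta=0.01, seed=0):
    """Mean squared error between the noise the model predicts and the noise actually added."""
    rng = np.random.default_rng(seed)
    losses = []
    for x0 in images:
        t = int(rng.integers(1, num_steps + 1))  # a random point along the trajectory
        x_t, noise = make_training_example(x0, t, beta, rng)
        predicted = model(x_t, t)  # hypothetical: any callable (noisy image, step) -> noise
        losses.append(np.mean((predicted - noise) ** 2))
    return float(np.mean(losses))

images = [np.random.default_rng(i).uniform(-1, 1, (32, 32)) for i in range(8)]

def baseline(x_t, t):
    return np.zeros_like(x_t)  # a "model" that never predicts any noise

print(denoising_loss(baseline, images))  # roughly 1.0, the variance of the added noise
```

Training means adjusting the network's parameters to push this loss below the trivial baseline; a network that predicts the noise well can then subtract it, undoing one step of diffusion at a time.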

Once this system is all trained up, we can roll the dice, sampling a new noise image, and then apply the denoising process to congeal it into a bird. If we roll the dice again we get different noise, and that maps to a different bird.

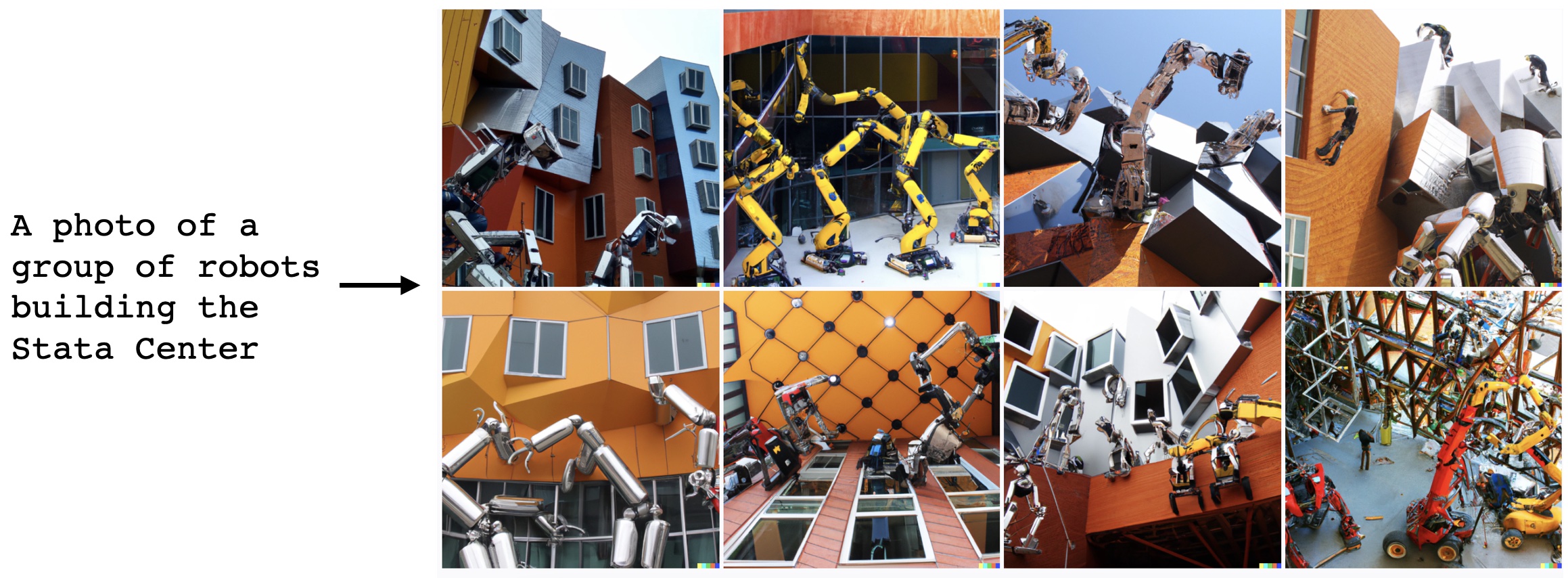
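The sampling loop can be sketched end to end on a toy problem. To keep it self-contained we skip training and use the exact best denoiser for a hypothetical dataset of three 2-pixel "images", which can be computed in closed form. A real system uses a trained neural network instead, and unlike this shortcut, which can only reproduce its three training examples, it can produce new images. The step rule is the deterministic DDIM update (Song et al., 2021), one of several ways to run the reverse process, and the noise schedule is the same assumed one as in the earlier sketches.

```python
import numpy as np

# Hypothetical "training set": three tiny 2-pixel images.
DATA = np.array([[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]])

def ideal_denoiser(x_t, alpha_bar):
    """Best guess of the clean image given a noisy one, for the toy dataset above."""
    # How likely x_t is under each training image, computed in log space for stability.
    sq_dist = np.sum((x_t - np.sqrt(alpha_bar) * DATA) ** 2, axis=1)
    logits = -sq_dist / (2.0 * (1.0 - alpha_bar))
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()
    return weights @ DATA  # posterior-weighted average of the training images

def sample(num_steps=1000, beta=0.01, seed=0):
    """Roll the dice (draw noise), then run the reverse process back to an image."""
    rng = np.random.default_rng(seed)
    alpha_bars = (1.0 - beta) ** np.arange(num_steps + 1)  # alpha_bars[0] == 1: clean
    x = rng.standard_normal(DATA.shape[1])  # start from pure noise
    for t in range(num_steps, 0, -1):
        x0_hat = ideal_denoiser(x, alpha_bars[t])
        eps_hat = (x - np.sqrt(alpha_bars[t]) * x0_hat) / np.sqrt(1.0 - alpha_bars[t])
        # Deterministic DDIM-style step from t back to t - 1.
        x = np.sqrt(alpha_bars[t - 1]) * x0_hat + np.sqrt(1.0 - alpha_bars[t - 1]) * eps_hat
    return x

print(sample(seed=0))  # lands on (essentially) one of the rows of DATA
```

Changing `seed` is rolling the dice again: different starting noise can congeal into a different training example.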

This is how popular models like DALL-E 2 [4] work. You write a sentence like "A photo of a group of robots building the Stata Center" and then the model will sample some noise and congeal it into imagery that matches that sentence, using the reverse diffusion process. The noise acts to specify everything that the text input leaves unspecified -- the camera angle, the type of robot, exactly what they are doing, etc:

Why does the output match the text? That's the job of a separate part of the model. A common strategy is to just collect tons of {text, image} pairs as training data, then learn a function that can score text-image alignment from that data. The generative model is then trained to satisfy this function. See [5] for more details on this approach.
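One concrete version of such a scoring function is the contrastive objective from CLIP [5]: embed texts and images in a shared space and train so that, within a batch, each caption scores highest against its own image. A minimal NumPy sketch of the score and the loss, assuming the embeddings are already computed (in CLIP they come from learned text and image encoders; random vectors stand in for them here). The fixed temperature of 0.07 is an assumption: CLIP learns the temperature and uses 0.07 as its initial value.

```python
import numpy as np

def normalize(v):
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def alignment_scores(text_emb, image_emb):
    """Cosine similarity of every text with every image (rows: texts, columns: images)."""
    return normalize(text_emb) @ normalize(image_emb).T

def cross_entropy_on_diagonal(logits):
    """Mean cross-entropy when the correct column for row i is column i."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))

def contrastive_loss(text_emb, image_emb, temperature=0.07):
    """CLIP-style symmetric loss: text i should match image i, and image i text i."""
    logits = alignment_scores(text_emb, image_emb) / temperature
    return 0.5 * (cross_entropy_on_diagonal(logits) + cross_entropy_on_diagonal(logits.T))

rng = np.random.default_rng(0)
texts = rng.standard_normal((4, 16))                 # stand-ins for 4 caption embeddings
images = texts + 0.1 * rng.standard_normal((4, 16))  # 4 matching image embeddings
print(contrastive_loss(texts, images))                      # small: pairs are aligned
print(contrastive_loss(texts, np.roll(images, 1, axis=0)))  # large: pairs are mismatched
```

Once trained, `alignment_scores` is the kind of text-image score a generator can be steered or trained to satisfy.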

To summarize, image generators map noise to data, and one way to train this mapping is via diffusion. After these models are trained, the mapping ends up having lots of structure. Rather than thinking of the input as "noise", think of it as a large set of control knobs. These knobs can be randomly spun to give random images, or can be steered to create the images a user wants. Text inputs are just a different set of control knobs, and you can add other kinds of controls too, like sketches and layout schematics [6, 7].

References:

[1] On the "steerability" of generative adversarial networks, Jahanian*, Chai*, Isola, ICLR 2020

[2] Large Scale GAN Training for High Fidelity Natural Image Synthesis, Brock*, Donahue*, Simonyan*, ICLR 2019

[3] Deep Unsupervised Learning using Nonequilibrium Thermodynamics, Sohl-Dickstein, Weiss, Maheswaranathan, Ganguli, ICML 2015

[4] Hierarchical Text-Conditional Image Generation with CLIP Latents, Ramesh*, Dhariwal*, Nichol*, Chu*, Chen, 2022

[5] Learning Transferable Visual Models From Natural Language Supervision, Radford*, Kim*, Hallacy, Ramesh, Goh, Agarwal, Sastry, Askell, Mishkin, Clark, Krueger, Sutskever, ICML 2021

[6] Image-to-Image Translation with Conditional Adversarial Networks, Isola, Zhu, Zhou, Efros, CVPR 2017

[7] Adding Conditional Control to Text-to-Image Diffusion Models, Zhang, Agrawala, 2023