| 11.520: A Workshop on Geographic Information Systems |

| 11.188: Urban Planning and Social Science Laboratory |

Due Monday, Sept. 27, 2010

(1) Merging, editing, and saving tables: To do this lab, we'll have to learn a few more ArcMap techniques for merging and saving tables. There are hundreds of census variables and any Cambridge blockgroup map is likely to include only a small subset of them. To use other census data, we'll have to load these data into ArcMap and "join" them with the attribute table of the Cambridge blockgroup theme using a common geographic reference variable (such as the state-county-tract-blockgroup identifier, Stcntrbg). We may also need to compute new fields that normalize or otherwise combine multiple columns of the original data. But the official class datasets are "read-only" and ArcMap requires that you have write access to attribute tables in order to create and calculate new fields. So we'll also need to learn how to save local "writeable" copies of (extracts of) our class datasets.

(2) Spatial Joins: Suppose we'd like to compare the sales prices of Cambridge homes with the socio-economic characteristics of their neighborhoods. For example, we might want to know whether the high-priced sales tended to be in neighborhoods with highly educated adults. By drawing a pin-map of high-priced 1989 sales locations on top of a Cambridge block group map, we may be able to see a pattern. The block group map can be thematically shaded by educational attainment levels. With this map, we can 'see' whether high/low-priced sales cluster in neighborhoods with, for example, more (or less) educational attainment. But the pattern may be misleading or hard to interpret and we may want to quantify the relationship to measure the degree of association and to tag the sales data with the some of the characteristics of their neighborhoods. Much of the benefit of using GIS software depends on its tools for cross-referencing and reinterpreting data based on spatial location. We'll defer until later labs the more general and advanced tools for tagging data based on spatial proximity and we'll focus in this lab on simpler spatial joins and buffer creation.

(3) Setting coordinate system: So far, the coordinate system of the data has already been preset for your use. However, in many cases, you may need to specify or change the coordinate systems associated with GIS datasets that you acquire. In this exercise, you will learn how to apply a suitable coordinate system to your datasets.

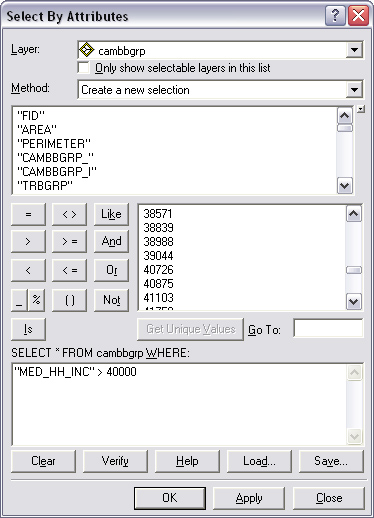

Now we will experiment with querying the data. Select "Select by Attributes" under the "Selection" menu. On the "Select by Attribute" window, make sure to choose Cambbgrp for the "layer" window. In this case, we don't need to worry about which layer to choose because we have only one layer. Otherwise, we have to pick up the correct layer from the drop-down list. In the method box, keep the default choice "create a new selection".

Now you can type your query manually into the box in the bottom left corner of the window, or you can use the tools at the top of the window.

Let's find the block groups with median household income in excess of $40,000 per year. In the "Fields" list, double-click on "Med_hh_inc". Then single-click on the ">" button. Next, you can type in 40000 or scroll down "Values" list until you can double-click on 40000 (or the next lowest value if no block group had a value of exactly $40,000). The query entry window should now show "Med_hh_inc"> 40000

|

The window finally looks like the Fig. 1. Note that attribute names are enclosed in double-quotes and numerical values do not use quotes (and text values would use single quotes). Click "Apply" button to run the query and 'select' these block groups. Notice that all block groups where the median household income is greater than $40,000 are now highlighted in the data display area. If you open the attribute table, you will find that the associated records are also highlighted. You may need to scroll up or down in the attributes window to see the highlighted records. If you click the Selected button, which is at the bottom of the attributes window, only the selected records will be visible. In our case, next to the Selected button, you will see that "28 out of 94 are selected".

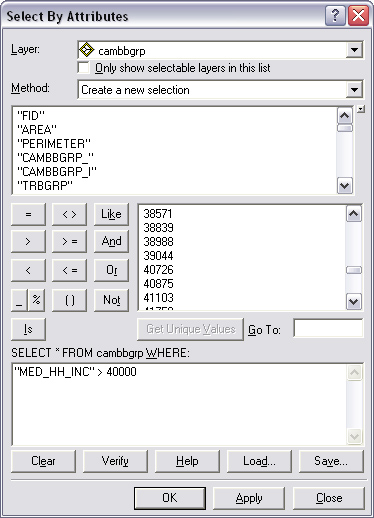

Now close the query window. Make sure the selected records are still highlighted. Select Statistics from the Selection menu. You should see a selection statistics window. Choose Med_hh_inc in the field drop down list (Fig. 2). You should see a window listing some common statistical measures describing the 28 selected records of the Med_hh_inc field. It also generates a frequency distribution diagram (although this frequency distribution may not be visible when you work in the 37-312 lab because of a bug in the software configuration of those machines).

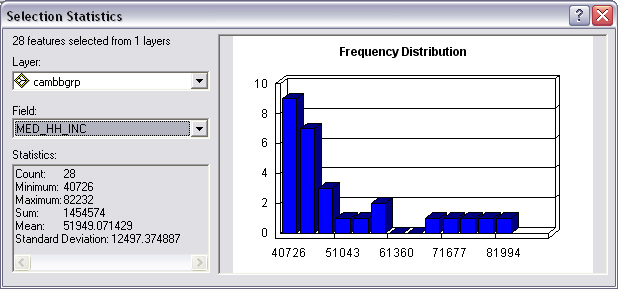

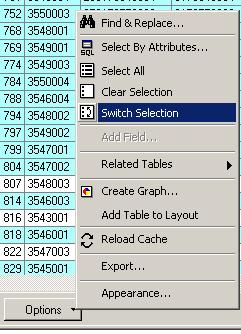

Now close the selection statistics window and return to the ArcMap window. Swap the selected and unselected block groups in the follow way: 1. Open the attribute table; 2. Click Options; 3. On the pop-up menu, select Switch selection.

|

|

|

Select Statistics from the selection menu again to summarize these 66 lower income block groups. Write the mean and standard deviation of these 85 block groups on your lab assignment. (There is more than one way to access the selection statistics. With the attribute table open, try right-clicking on the column heading of the attribute field whose statistics you wish to compute...)

Note from the statistics for these 66 block groups that at least one block group had a median household income of zero. Let's look at these block groups using the select by attributes function. We find that there are some with zero income. Two of these block groups are near Tech Square and one of them is near Harvard Square (where students in dorms count as people but not households). Let's exclude these block groups from consideration and recompute the statistics for the 63 block groups with a median income of $40,000 or less. Specify the selection criterion as "MED_HH_INC" > 0 and "MED_HH_INC"<=40000. Then use the Selection > Statistics menu to compute the mean and standard deviation for those block groups with median household income greater than zero and less than or equal to $40,000. Record these two numbers on your lab assignment. Since we consider the census data to be unreliable for the three block groups with '0' income, we may wish to exclude them from consideration entirely. One way to do this is to set the layer properties for Cambbgrp. Before doing this, however, be sure to clear all selected features (via Selection//Clear-Seleced-Features from the main menu). Double click on the layer's name to bring up the layer property window. Under the tab "definition query", type in "MED_HH_INC" > 0. Click OK. Notice that the Cambbgrp layer no longer shades the three block groups with zero income. They have been excluded from the layer and they are no longer shaded, but are 'donut holes' in the Cambridge map. The data are still there on disk but ArcMap is ignoring the three block groups that don't meet the selection criteria specified in the layer definition criterion. For the remainder of the exercise we will exclude the zero-population block groups from our analysis.

(Universe: Persons 25 years and over) |

|

| Column | Description |

|---|---|

| EDUTOTAL | Total Persons 25 years and over |

| EDU1 | Less than 9th grade |

| EDU2 | 9th to 12th grade, no diploma |

| EDU3 | High school grad (includes equivalency) |

| EDU4 | Some college, no degree |

| EDU5 | Associate's degree |

| EDU6 | Bachelor's degree |

| EDU7 | Graduate or professional degree |

Suppose we wanted to generate a thematic map of the percentage of people (aged 25 and older) who have less than a high school education. The EDU variables in the Attribute table of Cambbgrp table provides the required information. They indicate the 1990 census counts of persons (aged 25 years and over) with various degrees of education as in Table 1.

From the listing, we see that the desired percentage equals

100 * (EDU1 + EDU2) / EDUTOTALIf we have loaded Cambbgrp directly from the class data locker, then the attribute table is read-only so we can't edit the table in order to add a new column that computes this percentage. To overcome this problem, we'd like to create (and edit) a local copy of the table. But there are several dozen columns in the table and we are concerned only with the geographic identifiers and the EDU fields.

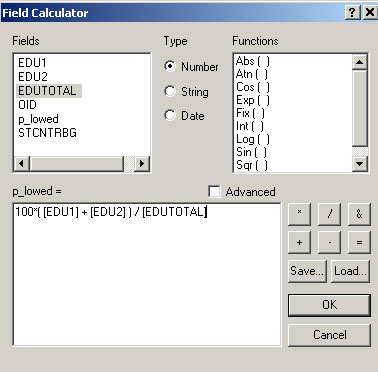

Open the layer properties window, go to the Fields Tab. Turn the 'visible' box off for all but the three needed EDU fields plus the Stcntrbg blockgroup identifier (so we can join this table back to the appropriate block group later on). To turn off a field, first select the field, then uncheck the visible box. When you click 'OK' the table will show only those four fields. Open the attribute table again to confirm this behavior.

Now you need to export the table. Click the Option Button, which

is on the bottom right of the attribute table window. On the pop-up menu, choose

Export. Save the table to a local working directory (such as C:\usertemp). If you have not

registered your working directory with ArcCatalog, you may need

to use the connect to folder![]() button to register it first (Your working directory might be I:\Private\11.520).

Save the table as a dBase-formatted table. Call the table education.dbf.

The *.dbf suffix will be a handy reminder of the data format for the table and

the dBase format is quite portable and easily read by Excel and many other packages. [NOTE: Go through these steps of saving education.dbf even if you are using a local, writeable copy of the original Cambbgrp shapefile, so you learn how to extract and save portions of the attribute table from a large shapefile.]

button to register it first (Your working directory might be I:\Private\11.520).

Save the table as a dBase-formatted table. Call the table education.dbf.

The *.dbf suffix will be a handy reminder of the data format for the table and

the dBase format is quite portable and easily read by Excel and many other packages. [NOTE: Go through these steps of saving education.dbf even if you are using a local, writeable copy of the original Cambbgrp shapefile, so you learn how to extract and save portions of the attribute table from a large shapefile.]

When asked whether you want to add the table to the current map, click Yes. Now this newly saved-to-disk education.dbf table will be included in your map document file. However, you may not be able to see it in the data frame if the bottom tab is set to Display instead of Source. (When the Display tab is active only the mapable layers will be shown.)Change the view of the data frame from Display to Source; and the education.dbf table will be listed. If you clicked the wrong button when asked to add the table to the map file, it won't be listed, but you can now add it in the same way as you add any other map layer or attribute table.

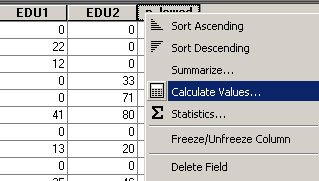

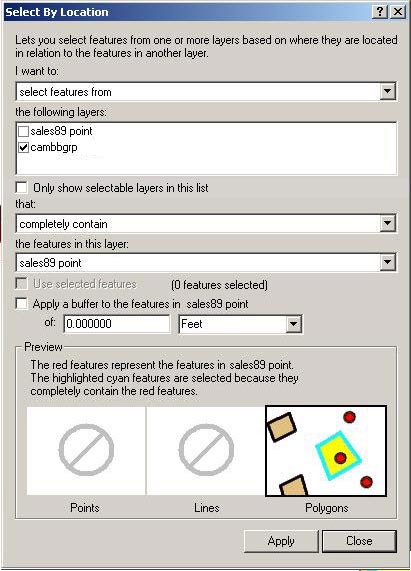

Since you now have write access to your own education.dbf table, you now be able to add a new column for computing your low-education percentages. Right click the table name in the data frame. On the pop-up menu, select Open Attribute Table. On the new window, click on options, and on the pop-up menu, select Add field. Call the new field p_lowed and change the data type to float. Click OK, and you will see a new field appear in the window. Next, right click on the new column name and, from the pop-up menu, select Calculate Values. Click "yes" when a warning message comes up (about not being able to undo the editing). Then set

p_lowed = (this top line should appear by default above the box with the cursor and you should not retype it)

100 * ([EDU1] + [EDU2]) / [EDUTOTAL]

|

|

Sort the table (in descending order) by p_lowed. (Right click on the column name and select Sort Descending.) The highest p_lowed value is 55.5%. What is the third highest p_lowed percentage? How many block groups have more than 40% of their adults lacking a high school diploma? Write the values on your lab assignment.

|

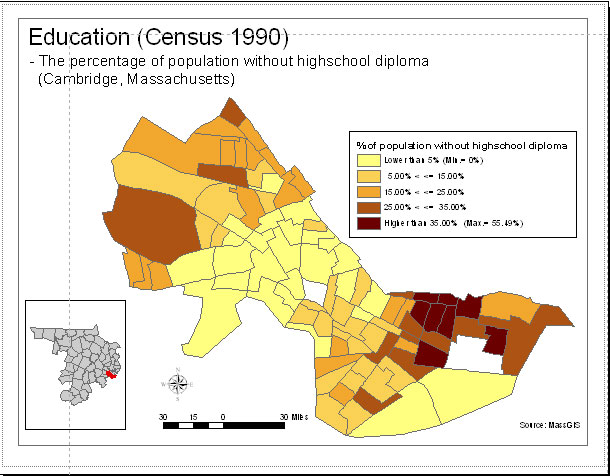

Apply the join and go back to the ArcMap window. Open the attribute table of Cambbgrp. We now find the columns of the education tables appended to the right side of the table following the attributes from the Cambbgrp shapefile. Now, we are ready to shade a thematic map of the newly computed p_lowed field. Open the layer definition window. Go to the Symbology tab. Classify your p_lowed field using 5 quantiles categories and eyeball the results. To save time, we won't ask you to print out this map. Just pick a readable graduated color scheme and take a little time to examine the thematic map: Do the patterns you see match your impression of the socio-economic patterns in Cambridge?

Please note that the table 'joins' are one-directional. If you join the tables in the reverse order, the Attributes of Cambbgrp table will disappear and you won't be able to map the selections from the expanded education table. Finally, note that these Joins are temporary and do not affect the tables that are stored on disk.

.

Suppose we wanted to use the map to examine a few Cambridge block groups near

MIT. This is easily done using the graphical selection tools and Statistics

command from the Selection menu, which we have learned in previous labs and in

Part I above. In the data display area, select the two block

groups along the southern-most edge of Cambridge that contain most of the MIT

campus. Now use the Selection > Statistics menu

item to determine the mean population for those two block groups.

It should be 2535.5.

.

Suppose we wanted to use the map to examine a few Cambridge block groups near

MIT. This is easily done using the graphical selection tools and Statistics

command from the Selection menu, which we have learned in previous labs and in

Part I above. In the data display area, select the two block

groups along the southern-most edge of Cambridge that contain most of the MIT

campus. Now use the Selection > Statistics menu

item to determine the mean population for those two block groups.

It should be 2535.5.

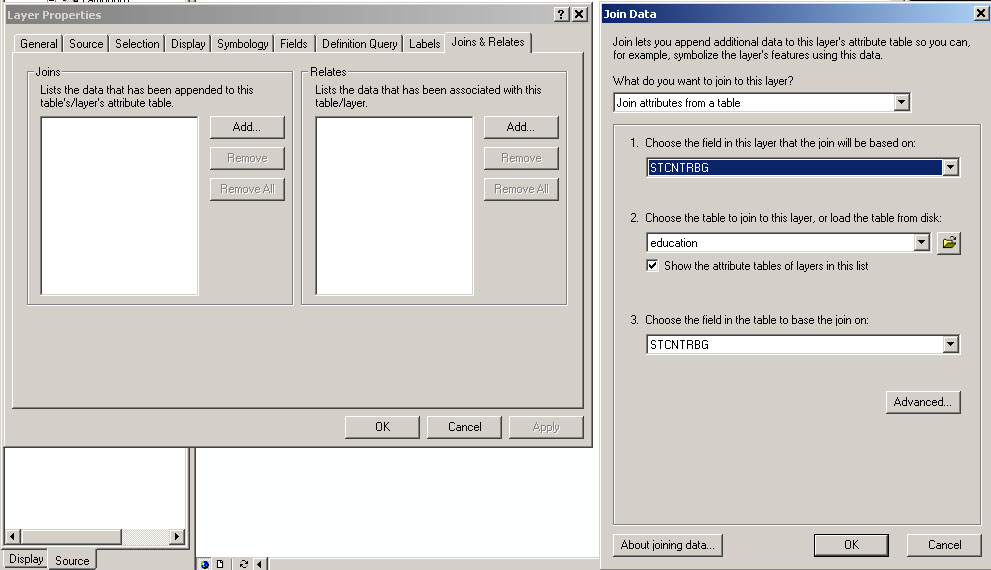

Add the sales89 coverage as a layer in your ArcMap window. Open the layer properties window and go to the definition query tab. Create a query definition query to include in your layer only those sales with a realprice less than $150,000. You should find that 29 of the 222 sales meet this criteria. Now select areas of the city (block groups) where these sales occurred. Go to menu Seletion > Select by location. Specify the criterion as in Figure 3.

|

Click the Apply button. Every block group that contains one or more of the low-priced sales will be highlighted. You have just done a basic "point-in-polygon" query to find the set of polygon features (block groups) which contain a set of point features (the low-priced sales). ]Note: The 29 low-prices sales were located in 21 of the Cambridge block groups.]

Analyzing the results:

These point-in-polygon queries are useful for quick exploration of the data but the summary statistics are only that -- a summary of the patterns that result. As we might suspect, the general trend suggests that low-priced housing tends to be in lower-income, lower-education neighborhoods. But there is quite a bit of variability and the tools we've used so far don't let us move the Attributes of Cambbgr data from the census table over to the appropriate sales89 rows. Doing that would permit us to examine the patterns more closely. In later labs, we'll look at other tools (in the ArcGIS Toolbox) that let us tag the sales89 table with the census data for the blockgroup that contains the sale.

Suppose we want to check whether the lower-priced housing tends to be closer to the major roads. Let's use ArcMap's buffering tools to create a buffer around Mass Ave and see whether the sales in the buffer are relatively high or low priced.

Add cambtigr coverage  to your

ArcMap project. Choose to add the 'arc' features from this coverage. The cambtigr

coverage is stored in the class data locker (M:\data).

This coverage is the Cambridge street coverage that we used in an earlier lab. cambtigr is one of those topologically encoded 'coverages' that we discussed in lecture, rather than a shapefile. Use the 'Select by Attribute' capability utilized earlier in this lab to select only those road segments with FNAME = 'Massachusetts'. (There is one street

segment on the Mass Ave bridge that has FNAME = 'Masssachusetts Ave'! Don't

bother including that link.) Beware of two issues when making this selection:

(1) the field name shows up as 'fname' in the 'definition query' window, but

is listed as 'fename' if you use the 'identify' cursor to click on the street

links display in the map window. That's because column names are allowed to

have aliases - take a look at the 'fields' tab in the layer properties window

and you'll see both names! (2) Also, note that some Mass Ave street segments

are not selected. That's because the FNAME for those segments are listed as

'State Hwy 2A' rather than 'Massachusetts'. This multiple-name issue could

be a problem. In this case, the 55 selected Mass Ave street segments are sufficient

to create a buffer that will enclose the others so we won't need to do extra

work to identify those Mass Ave segments that list the route number instead

of the street name. ArcGIS has some more elaborate database table schemas to

handle such naming and route numbering issues but we won't get into that level

of complexity in this exercise.

to your

ArcMap project. Choose to add the 'arc' features from this coverage. The cambtigr

coverage is stored in the class data locker (M:\data).

This coverage is the Cambridge street coverage that we used in an earlier lab. cambtigr is one of those topologically encoded 'coverages' that we discussed in lecture, rather than a shapefile. Use the 'Select by Attribute' capability utilized earlier in this lab to select only those road segments with FNAME = 'Massachusetts'. (There is one street

segment on the Mass Ave bridge that has FNAME = 'Masssachusetts Ave'! Don't

bother including that link.) Beware of two issues when making this selection:

(1) the field name shows up as 'fname' in the 'definition query' window, but

is listed as 'fename' if you use the 'identify' cursor to click on the street

links display in the map window. That's because column names are allowed to

have aliases - take a look at the 'fields' tab in the layer properties window

and you'll see both names! (2) Also, note that some Mass Ave street segments

are not selected. That's because the FNAME for those segments are listed as

'State Hwy 2A' rather than 'Massachusetts'. This multiple-name issue could

be a problem. In this case, the 55 selected Mass Ave street segments are sufficient

to create a buffer that will enclose the others so we won't need to do extra

work to identify those Mass Ave segments that list the route number instead

of the street name. ArcGIS has some more elaborate database table schemas to

handle such naming and route numbering issues but we won't get into that level

of complexity in this exercise.

Let's extract these Mass Ave road segments into a new shapefile before we create our buffer. By doing this, we can save the smaller Mass Ave file on a local drive for better performance. In addition, we can save it as a shapefile, which will be easier to handle locally that a 'coverage.' Make sure that the Mass Ave road segments are still highlighted and then right-click the camtigr layer and choose Data/Export-Data. Keep the default options to export only the selected features and to use the coordinate system of the layer's source data (i.e., the way it is saved on disk). Set the location for the saved shapefile to be in c:\usertemp (or some other local directory that is writeable) and name the shapefile to be massave.shp. Now you can click 'OK' to save a new shapefile on a local drive with only the Massachusetts avenue road segments. Also click 'OK' to add this new shapefile to your ArcMap data frame. Finally, Open the layer property window of one of the TIGER layers. Under the general tab, change the layer name to Mass Ave.

We are now almost ready to use the 'Buffer' tool in the ArcToolbox to create a half-mile buffer around our 55 Mass Ave road segments. As a final step before creating the buffer, make sure the Data Frame Properties have the map units set to meters and display units are in miles. (Note, the Data Frame coordinate system was set to a Mass State Plane projection based on the save coordinate system information in the first shapefile that we added to our Data Frame. Hence, the map units should already be set and should not be editable within the Data Frame Properties window.)

Open the ArcToolbox window from the Window menu. Then choose the Buffer option from the 'Proximity' listing under 'Analysis Tools'. Choose Mass Ave for the input feature and enter a path and shapefile name for the output feature that will store it in your writeable sub-directory on the local C: drive (e.g., C:\usertemp\[Your_athena_name]\Buffer_of_MassAve.shp. In the 'Distance' portion of the buffering window, set the linear unit to be 0.5 and be sure the units are set to miles. Accept the defaults for the 'side type' and 'end type' (regarding how the shape of the buffer is computed) and change the 'dissolve' setting to 'all' so that overlapping buffers around each street segment are merged. When you've adjusted all these settings, click "Finish" and wait for ArcMap to do the computations. You should end up with a curved sausage-shaped area something like the one in Fig. 6.

|

|

Since this newly created buffer is a shapefile, we can use it just like any other theme. In particular, we can do an exercise similar to "point-in-polygon" example we did earlier in this exercise in order to determine which 1989 housing sales are located within the new buffer. Highlight the sales89 theme (with all the sales not just the low-priced ones) and choose Selection > Select by Location. Select all the sales89 cases that intersect the Buffer of Mass Ave layer. (We used "completely contain" before and "intersect" this time. Do you understand why?) You should find that 140 of the 222 sales are located within the half-mile buffer. What are the mean and standard deviation of sale prices for the sales within the buffer the buffer? Write the values on your lab assignment.

The sales prices in and out of the buffer aren't all that different (compared with their standard deviation). Also, some parts of the buffer falls outside Cambridge and we don't know about home sales prices in those areas. Before reaching any conclusions, we would want to do further analysis. But, this quick tour of spatial selection is enough for today. In subsequent labs, we'll examine lots more of the spatial analysis capabilities of ArcMap.

In this part, you will simply apply a suitable coordinate system to a Middlesex county city boundaries layer for a map you will create. Suppose you would like to create a map layout shown below. As you can see, there is a small map in the left bottom corner. It shows cities in Middlesex county, Massachusetts and it highlights in red the area that is in Cambridge.

|

To create this map, you have to create a new dataframe as you did in lab2.

Rename the new dataframe to legend map and then copy the Cambbgrp

layer and paste it into the legend map dataframe. Add a new layer

middle_county to the legend map dataframe

from M:\data\lab3_county\.

(This new map layer shows all the towns within Middlesex County in Massachusetts).

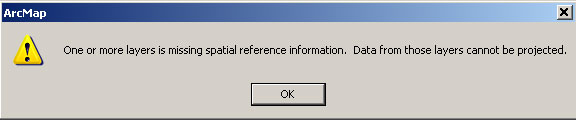

When you add the shapefile, you will get a warning message something like

this:

|

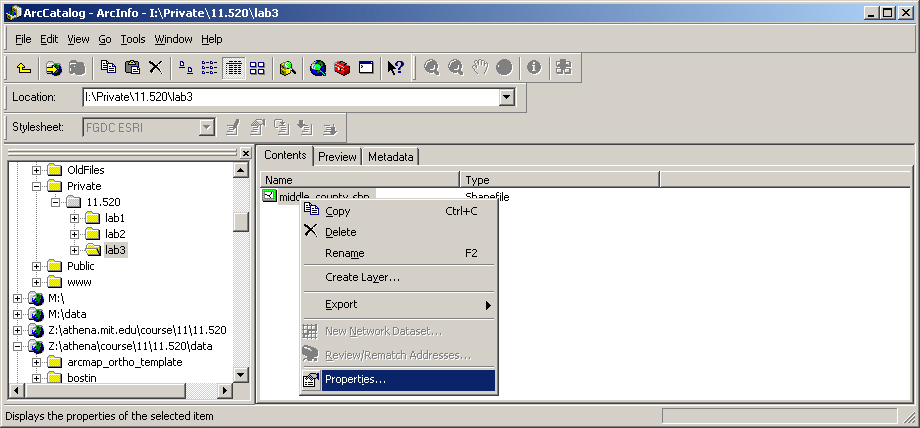

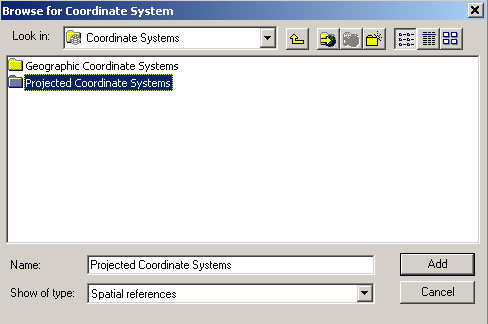

This message notifies you that the spatial reference information is missing from the county layer and may cause problems for further use. In some cases, you may not get an error message but that does *not* mean that all is O.K. Let's check it out. Open the middle_county layer's layer properties window and select the source tab. It shows that the coordinate systems of this layer is "Undefined". Let's fix this. Close the layer properties window and remove county layer from the legend map dataframe. You cannot change the coordinate system of a layer while it is open in ArcMap. Now launch the ArcCatalog program (Start > All Programs > ArcGIS > ArcCatalog).

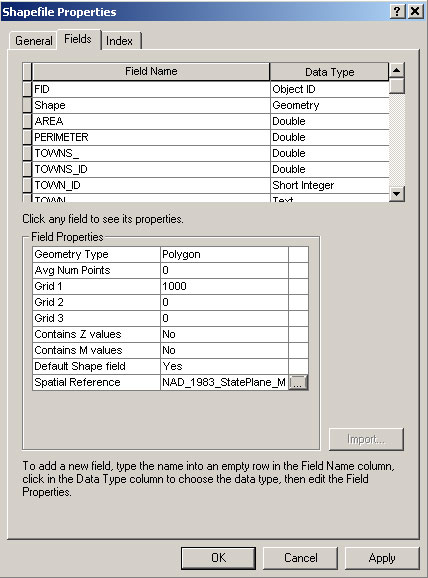

1) Copy and paste the middle_county layer from M:\data\lab3_county\ into your local writeable temporary space (E.g., C:\usertemp\[your_athena_name]\mcounty) You will need a writeable copy of the shapefile in order to change the coordinate system information associated with a shapefile. When you copy and paste the layer, please use ArcCatalog so that all the files that are associated with the layer are properly copied. A GIS layer consists of several files, such as .shp, .shx, .dbf, .sbn, .sbx, and you may miss necessary files when you use windows explore to copy the layer. Click your right mouse button while putting the cursor on the name of the layer. The drop-down menu will show up. Click properties from the list. Then the Shapefile Properties window will show up.

|

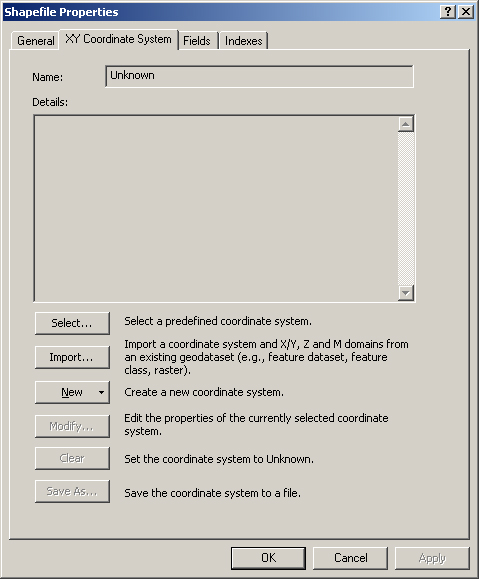

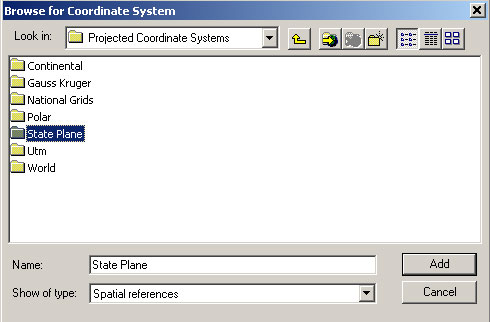

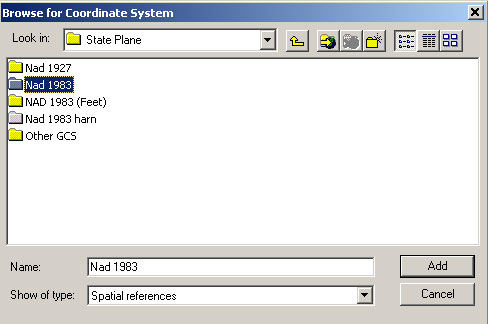

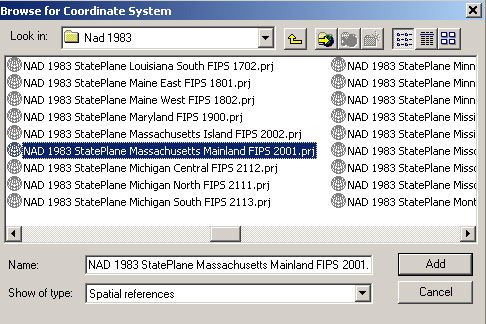

2) Choose the "XY Coordinate System" tab. It will bring up the spatial reference properties window

|

3) Select the appropriate spatial reference system from the list. In this case NAD1983 Stateplane Massachusetts Mainland FIPS 2001.prj is the right one. Select the spatial reference system and click ADD.

|

|

|  |

4) Now the spatial reference for the mcounty layer has been set. Click OK. Now go to the ArcMap and add the mcounty layer to the legend map dataframe. This time no warning message will show up.

|

Knowing how to examine and reset the projection associated with data frames and layers is often handy when integrating data layers that come form different different agencies and sources.

Created by Thomas H. Grayson

and Joe Ferreira.

Modified Sept. 16 2003-08 by Jeeseong Chung,

Jinhua

Zhao,

Xiongjiu Liao, Mi Diao, Yang Chen, Yi Zhu.

Last modified 20 September, 2010, by Joe Ferreira

.

Back to the 11.520

Home

Page.

Back to the CRON

Home

Page.