Masachusetts Institute of Technology - Department

of Urban Studies and Planning

| 11.521 |

Spatial

Database Management and Advanced Geographic Information

Systems

|

| 11.523 |

Fundamentals

of Spatial Database Management

|

Lab 4: Mapping Boston Zoning Variances

26 February 2018

Due: Tuesday, March 6, 2018, at 4:00 pm on Stellar

Purpose:

In this exercise, we'll use our ArcGIS database connections to Postgres to

access the Boston zoning variance database that we discussed in lecture last

week and we will create a 'pin-map' showing the location of various subsets

of zoning variances on the Boston maps that we have used in earlier

exercises. We will also generate a pin-map in Google Earth that

overlays some of the zoning variances as a 'map mashup' on top of the Boston

images that are viewable in Google Earth. On the Postgres side, we will run

queries and prepare views to geocode the variances that zero in on

particular zoning characteristics of interest. On the ArcMap side, we will

symbolize zoning variance locations depending upon their characteristics and

we will 'spatially join' them to the neighborhood statistical areas and

related census data in order to examine the demographics of neighborhoods

surrounding the zoning variances. Note that, since the zoning database is

small, we could use MS-Access database (11.521_lab3.mdb) in place of

Postgres to process the queries and views. However, we want to be sure that

you have some practice pulling Postgres data into ArcMap. [Link to in-class

notes: lab4_inclass.txt]

Needed Info:

- Clark Broida's database of Boston Zoning variances (stored in the ZONING table within

the 'bzoning' schema of our 'class521' database on the Postgres

server. These zoning data are explained further in the 'zoning

database' portion of the SQL

Notes:

- Assorted Boston maps, including Massachusetts Town boundaries,

Boston MSA Census Tract, Boston Neighborhoods, and the East Boston

parcel data.

Data Processing Reminder:

- Double check via Geoprocessing / Environments... that your scratch

work space is set to C:\TEMP when you start working in ArcMap.

- To improve performance and avoid data corruption due to network

latency, copy all shapefiles to a truly local drive, C:\TEMP before

opening and adding datasets into ArcMap

(1) Preparing the ZONING data

Before proceeding with this exercise, get familiar with the zoning

variance data and the associated lookup tables. Review the 'zoning

variances' and 'SQL

examples using zoning variances' portion of the SQL

Notes pages that are linked to the main class webpage. Examine the

various tables and try some of the SQL query example.

In order to map the zoning variances, we need to determine a parcel ID

or some X/Y point location for each zoning variance request. The

original data have a street address but no parcel ID. To save time,

we've already 'geocoded' the addresses of each request in the zoning

variance database in order to get approximate latitude/longitude

locations (expressed as decimal degrees). The geocoding results are

saved in a table in Postgres called lonlat4. However, the

longitude values need to be multiplied by -1 to properly represent

longitude values in the Western Hemisphere (which are negative).

We can use the following SQL query to create a table that makes this

change:

CREATE TABLE public.jf_lonlat5 AS

SELECT casenumber, -1.0 * longitude as longitude, latitude,

streetname, quality

FROM bzoning.lonlat4;

We could have avoided creating a new table by using the following query

to create a 'view' that makes this change:

CREATE VIEW public.jf_lonlat5 AS

SELECT casenumber, -1.0 * longitude as longitude, latitude,

streetname, quality

FROM bzoning.lonlat4;

We prefix the name of the table or view with initials (or Athena

username) so multiple users can avoid unique name conflicts.

Note that the lonlat4 table is prefixed by its schema name

'bzoning'. Since the view name has no prefix, it will be written

into the default schema - which is usually 'public' since the default

search path contains the schema 'public'. However, you can set

your search path to specify the order of consideration of

schemas. SET search_path TO bzoning,public; will look

first in 'bzoning' and then in 'public'. Beware that you have

read-only permission in 'bzoning' but you can save tables and views in

'public'. You will get a 'cannot save' message if your

search_path started with 'bzoning' and you tried to create a new table

or view without prefixing the new name with 'public.'

The use of a decimal place and '0' when multiplying by -1.0 forces

Postgres to treat the second field as a floating point number when

computed 'on the fly' by the view. Beware, however, that some ODBC

drivers have problems guessing the right data types when bringing

Postgres views into ArcMap tables. In this case, we could either

move the table from Postgres into Access and then into ArcMap, or we

will export the Postgres table into a plain text CSV file and read that

into ArcMAP. Alternatively, we could use QGIS, an open source GIS

package that is 64-bit, and connect to Postgres using the same 64-bit

driver that Access is using. (We will make some use of QGIS later in the

semester.)

(2) Connecting ArcGIS to Postgres

2.1) Connect to a Postgres Database from ArcMap

(1) From ArcMap or ArcCatalog, you can construct a database connection

that lets you add tables from Postgres. From ArcMap, click on Add

Data, and navigate to the Database Connections level. From

ArcCatalog, the Database Connections level is also a the top level

(along with 'folder connections', 'toolboxes', etc.). Double-click

'Add Database Connection'.

(2 ) In the pop-up window, select PostgreSQL as the Database Platform,

write the virtual machine cronpgsql.mit.edu as the instance, and leave

Database Authentication as the Authentication Type.

(3) Type your usename and password, deselect the

'save username and password' and select the Database you want to connect

to. In this case, choose zoning.

(4) You will now see a connection called 'Connection to

cronpgsql.mit.edu.sde (or a similar name) appear as one of your

'database connections' in the ArcCatalog pane. You can now click on it

and open any table (or view) stored in that database to which you have

access. Make sure you rename the connection, so that you can identify it

in the future. To do so, click on the ArcCatalog symbol on ArcMap, go to

Database connections, right click on the database you want to rename and

select rename in the drop down menu. We suggest you rename the

connection 'CR-username-class521' where you substitute your MIT user ID

in place of 'username'.

2.2) Import your Postgres table, t_lonlat5, into ArcMap

We have already discussed how to create a temporary table you_lonlat5

using phpPgAdmin or PgAdminIII in your Postgres account. If you have not

already done this, use a 'create table you_xxxx as select ...' command

in Postgres to save your view into a temporary table - and remember

to 'drop table you_xxxx' later on.

Alternatively, instead of connecting ArcMap

to Postgres directly, you could export a table into plain text (CSV)

format and read that text table into ArcMap. We will use the

direct connection but, for the record, here is the way to create a CSV

file from phpPgADMIN.

1) Run a query using your phpPgAdmin

connection to Postgres to obtain all columns and rows of

you_lonlat5: You can use the following query.

SELECT *

FROM you_lonlat5;

2) Export the table in .csv format:

After you run the query, scroll all the way down to the bottom of the

table and click on download. Select the format to be 'CSV', click again

on download, and then export it. Save it to your local working directory

(C:\temp) using the same name you_lonlat5.csv

3) Now, import the table into ArcMap: You

may want to start ArcMap by running the local copy of the map document

file that we used for Lab #2 (eboston05_lab2start.mxd

in C:\temp\eboston05). That way, several useful layers will already be

available and you will remember to start with map documents and

shapefiles on a truly local drive (C:\temp). Click on the Add Data

symbol and navigate to the location of you_lonlat5.csv. Then add the

file to your ArcMap document.

Similarly, we could move Postgres tables into

MS-Access (and then into ArcMap). Just for the record, here are

the instructions to import data from Postgres into Access.

1) In an open MS-Access database (that uses

the 2000-2003 MS-Access format and has a filename ending in .mdb), click

the 'External Data' tab and click on ODBC Database. Select 'Import the

source data into a new table...'

2) In the pop-up 'Select Data Source'

window, click the 'Machine Data Source' tab. You should see

PostgreSQL35W64 as a possible data source name. (If a Postgres

choice is not available, then a 64-bit ODBC postgres driver has not yet

been installed on your machine. See the instructors if you need

help installing such a driver on your personal machine.)

3) Next, a 'postgresql connection' window

pops up and you need to specify the database, user, and password for

accessing the Postgres instance. In our case, the database will be

'zoning', and the server should already be listed as cronpgsql.mit.edu

using port 5432. Type your (athena) username and your personal postgres

password and click 'OK'. After a moment, a new 'import objects'

window pops up with a list of tables that you may be able to

access. Note that the table names are prefixed with the schema

name - in our case 'public'. Select your t_lonlat5 table.

4) Be sure your database is saved as an .mdb

file (not the newer .acdb format), so that ArcMap will be able to access

the tables in this MS-Access database. From ArcMap, click the 'add

data' button to navigate your file system and find the MS-Access

database, and import your table. Check that numeric fields are

properly shown.

(3) Mapping the Zoning Variances

To map the zoning variances, you'll need to convert the

latitude/longitude values in the you_lonlat5 table into 'points'

on your map. Since all the Boston area maps we've been using are in

Massachusetts State Plane coordinates (not latitude/longitude), we'll

have to worry about map projections. There is more than one strategy for

mixing and matching layers with different projections. Which way works

best depends upon whether your datasets have their spatial reference

system identified, what variations you want to combine, and the spatial

reference system with which you want to view the data onscreen. We'll

explain one approach here:

Loading an existing shapefile into an empty Data Frame in ArcMap will

set the coordinate system of the Data Frame to match that of the

shapefile - if the coordinate system of the shapefile is known to ArcMap

(i.e., if there is an *.prj file saved on disk with the name of the

shapefile in place of '*'). Since we will be working today with

shapefiles saved in *different* coordinate systems, it will avoid

confusion to set the coordinate system of the Data Frame explicitly at

the outset. Then when we create a new shapefile from our lat/lon values

for the zoning variances, we can be sure that the lat/lon values are

converted into an appropriate and known projected coordinate system -

namely, the one used by MassGIS for most Mass data layers (and a

slightly different one from what Boston uses!).

(a) Before you add any data layers to an empty Data Frame in

ArcMap, right click on the data frame name, and choose properties. In

the Coordinate System tab, choose "Predefined / Projected Coordinate

Systems / State Plane / Nad 1983(meters) /

NAD_1983_StatePlane_Massachusetts_Mainland_FIPS_2001". This step

identifies the spatial reference system (Mass State Plane) in which you

wish to view the data. Note that there are several NAD 1983 choices.

(For example, NAD 1983 HARN stands for 'high accuracy reference network'

and is a regional update of NAD 1983 (meters) coordinates using GPS

observations). Both NAD 1983 and NAD 1983 HARN should work well but,

since most state datasets are based on NAD 1983, we suggest using that

one.

(b) Now, use the 'add data' button to navigate your file system

to wherever you save the CSV file. Click on t_lonlat5.csv

to add this table to your data frame. Note that your Data

Frame should already have its coordinate system select - either by the

steps above in step (a) or by adding a shapefile with a known projection

into the Data Frame.

(c) Right click on the you_lonlat5 table, and choose Display X,Y

data. In the pop-up window, select the proper fields for X field and Y

field then press OK. This 'Display X-Y Data' window must also indicate

the appropriate coordinate system for these X-Y values. At this point,

the 'Description' of the coordinate system of input coordinates is

likely to be wrong - the system guesses incorrectly that they are the

same as what we set for the new Data Frame. Choose 'Edit' and select

'geographic coordinate systems' using the World WGS84 datum. This

specification lets ArcMap know that the numbers in your X/Y columns are

lat-lon decimal degree values and they must be converted to Mass State

Plane coordinates (using the appropriate datum). [Using WGS84 for the

datum is consistent with what Google Earth and many online mapping

services use as their model of the Earth's surface. It is a slightly

different datum from what Mass State Plane coordinates use (NAD 1983)

and may lead to occasional messages from ArcMap that you have some

coordinate system inconsistencies. Since the earth models are not very

different around Boston, you need not worry about these

inconsistencies.]

When you click 'okay', you may get a message saying, "The table you

specified does not have an Object-ID field so you will not be able to

... " Click okay and just remember that you will need to

save the new layer as a shapefile (and add it back in to ArcMap).

before you can do any spatial operations (such as 'join by location')

with your new mappable zoning variances.

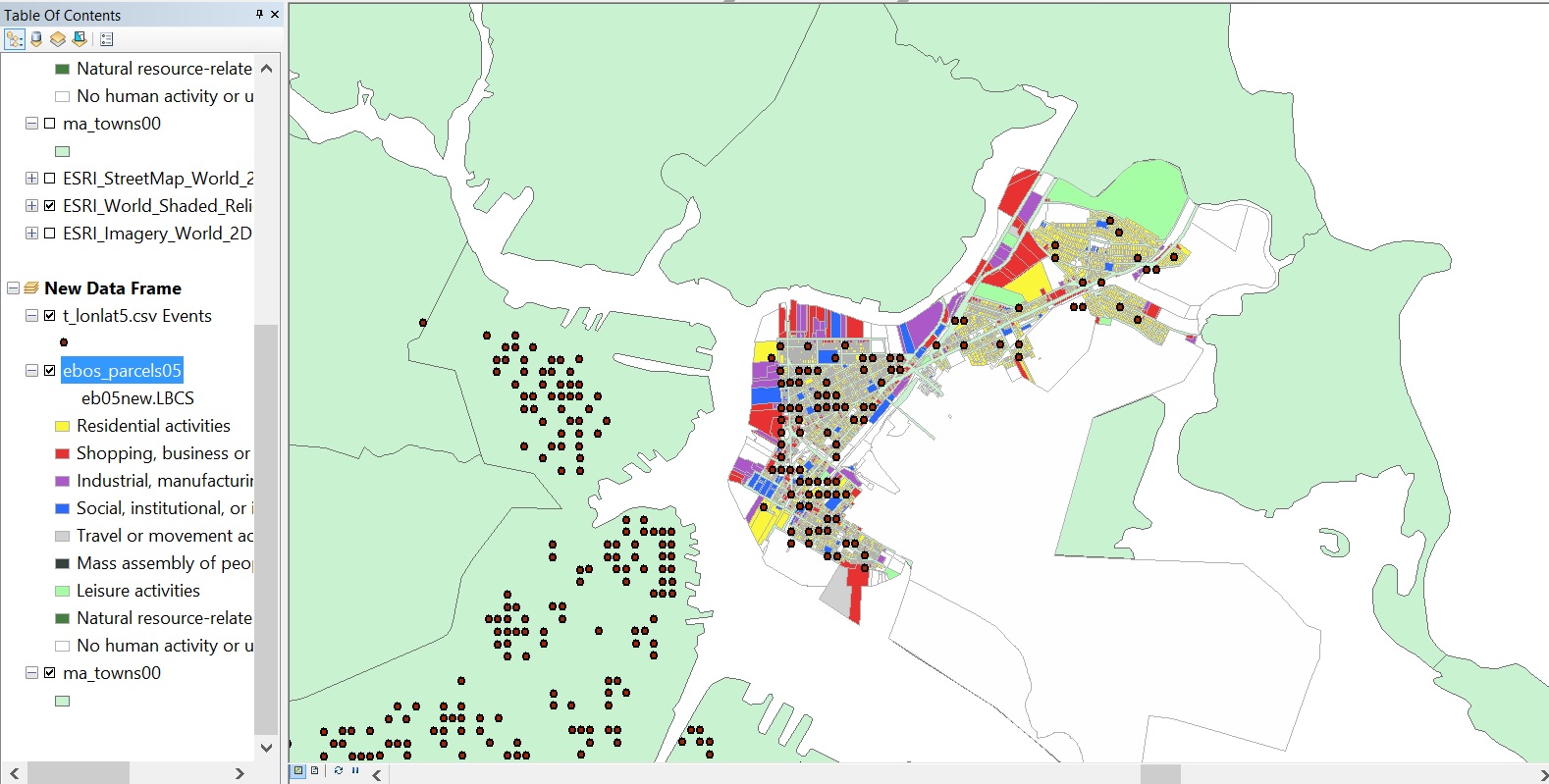

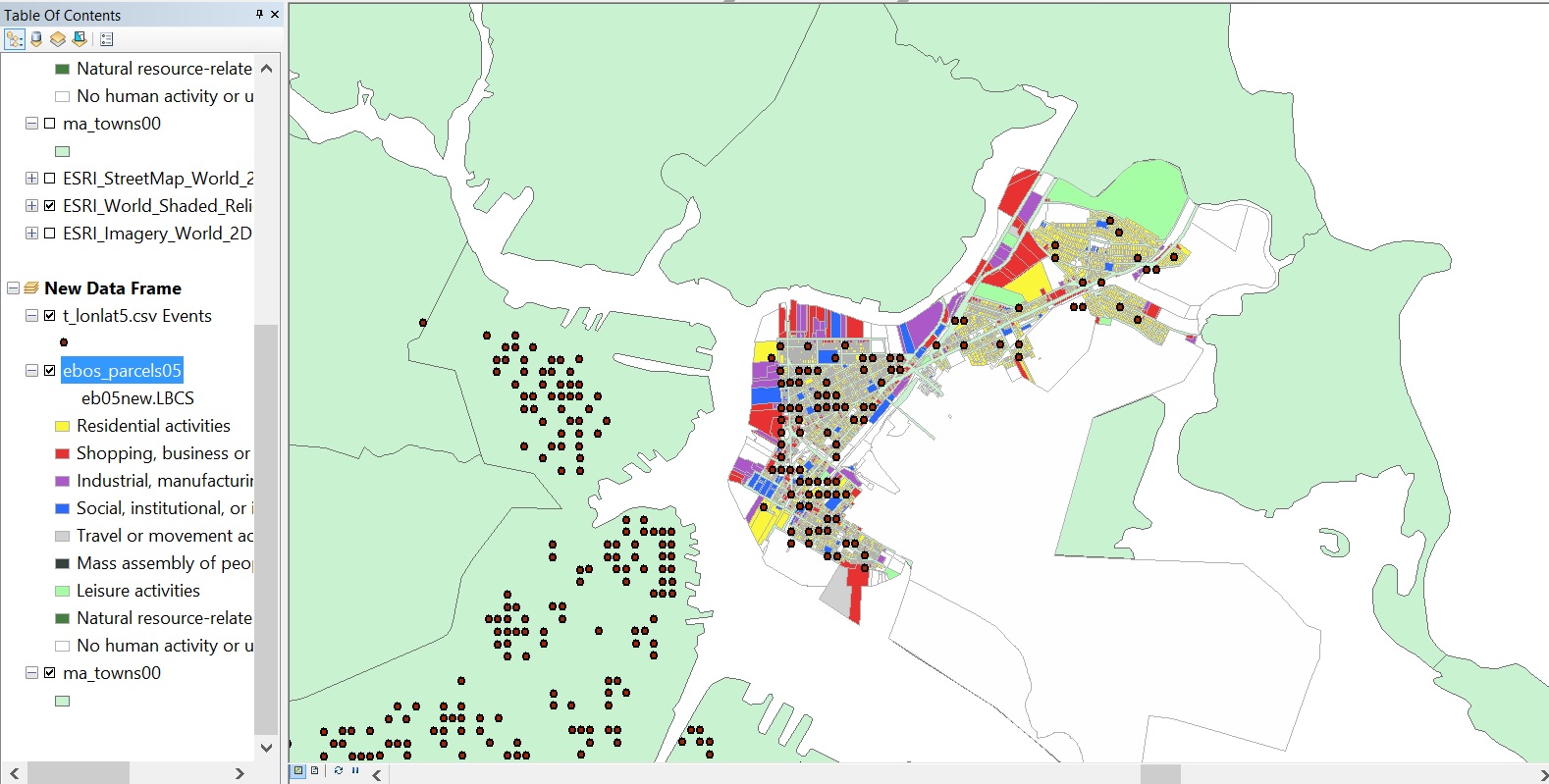

After you click 'okay', ArcMap will generate and display a pinmap (in

Mass State Plane coordinates, NAD83 meters) of the X-Y locations stored

in the lon-lat columns of t_lonlat5. [If the pinmap is not showing,

ArcMap may be mixed up because this XY pinmap is the only layer in the

data frame and ArcMap is confused about coordinates. In this case, add

in a shapefile such as the ma_towns00 or ebos_parcels05

and both that shapefile and your you_lonlat5 events will be displayed.]

(d) Next, be sure to add the Massachusetts Town Boundary layer

to your Data Frame. One place to find this is the ma_towns00 shapefile

in the data sub-directory of the class locker:

//afs/athena.mit.edu/course/11/11.521/data. Since this shapefile

includes a ma_towns00.prj file indicating the spatial reference system

(Mass State Plane, Mainland, NAD83 meters), ArcMap won't complain about

not knowing the coordinate system and the Town map should display

correctly. If the *.prj file were missing, the display would still work

since we have already set the Data Frame to be the same as the projected

coordinate saved on disk in the ma_towns00 shapefile. Also, add the ebos_parcels05

shapefile that we have used in recent labs. (If you started ArcMap using

c:\temp\eboston05\eboston05_lab2start.mxd

from lab #2, then the town boundaries and East Boston 2005 parcels will

already be loaded into the initial Data Frame and you can copy and paste

them into a second Data Frame for lab #4 work.

(e) Although we can now see the zoning variances on our map, we will

have trouble using them in various ArcToolbox operations because they

have not yet been associated with feature IDs and coordinate systems.

Right-click on the 'lonlat5 Events' layer and choose 'data/export-data'

to export the events as a shapefile (with a proper feature ID). Before

clicking 'ok' notice the coordinate system choice. Select 'the data

frame' so that the event locations are saved in Mass State Plane

coordinates rather than lat/lon. Also, pay attention to the location

where you are saving the shapefile. The default is a generic name

(Export_Output) in the default file geodatabase (which is

C:\temp\scratch.gdb if your ArcMap settings match those recommended in

an earlier lab.) We suggest changing the name to something like,

'lonlat5_sp' to indicate that is has the variance locations converted to

Mass state plane coordinates.

If you want to use this shapefile later on, you should explicitly save

it either (a) outside the scratch.gdb geodatabase as we have done with

other shapefiles, or (b) within the scratch.gdb. In either case, you

will need to remember what you have done and, before logging out, copy

the entire shapefile (all 6 or 7 parts) to your flash drive or network

locker, or copy the entire scratch.gdb. If the scratch.gdb has been

sitting in c:\temp and accumulating random datasets for anyone using the

machine, that may not be a good option. However, if you start with a

fresh scratch.gdb file geodatabase (or a personal one that you saved

previously) this choice is a good option. Finally, add your newly saved

shapefile back into ArcMap.

Now (finally!) you have a 'pin map' of the projected zoning variances

showing up within the town boundaries of Boston where they belong. The

data type conversions, coordinate issues, and geocoding (which we did

for you!) do complicate pulling data into GIS. However, if you

understand the data conversion issues and check each step along the way,

there are substantial amounts of mapable data available on the net.

(4) Analyzing Spatial Patterns of the Zoning Variances

(e) Now let's add a few other map themes to ArcMap to give more

meaning to the zoning variance map. Add the msa5_tr90 and planning_districts

shapefiles (showing Eastern Mass 1990 census tracts and Boston

neighborhood boundaries in Mass State Plane coordinates NAD83. (Beware

that msa5_tr90 is in NAD83 [meters] whereas planning_districts

is in NAD93 [feet] - which is used by Boston and has a different origin

[0,0] from NAD83 [meters]). The data files are located in the class

locker //afs/athena.mit.edu/course/11/11.521/data and planning_districts

is in the 'Boston' sub-folder. Since the two shapefiles have a *.prj

projection file, ArcMap knows how to display them properly in the Data

Frame.

(f) Next, let's examine and map various subsets of the zoning

variance cases -- for example, all approved variances. We're not quite

ready to do this since the t_lonlat5 table has none of the zoning

variance characteristics -- we'll have to join it to the ZONING

table using 'casenumber'. We could do the match in Postgres and

then import the resulting table into ArcGIS, or we could import the

zoning table using our connection to Postgres and do the join in ArcMap.

However, due to the 32-bit vs 64-bit issue we are experiencing, we will

have to export the zoning table from Postgres into a csv file and

then import it into ArcMap in the same way we imported t_lonlat5.

Alternatively, we could use the MS-Access database (11.521_lab3.mdb)

that contains the zoning table and pull the rows we want from that

table.

(f) After joining the zoning data to your projected-and-mapped you_lonlat5

data, generate a pin-map showing the location of all variances (that

were geo-referenced) which proposed to convert vacant land

to housing. Your map should be zoomed in to just fit

Boston onscreen and should show city boundaries and census tracts as

well as the zoning variance locations.

(g) Now change the symbols used to draw the variances (involving

vacant land converted to housing) so that a different symbol is used for

those which were APPROVED (code 1 or 2). You will also want to exclude

the missing value cases so the two symbols apply only to

approved/not-approved variances. (There are several ways to distinguish

and symbolize the approved/not-approved variances. For example, you can

copy/paste a second zoning pin-map layer and display approved variances

in one and not-approved variance in the other with different symbology

in each case.)

(h) Next let's examine whether the approval rate of

vacant-land-to-housing variances appears to be different across parts of

Boston that have high/medium/low housing value -- as measured by the

1990 census data. Selected 1990 Census tract data are stored in a table

in the class data locker called msa5tr.dbf in

//afs/athena.mit.edu/course/11/11.521/data. Add this table to your

ArcGIS project. You will have to join (or link) this data table to the msa5_tr90

theme in order to map the census data. The column names in the msa5tr.dbf

table are the official Census Bureau names. The technical documentation

for 1990 STF3 census data is available at: http://mit.edu/11.520/data/census90/census90stf3td.pdf

and contains the data dictionary for these variables. But we'll save you

some time: the H061A001 variable contains the median value of

owner-occupied housing units. Join the msa5tr.dbf

table to msa5_tr90 and use the H061A001 variable to

generate a thematic map of the housing values across the Boston census

tracts. The STCNTYTR column contains the STate+CouNTY+TRact code for

each census tract and will allow you to join the census tract data table

with the census tract shapefile. (For further information about US

Census data and mapping, see the lecture notes from the Fall GIS class,

11.520. For example, http://mit.edu/11.520/www10/lectures/lec6_census.html).

Now zoom in a bit on a part of town of particular interest to you and

fiddle with the map so that the variances (with approved/not-approved

symbols) are readable on top of your thematic map.

(i) Finally, let's ask some questions about the zoning variances

that fall within high/medium/low value tracts. We might like to ask: How

many vacant-to-housing variances are in above-average value tracts (in

the 1990 census)? What fraction are approved? From the map,

we can see which variances fall within the high/medium/low value tracts.

But we can't easily compute the counts and fraction-approved because the

zoning variances aren't 'tagged' with the number of the census tract

that contains them. Here's an opportunity to use some of the spatial

data processing capabilities of our GIS. We can do a spatial join -- in

this case a so-called 'point-in-polygon' operation -- to find out which

zoning variances (points) are contained within which census tracts

(polygons). The spatial join will add the appropriate census tract row

from the msa5_tr90 attribute table to the attribute

table row for each (mapped) zoning variance. Since we have already

joined msa5tr.dbf to msa_tr90, the

spatial join allows us to determine the 1990 median housing value for

the census tract containing each zoning variance.

We still have a few steps to go to answer the questions. First use

ArcGIS's query tools to examine the distribution of housing value across

1990 census tracts and estimate the number of vacant-to-housing

variances in above-average housing value

(1990) tracts and the fraction of them that were approved. Then

calculate the same two numbers for the tracts that have a below-average

housing value and compare them with those of the above-average housing

value tracts.

In addition to computing these numbers, turn in a printout of a table

showing the casenumber, board decision, 1980 nsa number, and 1990 census

tract number for all vacant-to-housing variances that are within

ROSLINDALE (i.e., NSA subnghbrd 49, 50 and 51).

(5) Overlaying the Zoning Variances onto Google Earth

Instead of overlaying our selected zoning variances on top of our

ArcGIS map, we might want to display our selected zoning variances in,

say, Google Earth. The ArcGIS Toolbox provides conversion tools that

allow you to write out an ArcMap layer or ArcMap document in a format

that can be read by Google Earth, Google Maps, and other map viewing

applications that adhere to the 'KML' protocol for representing mapable

3D data. (KML stands for Keyhole Markup Language. Keyhole was the

company that originally developed what became Google Earth. KML is a

variation of a more general geographic markup language (GML) developed

by the Open GeoSpatial Consortium and KML has become a de facto standard

for many map mashups. KMZ is the compiled version of KML.)

Before we try to save a KMZ file containing our zoning variances, we

need to be careful about coordinate systems and in-memory vs.

written-to-disk shapefiles. Your KMZ file needs to use lat/lon

coordinates, not the Mass State Plane coordinates. Let's try to overlay

in Google Earth all the zoning variances that proposed converting vacant

land to housing. Just to be sure we have the desired data and

coordinates, let us save the results from part (4g) above as a layer

file. (Right-click the layer and choose 'save as a layer file') Call

this layer file myzoning.lyr. Now open up a new empty Data Frame (via

Insert/Data Frame from the ArcMap menus), right-click the data frame and

use the properties tab to set the coordinate system to geographic

coordinates using the GCS_WGS_1984 datum. (Actually, it would be better

to use the GCS north american datum 1983 entry since we know that

MassGIS also used the NAD83 datum for its Mass State Plane projection,

but WGS84 is a safe bet for any lat/lon data and it is the datum used by

Google Earth, so we have used that in labs that visualize results in

Google Earth). Now, add the myzoning.lyr layer that you just saved into

this data frame.

We are now ready to export the layer in the KMZ format that Google

Earth can read for the map mashup. In ArcMap, open the Search

pane (by mousing over the 'Search' tab located along the right side of

the ArcMap window). Search for 'kml'. Double click on the 'layer to KML'

choice, set the layer name to be 'myzoning' and the output to be

myzoning.kmz within a writeable directory. Set the 'layer output scale'

to be '1' so the layer is always turned on. (This 'layer to KML' tool is

one of several data conversion tools that fit within the ArcToolbox

hierarchy.) Finally, open Google Earth and click File/Open to navigate

to whereever you saved myzoning.kmz and open the file. If you saved the

KMZ file to a web-readable directory such as the 'www' sub-directory of

your Athena locker, then you could also open myzoning.kmz in Google

Earth via 'Add/Network-link' using this URL:

http://mit.edu/your-athena-id/www/myzoning.kmz

Finally, save and turn in a screen shot of the Google Earth screen once

you have zoomed in to the Boston area and can see the pin-map of your

variances.

Lab Assignment

The only parts of this exercise that you need turn in are:

(a) The cutoff 1990 median housing value that

you used to distinguish above-average value and below-average value

census tracts. (Besides listing the median housing value, add a sentence

or two of explanation regarding how you determined your value. The

results are not sensitive to the precise value - we just want to divide

census tracts into high and low groups with about the same number of

tracts in each group.) Also, turn in the two numbers

(zoning variance count and fraction approved) from both the high income

and low income tracts, plus the Roslindale table from

Part (4-i), and

(b) A PDF copy of your ArcMap layout showing your two

maps of Boston from Parts (g) and (h) with the location of

the vacant-to-housing variances highlighted in various ways, and

(c) A JPG or PNG version of the screen shot showing

your Google Earth map mashup of vacant-to-housing

zoning variances from Part 5.

Try to put this files together in a PDF file. Be sure to put your name and

Athena username within each separate file that you turn in. Upload your work

to the class Stellar site by the start of class on Tuesday, March 6,

2018.

OPTIONAL Question (just for fun...)

For all those variances whose board decision is known, what is the

approval rate (code 1 or 2) broken down by your institutional/landuse

categories from Lab #3? What fraction are in high income neighborhoods

and tracts (as defined earlier) by each institutional/landuse category?

What about for the vacant-to-housing subset? Map the locations of the

variances in the various categories. Do you see any patterns worth

pursuing? Are they closer to major roads, downtown, in more/less dense

residential neighborhoods,...? Is it the ownership type or landuse type

(e.g., single family, two-family, triple-deckers, or apartments) that

account for different approval rates (to the extent that you find

differences)?

Home

| Syllabus

| Lectures

| Labs

| CRON

| MIT

Created by Joe Ferreira, modified

2002-2018 by thg, jinhua, jf, josemg