January-March 1999 Issue

Nuclear Power Safety Regulation:

Reducing Risk and Expense

In 1997, the Nuclear Regulatory Commission announced that safety regulation for nuclear power plants would be changing. In the past, plant operators had to deal with long checklists of tests and inspections. In the future, they would instead set goals for overall plant performance and undertake procedures focusing on specific components and systems whose failure would most threaten plant safety. However, a period of transition is required; and the ultimate success of the new system is not assured. A new Energy Laboratory study demonstrates the practicality and potential economic and safety benefits of the new "risk-informed, performance-based" regulation and points to several obstacles now preventing its full implementation. A major portion of the study focuses on the example of the emergency diesel generator, a back-up electricity-generating device whose failure could pose a serious risk to the reactor core. Based on surveys of experts and data from an operating nuclear plant, the MIT researchers and their industrial collaborators determined that many of the current requirements do not increase safety and some may actually reduce it. They developed a different inspection and maintenance plan that would--according to probabilistic risk assessments--yield the same or greater level of safety with less effort. However, their work suggests that complete implementation of performance-based regulation requires better data bases and analytical models, the integration of expert opinion into the regulatory process, and improved procedures for testing the reliability of components. They conclude that performance-based regulation, properly implemented, could be critical to the future viability of nuclear power and that it might be the source of important safety and economic gains for other regulatory agencies as well.

Traditionally, the regulation of nuclear power has relied on long, fragmented checklists of requirements that safety-related systems in a plant must satisfy. As nuclear safety regulation matured, most of the requirements were adopted in response to some mishap or newly appreciated concern, with no attention paid to the coordination or coherence of the list or the consistency of its effectiveness at plants of differing design. To identify areas of concern, regulators used an approach called deterministic analysis. They reviewed diagrams of nuclear systems and decided which component failures or combinations of failures would lead to serious problems such as core damage. They then required that the components involved have one or more backups that could switch on if the primary component failed. Although deterministic analysis is used in the regulation of most hazards in society today, it has shortcomings. In particular, examinations of nuclear plants were not done systematically and comprehensively in a formal, explicit way. As a result, some important potential failures were missed. Nevertheless, the list of regulatory requirements that resulted is so extensive that power plant owners typically have no time or money to pursue innovations and improvements that might further increase safety.

Recognizing such problems, in the late 1990s the Nuclear Regulatory Commission (NRC) announced that it would be moving to a performance-based approach to nuclear power regulation--a change that Professor Michael W. Golay and his colleagues believe could significantly improve both the safety and economics of nuclear power. Under the new regulatory regime, the goal is not to fulfill a checklist of tasks but to define and achieve goals for system performance. Regulatory requirements are motivated not by the possibility but by the probability that things will go wrong. That probability is quantified using "probabilistic risk assessment" (PRA), a systematic method for analyzing a complex system to determine the risk that certain events will occur. Developed in the 1970s, PRA calculates the probability that each component in the system will fail or will operate outside its "expected" range of behavior. It then calculates how likely that behavior is to affect other components and their operation. Taking all the pieces together, the PRA finally calculates the probability that the overall system will fail.
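The way a PRA combines component probabilities into a system-level probability can be illustrated with a very small sketch. It is not the researchers' model: the component list, the failure probabilities, and the assumption that failures are independent are all hypothetical, chosen only to show how redundant components (all must fail) and single points of failure (any one failing is enough) are combined.

```python
from math import prod

def and_gate(probs):
    """Probability that ALL inputs fail (e.g., redundant backups).
    Assumes the failures are independent."""
    return prod(probs)

def or_gate(probs):
    """Probability that AT LEAST ONE input fails.
    Assumes the failures are independent."""
    return 1.0 - prod(1.0 - p for p in probs)

# Hypothetical failure probabilities, for illustration only.
p_edg = 0.05            # one emergency diesel generator fails when called on
p_offsite_loss = 0.01   # offsite power is lost
p_pump = 0.002          # a cooling pump fails

# Two redundant EDGs: backup power is lost only if both fail.
p_both_edgs = and_gate([p_edg, p_edg])

# No electricity at all: offsite power lost AND both EDGs fail.
p_no_power = and_gate([p_offsite_loss, p_both_edgs])

# Cooling is lost if there is no power OR the pump itself fails.
p_cooling_lost = or_gate([p_no_power, p_pump])

print(f"both EDGs fail: {p_both_edgs:.6f}")
print(f"no power:       {p_no_power:.6f}")
print(f"cooling lost:   {p_cooling_lost:.6f}")
```

Even in this toy case the combined number depends heavily on the assumed inputs, while the comparison between contributors (here, the pump dominates the loss-of-power path) is much less sensitive to them.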

According to Professor Golay, such assessments improve the basis for regulation compared to the use of traditional deterministic analyses alone. PRA predictions of the absolute probability that an overall system will fail are substantially uncertain--a shortcoming that has precluded the use of PRAs as a regulatory tool for two decades. Yet PRAs are excellent at examining all identified combinations and sequences of component failures and determining which are most likely to occur and to cause serious damage. Based on those insights, regulators can focus requirements for maintenance, safety testing, and upgrades on components and systems where improvements will most benefit public safety and where additional information will clarify now-uncertain behavior. As new information reduces uncertainty, PRA analysis can redefine where the greatest potential for improvement lies; and regulations can change accordingly.

Risk-informed performance-based regulation may yield the safety and cost improvements needed to keep nuclear power a viable energy source in the future. Yet such regulations have not been implemented widely. To find out why not, Professor Golay and graduate students Sarah Abdelkadar, Jeffrey D. Dulik, Frank A. Felder, and Shantel M. Utton performed a set of case studies on important components. One focused on the emergency diesel generator (EDG), a device that provides a nuclear plant with electricity in an emergency. Also involved were collaborators from Northeast Utilities Services Corporation and the Idaho National Engineering and Environmental Laboratory.

The research team identified the EDG as a primary target for regulatory improvement for two reasons. First, EDGs are critical to safety. If other sources of electricity are cut off, the EDG must quickly switch from stand-by mode to providing electricity for functions that range from lighting the control room to operating the pumps that provide cooling water to the reactor core to prevent damage. Second, under current regulations, EDGs require frequent and extensive testing and maintenance; yet the requirements were established at a time when there was little relevant operational history for EDGs. A revision of EDG operations reflecting accumulated experience could lead to the replacement of unproductive procedures with more productive ones, yielding a higher level of safety with a lower expenditure of resources.

The case study focused on the EDGs used at Unit 3 of the Millstone Nuclear Power Station, operated by Northeast Utilities. The researchers used several approaches to understand the effectiveness of today's regulations. They surveyed employees who operate, inspect, and evaluate the EDGs at Millstone-3 as well as at other utilities that use the same type of EDGs. They also examined the inspection, maintenance, and overhaul requirements for EDGs used in hospitals, the US Navy, and the Federal Aviation Administration. The message was clear and consistent: the NRC requirements are excessively demanding. In the other settings, EDG inspections and maintenance are less frequent and less intrusive to the diesel, yet the performance and reliability of the EDGs have been satisfactory.

Working with historical data and PRA analyses of the EDGs at the Millstone-3 plant, the researchers recommended specific changes in the NRC requirements. For example, one NRC requirement is to start, load, and operate the EDG for an hour every month (or more frequently if the EDG malfunctions). But when needed, EDGs often must run for dozens of hours; and NRC data show that significant failures can occur throughout the first 17 hours of operation. (Later failures are much less frequent.) The proposed plan therefore replaces the monthly, one-hour test with a 24-hour test performed every 12 months and whenever the reactor is refueled (typically every 18 months).

The current NRC requirements also call for a major inspection every 18 months that requires partially disassembling the EDG. Industry data show that these intrusive procedures rarely reveal failures. Moreover, operators can introduce new defects as they inspect and reassemble the EDG, thereby actually decreasing the reliability of the device. Such intrusive procedures are not part of the inspection in the other EDG-using industries.

The proposed plan replaces such tasks with more thorough visual inspections and extensive monitoring during the testing procedures. Such monitoring has been made possible by advances in computer technology during the past ten years. The proposed monitoring can replace certain inspection and maintenance tasks. For example, rather than periodically checking the tightness of bolts in the exhaust manifold, operators would continuously monitor exhaust pressure and leakage--an early warning of loose bolts. Operators also now must check the alignment of the crankshaft and the condition of the bearings--tasks that are very intrusive and difficult to perform and have never revealed problems at Millstone-3. Vibration monitoring and oil analysis would achieve the same goal. According to the researchers' analysis, the proposed monitoring system would provide information about the occurrence of about 90% of the events that together contribute about half of the current risk of EDG failure. (Because such monitoring methods are not yet in use, a period of learning would be required to develop the expertise needed for interpreting the data obtained.)

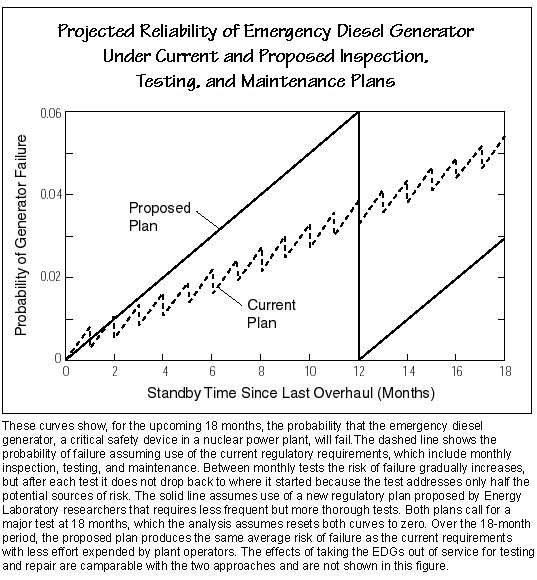

To see how their proposed changes would affect the risk of plant failure, the researchers used PRA techniques to calculate the probability of EDG failure for the upcoming 18 months under the two plans. In the figure above, the dashed line assumes use of current testing procedures, while the solid line assumes use of the researchers' proposed plan, including monitoring during tests. The jagged shape of the dashed line reflects the increase in the probability of failure between one monthly test and the next. The tasks undertaken in the monthly test address only half of the potential risk of failure. Therefore, after each monthly test the probability of failure drops by only half of the past month's increase rather than falling to zero. The gradual upward climb continues until the 18-month point, when operators will perform the major (intrusive) procedures. Subsequently, the probability of failure drops back to zero.

The solid line reflecting the probability of failure under the proposed plan behaves quite differently. Because there are no monthly tests, it climbs continuously until the annual test, when it drops back to (at least approximately) zero. The major test performed at 18 months (during the refueling outage) also resets the probability of failure back to approximately zero.

For the first 12 months, the probability of failure is generally higher with the one-year test; for the next six months, it is lower. But over the 18-month span, the average probability of failure is about the same with the two approaches. The results for the proposed plan can be varied by changing the duration and frequency of the extensive tests.
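The shape of the two curves can be reproduced with a simplified model. The sketch below is an illustration, not the researchers' PRA: it assumes that the probability of failure grows linearly between tests, that the growth rate is the same under both plans, and that the 24-hour test resets the probability exactly to zero. Only the "half of the past month's increase" behavior of the monthly test comes from the description above. Values are in units of one month's increase.

```python
import math

HORIZON_MONTHS = 18  # interval between refueling outages

def current_plan(t, growth=1.0, test_coverage=0.5):
    """Relative failure probability at month t with monthly one-hour tests.
    Each test removes only `test_coverage` of the past month's increase."""
    full_months = math.floor(t)
    residual = (1.0 - test_coverage) * growth * full_months
    return residual + growth * (t - full_months)

def proposed_plan(t, growth=1.0, annual_test_month=12):
    """Relative failure probability at month t with one 24-hour test
    at month 12 (assumed here to reset the probability to zero)."""
    t_since_reset = t - annual_test_month if t >= annual_test_month else t
    return growth * t_since_reset

def average(plan, steps_per_month=100):
    """Time-averaged value of `plan` over the 18-month span (midpoint rule)."""
    n = HORIZON_MONTHS * steps_per_month
    dt = 1.0 / steps_per_month
    return sum(plan((i + 0.5) * dt) for i in range(n)) / n

print("current plan average: ", round(average(current_plan), 3))
print("proposed plan average:", round(average(proposed_plan), 3))
```

Under these assumptions the two averages come out close to each other (about 4.75 versus 5.0), and the proposed-plan curve lies above the current-plan curve for most of the first 12 months and below it afterward, which matches the qualitative behavior described above. Changing `annual_test_month` or adding further resets shows how the result shifts with test frequency.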

These results do not take into account several factors. One is the increased probability of failure due to having only one of two EDGs in service during the testing. At first glance, it may appear that shutting down one EDG for a full 24 hours would be worse on that score. But every test requires time for disconnecting and then reconnecting the EDG. Therefore, the total "out-of-service" time with the annual 24-hour test is probably comparable to that with the one-hour tests performed every month. The analysis also does not account for the increase in the probability of failure immediately after the major intrusive procedures required every 18 months under the current plan--an increase thought to be far greater than that associated with the non-intrusive tests of the proposed plan.
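The out-of-service comparison is simple arithmetic once a per-test overhead for disconnecting and reconnecting the EDG is assumed. The article gives no figure for that overhead, so the values below are hypothetical; the sketch only shows how large the overhead must be for one annual 24-hour test to match twelve monthly one-hour tests.

```python
def annual_out_of_service_hours(tests_per_year, run_hours, overhead_hours):
    """Total hours per year that one EDG is unavailable because of testing."""
    return tests_per_year * (run_hours + overhead_hours)

def break_even_overhead(monthly_tests=12, monthly_run=1.0, annual_run=24.0):
    """Per-test overhead c (hours) at which both plans take the same time:
    monthly_tests * (monthly_run + c) == annual_run + c."""
    return (annual_run - monthly_tests * monthly_run) / (monthly_tests - 1)

# Hypothetical overhead of 2 hours per test, for illustration only.
overhead = 2.0
monthly_total = annual_out_of_service_hours(12, 1.0, overhead)
annual_total = annual_out_of_service_hours(1, 24.0, overhead)

print("monthly one-hour tests:", monthly_total, "hours per year")
print("annual 24-hour test:   ", annual_total, "hours per year")
print("break-even overhead:   ", round(break_even_overhead(), 2), "hours")
```

With these inputs, any overhead above roughly an hour per test makes the single annual test the smaller contributor to out-of-service time. The sketch ignores the additional test at the refueling outage.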

A final, hard-to-quantify effect involves employee morale. Plant operators now perform their job "by the book," simply to meet written requirements. Knowing that the tasks they undertake provide a valid contribution to plant safety would likely improve employee morale and performance and hence plant safety. Moreover, because the proposed plan would involve fewer required activities--and probably fewer repair operations--labor costs and other expenses would drop.

The researchers conclude that their proposed plan would reduce both risks and expenses--and performing the 24-hour test more frequently would reduce risks still further. While performance-based regulation thus looks promising, the EDG case study points to three issues that must be addressed before it can be implemented confidently. First, researchers need more data and better analytical techniques (both PRA and deterministic). Especially important is an improved understanding of the types of failures that specific components experience, how often each type occurs, and whether failures become more likely as the interval between tests is increased.

Accomplishing those tasks will take some time; and even then conclusive information may not be available for phenomena such as human performance, management quality, and common cause failures. Therefore, objective analytical evidence must be supplemented by the subjective judgment of experts in the field. Researchers need to develop more formal methods for integrating such judgment into the regulatory process; and society must accept the premise that advice from a panel of "wise men" can be a sound basis for regulation, even though the practice--like trial by jury--cannot be defended quantitatively.

Finally, procedures for testing components must be revised. Today, the acceptability of a component is often determined not by testing but by examining its pedigree. (Was it made using high-quality manufacturing processes and machines?) This approach can be improved by following the lead of the manufacturing and electronics industries, where statistical quality-control standards are high and techniques to meet them are well developed. Of particular importance is adopting a clearly defined program of repair and subsequent testing for components that fail in service.

Professor Golay stresses that the results of this study may have implications for other regulatory bodies, including the Environmental Protection Agency, the Occupational Safety and Health Administration, and the Federal Aviation Administration. The NRC is in a good position to implement performance-based regulation because the nuclear industry has been the subject of many more systematic analyses of sources of risk than has any other industry. The results of those analyses provide a foundation for the switch to performance-based regulation. Given the projected improvements in safety and cost, other agencies may be advised to follow the NRC example, investigating sources of risk in their fields and subsequently moving toward performance-based regulation.

Michael W. Golay is a professor of nuclear engineering. Shantel M. Utton and Jeffrey D. Dulik received their MS degrees from the Department of Nuclear Engineering in February 1998. Sarah Abdelkadar received her MS degree from MIT's Technology and Policy Program in 1998. Frank A. Felder is a PhD candidate in that program. This research was supported by the University Research Consortium of the Idaho National Engineering and Environmental Laboratory. Further information can be found in references.