Deep Learning

Overview: We have developed algorithms for neural architecture search and for training neural networks with limited labeled data. Together, these methods aim to reduce the need for human expertise and labor when designing deep learning systems.

Neural Architecture Search with Reinforcement Learning

Paper Code

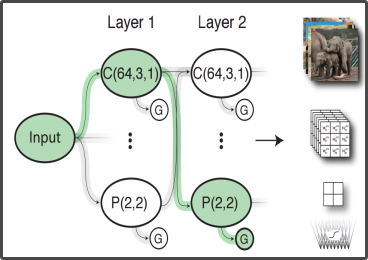

At present, designing convolutional neural network (CNN) architectures requires both human expertise and labor. We developed a reinforcement learning agent to automatically generate high-performing CNN architectures for a given learning task. On image classification benchmarks, the agent-designed networks (consisting of only convolution, pooling, and fully-connected layers) beat human-designed networks created from the same layer types.
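As a rough illustration of how an agent can assemble a network layer by layer, here is a minimal sketch that assumes a tabular Q-learning agent with an epsilon-greedy policy. The depth limit, the state encoding, the `toy_accuracy` reward, and all function names are hypothetical placeholders; a real search would train each sampled network and use its validation accuracy as the reward.

```python
import random

LAYERS = ["conv", "pool", "fc"]  # layer types named in the text above
MAX_DEPTH = 4                    # assumed depth limit for this toy example


def toy_accuracy(arch):
    """Hypothetical reward: stands in for training `arch` and measuring accuracy."""
    score = 0.5 + 0.1 * arch.count("conv") + 0.05 * arch.count("pool")
    if arch[-1] == "fc":
        score += 0.1
    return min(score, 1.0)


def state_at(arch, depth):
    """State = (layer index, type of the previous layer)."""
    return (depth, arch[depth - 1] if depth else "input")


def sample_architecture(q, epsilon, rng):
    """Pick layers one at a time with an epsilon-greedy policy over Q-values."""
    arch = []
    for depth in range(MAX_DEPTH):
        state = state_at(arch, depth)
        if rng.random() < epsilon:
            action = rng.choice(LAYERS)
        else:
            action = max(LAYERS, key=lambda a: q.get((state, a), 0.0))
        arch.append(action)
    return arch


def update_q(q, arch, reward, lr=0.1):
    """Q-learning update along one sampled architecture; only the last step is rewarded."""
    for depth in reversed(range(len(arch))):
        key = (state_at(arch, depth), arch[depth])
        if depth == len(arch) - 1:
            target = reward
        else:
            nxt = state_at(arch, depth + 1)
            target = max(q.get((nxt, a), 0.0) for a in LAYERS)
        q[key] = (1 - lr) * q.get(key, 0.0) + lr * target


def search(episodes=200, seed=0):
    """Run the search and return the best architecture seen and its reward."""
    rng = random.Random(seed)
    q, best_arch, best_acc = {}, None, -1.0
    for ep in range(episodes):
        epsilon = max(0.1, 1.0 - ep / (0.5 * episodes))  # explore first, exploit later
        arch = sample_architecture(q, epsilon, rng)
        acc = toy_accuracy(arch)
        update_q(q, arch, acc)
        if acc > best_acc:
            best_arch, best_acc = arch, acc
    return best_arch, best_acc
```

Calling `search()` returns a list of layer names together with the toy reward it achieved. Swapping `toy_accuracy` for a function that actually trains and evaluates the network is the expensive part of any real search.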

Designing Neural Network Architectures using Reinforcement Learning

Baker, Gupta, Naik, and Raskar

International Conference on Learning Representations (ICLR) 2017

Accelerating Neural Architecture Search using Performance Prediction

Paper Data

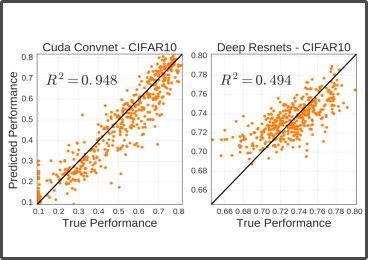

Hyperparameter optimization and metamodeling methods are computationally expensive because they must train a large number of neural network configurations. We obtain significant speedups for these methods with an early-stopping strategy that predicts the final validation accuracy of partially trained configurations. The predictor is a frequentist regression model trained on features derived from the time series of validation accuracies, the architecture, and the hyperparameters.
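The early-stopping idea can be sketched as follows. This is an illustrative example only: it swaps in a small ridge regression as the frequentist model, uses just two learning-curve features (architecture and hyperparameter features are omitted), and applies a simple threshold rule. The function names, features, and stopping rule are assumptions, not the published method.

```python
def curve_features(val_accs):
    """Features from a partial learning curve: bias, last accuracy, mean gain per epoch."""
    last = val_accs[-1]
    gain = (val_accs[-1] - val_accs[0]) / max(len(val_accs) - 1, 1)
    return [1.0, last, gain]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_ridge(xs, ys, alpha=1e-6):
    """Fit weights w minimizing ||X w - y||^2 + alpha ||w||^2 via the normal equations."""
    d = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) + (alpha if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(d)]
    return solve(xtx, xty)


def predict(w, features):
    """Predicted final validation accuracy for one feature vector."""
    return sum(wi * fi for wi, fi in zip(w, features))


def should_stop(w, partial_curve, best_so_far, margin=0.0):
    """Stop training early if the predicted final accuracy cannot beat the best so far."""
    return predict(w, curve_features(partial_curve)) < best_so_far - margin
```

In use, `fit_ridge` would be trained on the partial curves and final accuracies of configurations that were run to completion, and `should_stop` would then be queried for each new configuration after its first few epochs.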

Accelerating Neural Architecture Search using Performance Prediction

Baker*, Gupta*, Raskar, and Naik

International Conference on Learning Representations (ICLR) Workshops 2018

Also presented at the Neural Information Processing Systems (NIPS) Workshop on Meta-Learning 2017

Learning with Limited Labeled Data

Paper Code

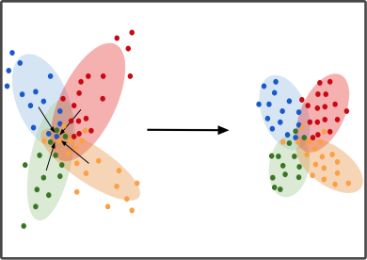

We propose two additional loss terms for end-to-end neural network training on fine-grained visual classification (FGVC) tasks: (a) a loss term that maximizes the entropy of the output probability distribution, and (b) a loss term that minimizes the Euclidean distance between output probability distributions. These methods obtain state-of-the-art performance on all six major fine-grained classification datasets and also demonstrate improved localization ability.
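A minimal sketch of the two loss terms, written in plain Python for a single example (or a single pair of examples). The weights `gamma` and `lam`, the use of the squared distance, and the way pairs are formed are assumptions made for illustration; the papers listed below give the exact formulations.

```python
import math


def softmax(logits):
    """Convert raw network outputs into a probability distribution."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Standard classification loss for one example."""
    return -math.log(probs[label])


def entropy(probs):
    """Shannon entropy of the output distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def squared_euclidean(p, q):
    """Squared Euclidean distance between two output distributions."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def max_entropy_loss(logits, label, gamma=0.1):
    """(a) Cross-entropy minus a weighted entropy term, so higher entropy lowers the loss."""
    probs = softmax(logits)
    return cross_entropy(probs, label) - gamma * entropy(probs)


def pairwise_confusion_loss(logits_a, label_a, logits_b, label_b, lam=0.1):
    """(b) Cross-entropy on both examples plus a penalty on the distance between outputs."""
    pa, pb = softmax(logits_a), softmax(logits_b)
    ce = cross_entropy(pa, label_a) + cross_entropy(pb, label_b)
    return ce + lam * squared_euclidean(pa, pb)
```

Both terms act as regularizers: they discourage the network from becoming overconfident on classes that differ only in subtle visual details.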

Improving Fine-Grained Visual Classification using Pairwise Confusion

Dubey, Gupta, Guo, Farrell, Raskar, and Naik

European Conference on Computer Vision 2018

Maximum-Entropy Fine Grained Classification

Dubey, Gupta, Raskar, and Naik

Neural Information Processing Systems (NIPS) 2018

Machine Learning for Social Good

Overview: We have developed computer vision algorithms that use Street View imagery to evaluate how people across the globe perceive street scenes, and to measure populations and the economy at street-level resolution and global scale.

Streetchange: Measuring Physical Urban Change at Scale

Website Paper

Streetchange is a computer vision algorithm that calculates a metric for change in the built infrastructure of a street block using two images of the same location captured several years apart. Streetchange is based on semantic segmentation and a machine learning model that predicts human perceptual preferences for street scenes. Streetchange data for 1.5 million street blocks from five US cities showed that a dense, highly educated population was the most important predictor of urban growth.

We used Streetchange to create an interactive visualization that has been visited by thousands of users to date.

Computer Vision Uncovers Predictors of Physical Urban Change

Naik, Kominers, Raskar, Glaeser, and Hidalgo

Proceedings of the National Academy of Sciences (PNAS) 2017

Do People Shape Cities, or Do Cities Shape People?

Naik, Kominers, Raskar, Glaeser, and Hidalgo

National Bureau of Economic Research (NBER) Working Paper 2015

Media Mentions: Citylab, Fast Company, Forbes, Harvard Gazette, HBS Working Knowledge/Quartz, MIT News, New York Times, Yahoo! News.

Quantifying Global Urban Perception with Deep Learning

Paper Code Data

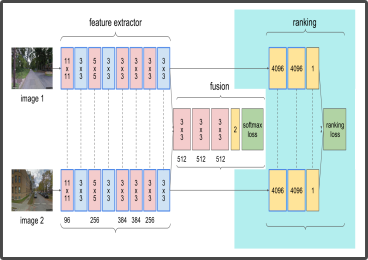

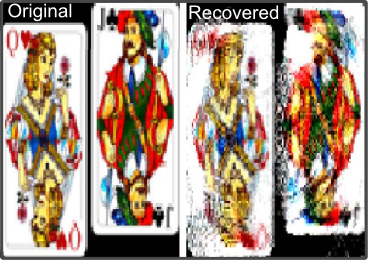

We extended Streetscore with an efficient neural network-based method that computes several perceptual attributes of the built environment for hundreds of cities across all six inhabited continents. Our method is based on a Siamese-like convolutional neural architecture, which learns from a joint classification and ranking loss to predict human judgments of pairwise image comparisons.
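To make the idea of a joint classification and ranking loss concrete, here is a small sketch for one pairwise comparison. It assumes the shared network produces a scalar score for each image plus a logit for "the left image wins"; the hinge form of the ranking term, the margin, and the weight `lam` are illustrative assumptions rather than the exact loss used in the paper.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def ranking_loss(score_left, score_right, left_won, margin=1.0):
    """Hinge loss: the preferred image should outscore the other by at least `margin`."""
    diff = score_left - score_right if left_won else score_right - score_left
    return max(0.0, margin - diff)


def classification_loss(logit_left_wins, left_won):
    """Binary cross-entropy on the prediction of which image the human preferred."""
    return softplus(-logit_left_wins) if left_won else softplus(logit_left_wins)


def joint_loss(score_left, score_right, logit_left_wins, left_won, lam=1.0):
    """Weighted sum of the classification and ranking terms for one comparison."""
    return (classification_loss(logit_left_wins, left_won)
            + lam * ranking_loss(score_left, score_right, left_won))
```

Because both branches share weights, the per-image scores learned through the ranking term can be applied to a single image at test time, without needing a comparison partner.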

Deep Learning the City: Quantifying Urban Perception At A Global Scale

Dubey, Naik, Parikh, Raskar, and Hidalgo

European Conference on Computer Vision 2016

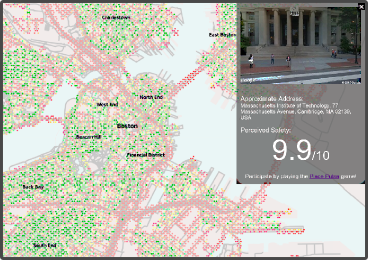

Streetscore – Quantifying Urban Appearance

Website Paper Talk Blog Post

Streetscore is a computer vision algorithm that predicts how safe the image of a street looks to a human observer. We used Streetscore to generate the largest dataset of urban perception to date, covering more than 1 million street blocks from 21 American cities. Social scientists have used Streetscore to study the relationship between the visual appearance of cities and mobility, migration, social cohesion, health outcomes, urban upkeep, and zoning policies.

We used Streetscore to create an interactive visualization that has been visited by thousands of users to date.

Streetscore – Predicting the Perceived Safety of One Million Streetscapes

Naik, Philipoom, Raskar, and Hidalgo

IEEE Computer Vision & Pattern Recognition Workshops 2014

Cities Are Physical Too: Using Computer Vision to Measure the Quality and Impact of Urban Appearance

Naik, Raskar, and Hidalgo

American Economic Review: Papers & Proceedings 2016

Are Safer Looking Neighborhoods More Lively?: A Multimodal Investigation into Urban Life

De Nadai, Vieriu, Zen, Dragicevic, Naik, Caraviello, Hidalgo, Sebe, and Lepri

ACM Multimedia Conference 2016

Media Mentions: Atlantic Citylab, Daily Mail, The Economist, Fast Company, MIT News, New Scientist.

Predicting Household Income from Street-level Imagery

Paper

We developed a computer vision model to predict the median household income at the block group level from Street View images in New York and Boston. We are now extending this work to developing countries, where high-quality data on socioeconomic characteristics is often unavailable. This work is part of a journal article that discusses the promises and limitations of new digital sources of data for studying cities.

Big Data and Big Cities: The Promises and Limitations of Improved Measures of Urban Life

Glaeser, Kominers, Luca, and Naik

Economic Inquiry 2017

Media Mentions: The Atlantic, Chicago Policy Review, Forbes/HBS Working Knowledge.

Time-of-flight and 3D Imaging

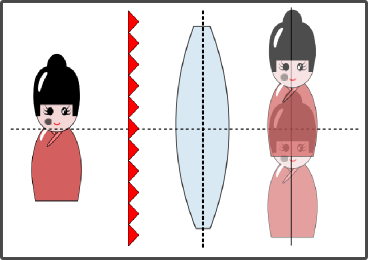

Overview: Time-of-flight (TOF) cameras enable three-dimensional imaging and the study of the interaction between light and the physical environment. I have used continuous-wave TOF imaging (e.g., Microsoft Kinect v2) and Ultrafast TOF imaging (e.g., streak cameras) to develop methods for multipath interference correction, reflectance capture, and imaging through scattering media, among other applications. For a summary of this work, see the invited paper at SPIE Defense+Security 2016.

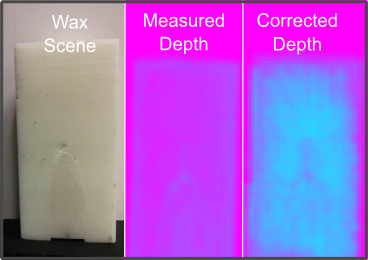

Multipath Interference Correction in TOF Cameras

Paper Supplement

This was my internship project at Microsoft Research with Sing Bing Kang. We proposed a method to correct for multipath interference in TOF cameras using a closed-form solution enabled by the separation of direct and global components of light transport. We implemented this method using a Microsoft Kinect v2 sensor and an infrared projector.
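The following sketch illustrates why separating the direct and global components helps. It uses the standard continuous-wave TOF relation between phase and depth, d = c·φ / (4πf), and models each pixel measurement as the sum of a direct phasor and a global (multipath) phasor. The modulation frequency, depths, and amplitudes are made-up values, and the global component is simply assumed to be known here; how it is estimated in practice is the subject of the paper and is not shown.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def tof_phasor(amplitude, depth_m, freq_hz):
    """Phasor a CW-TOF pixel would record for light returning from a given depth."""
    phase = 4.0 * math.pi * freq_hz * depth_m / C
    return cmath.rect(amplitude, phase)


def depth_from_phasor(z, freq_hz):
    """Invert the phase-to-depth relation d = c * phi / (4 * pi * f)."""
    phase = cmath.phase(z) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * freq_hz)


freq = 30e6                                # assumed modulation frequency (Hz)
direct = tof_phasor(1.0, 1.5, freq)        # light that bounced once, true depth 1.5 m
global_part = tof_phasor(0.5, 2.5, freq)   # assumed multipath light with a longer path
measured = direct + global_part            # what the sensor actually records

naive_depth = depth_from_phasor(measured, freq)
corrected_depth = depth_from_phasor(measured - global_part, freq)
```

In this toy setup `naive_depth` overestimates the true 1.5 m distance because the longer multipath light shifts the measured phase, while `corrected_depth` recovers it once the global component is removed.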

A Light Transport Model for Mitigating Multipath Interference in Time-of-flight Sensors

Naik, Kadambi, Rhemann, Izadi, Raskar, and Kang

IEEE Computer Vision & Pattern Recognition 2015

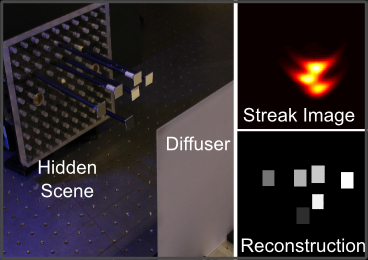

Imaging Through Diffuser

Paper

We proposed a method to estimate the reflectance of a scene hidden behind a diffuser by analyzing the backscattered light with an ultrafast TOF camera. Unlike coherence-based methods, our method relies on optimization techniques and is suitable for imaging large objects. This work has potential applications in imaging through biological tissue.

Estimating Wide-angle, Spatially Varying Reflectance using Time-resolved Inversion of Backscattered Light

Naik, Barsi, Velten, and Raskar

Journal of the Optical Society of America A 2014

Selected by Editors to appear in a Special Issue of Virtual Journal of Biomedical Optics.

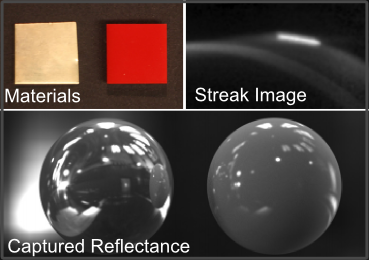

BRDF Capture With Ultrafast TOF Imaging

Paper Video

We proposed the first method to rapidly capture the BRDF of multiple materials simultaneously using Ultrafast TOF imaging. Our method can capture BRDFs over long distances from a single viewpoint without encircling equipment. BRDF capture is useful for photorealistic rendering, image relighting, and material identification.

Single View Reflectance Capture using Multiplexed Scattering and Time-of-flight Imaging

Naik, Zhao, Velten, Raskar, and Bala

ACM SIGGRAPH ASIA 2011

Depth Sensing using Microprism Arrays

This was my internship project at Samsung Research America with Pranav Mistry's Think Tank Team. I proposed a novel, passive depth sensing architecture using microprism arrays and validated its performance using optical simulations and optics bench experiments. I also developed template matching algorithms to improve raw depth estimates and implemented gesture recognition algorithms. US patent pending.

Applications of Ultrafast TOF Imaging

Pose Estimation using Time-resolved Inversion of Diffuse Light

Raviv, Barsi, Naik, Feigin, and Raskar

Optics Express 2014

Frequency Analysis of Transient Light Transport with Applications in Bare Sensor Imaging

Wu, Wetzstein, Barsi, Willwacher, O'Toole, Naik, Dai, Kutulakos, and Raskar

European Conference on Computer Vision 2012