Understanding the intrinsic memorability of images

Phillip Isola, Devi Parikh, Antonio Torralba, Aude Oliva

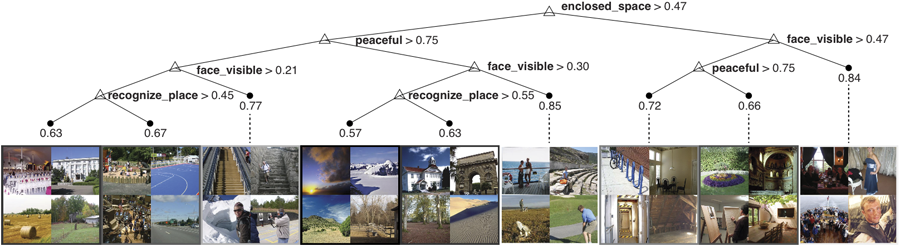

Images clustered in 'memorability space'. Number above each set of four images gives the memorability of that cluster.

Abstract

Artists, advertisers, and photographers are routinely presented with the task of creating an image that a viewer will remember. While it may seem like image memorability is purely subjective, recent work shows that it is not an inexplicable phenomenon: variation in memorability of images is consistent across subjects, suggesting that some images are intrinsically more memorable than others, independent of a subject's contexts and biases. In this paper, we used the publicly available memorability dataset of Isola et al., and augmented the object and scene annotations with interpretable spatial, content, and aesthetic image properties. We used a feature-selection scheme with desirable explaining-away properties to determine a compact set of attributes that characterizes the memorability of any individual image. We find that images of enclosed spaces containing people with visible faces are memorable, while images of vistas and peaceful scenes are not. Contrary to popular belief, unusual or aesthetically pleasing scenes do not tend to be highly memorable. This work represents one of the first attempts at understanding intrinsic image memorability, and opens a new domain of investigation at the interface between human cognition and computer vision.

Paper preprint

Isola, P., Parikh, D., Torralba, A., Oliva, A. Understanding the intrinsic memorability of images. To appear in Advances in Neural Information Processing Systems (NIPS), 2011.

Data

CVPR2011 Image memorability dataset. Includes target and filler images, precomputed features and annotations, and memorability measurements from our "Memory Game". Described in Isola et al. 2011.

Feature annotations -- Matlab structure with all 923 annotation types we considered during feature selection.

Bibtex

@inproceedings{IsolaParikhTorralbaOliva2011,
  author="Phillip Isola and Devi Parikh and Antonio Torralba and Aude Oliva",
  title="Understanding the intrinsic memorability of images",
  booktitle="Advances in Neural Information Processing Systems",
  year="2011"
}

Acknowledgments

We would like to thank Jianxiong Xiao for providing the global image features. This work is supported by the National Science Foundation under Grant No. 1016862 to A.O., CAREER Awards No. 0546262 to A.O. and No. 0747120 to A.T. A.T. was supported in part by the Intelligence Advanced Research Projects Activity via Department of the Interior contract D10PC20023, and ONR MURI N000141010933.