About me

I am an associate professor in EECS at MIT studying computer vision, machine learning, and AI.

Previously, I spent a year as a visiting research scientist at OpenAI, and before that I was a postdoctoral scholar with Alyosha Efros in the EECS department at UC Berkeley. I completed my Ph.D. in Brain & Cognitive Sciences at MIT, under the supervision of Ted Adelson, where I also frequently worked with Aude Oliva. I received my undergraduate degree in Computer Science from Yale, where I got my start in research working with Brian Scholl. A longer bio is here.

News

• ⭐ Quanta magazine published a very nice, general-audience article covering the platonic representation hypothesis: link

• We have released a free online copy of our computer vision textbook here: visionbook.mit.edu

Research Group

The goal of our group is to understand fundamental principles of intelligence. We are especially interested in human-like intelligence, which to us means intelligence that is built out of neural nets, is highly adaptive and general-purpose, and is emergent from embodied interactions in rich ecosystems.

Questions we are currently studying include the following, which you can click on to expand:

Our goal in studying these questions is to help equip the world with the tools necessary to bring about a positive integration of AI into society: to understand intelligence so that we can prevent its harms and reap its benefits.

The lab is part of the broader Embodied Intelligence and Visual Computing research communities at MIT.

PhD Students: Caroline Chan, Hyojin Bahng, Akarsh Kumar, Shobhita Sundaram, Sharut Gupta, Ishaan Preetam-Chandratreya, Kaiya (Ivy) Zhao, Yulu Gan, Adam Rashid, Ching Lam Choi

Postdocs: Prafull Sharma

Undergraduates: Sophie Wang, Cheuk Hei Chu

Former Members and Visitors

Interested in joining the group? Please see info about applying here.

Recent Courses

6.s058: Advances in Computer Vision (Spring 2025)

6.7960: Deep Learning (Fall 2024)

6.s953: Embodied Intelligence (Spring 2024)

New papers (All papers)

Digital Red Queen: Adversarial Program Evolution in Core War with LLMs
Akarsh Kumar, Ryan Bahlous-Boldi, Prafull Sharma, Phillip Isola, Sebastian Risi, Yujin Tang, David Ha
arXiv 2026. [Paper (web)][Paper (pdf)][Code][Blog]

Words That Make Language Models Perceive
Sophie L. Wang, Phillip Isola, Brian Cheung
arXiv 2025. [Paper][Website][Code]

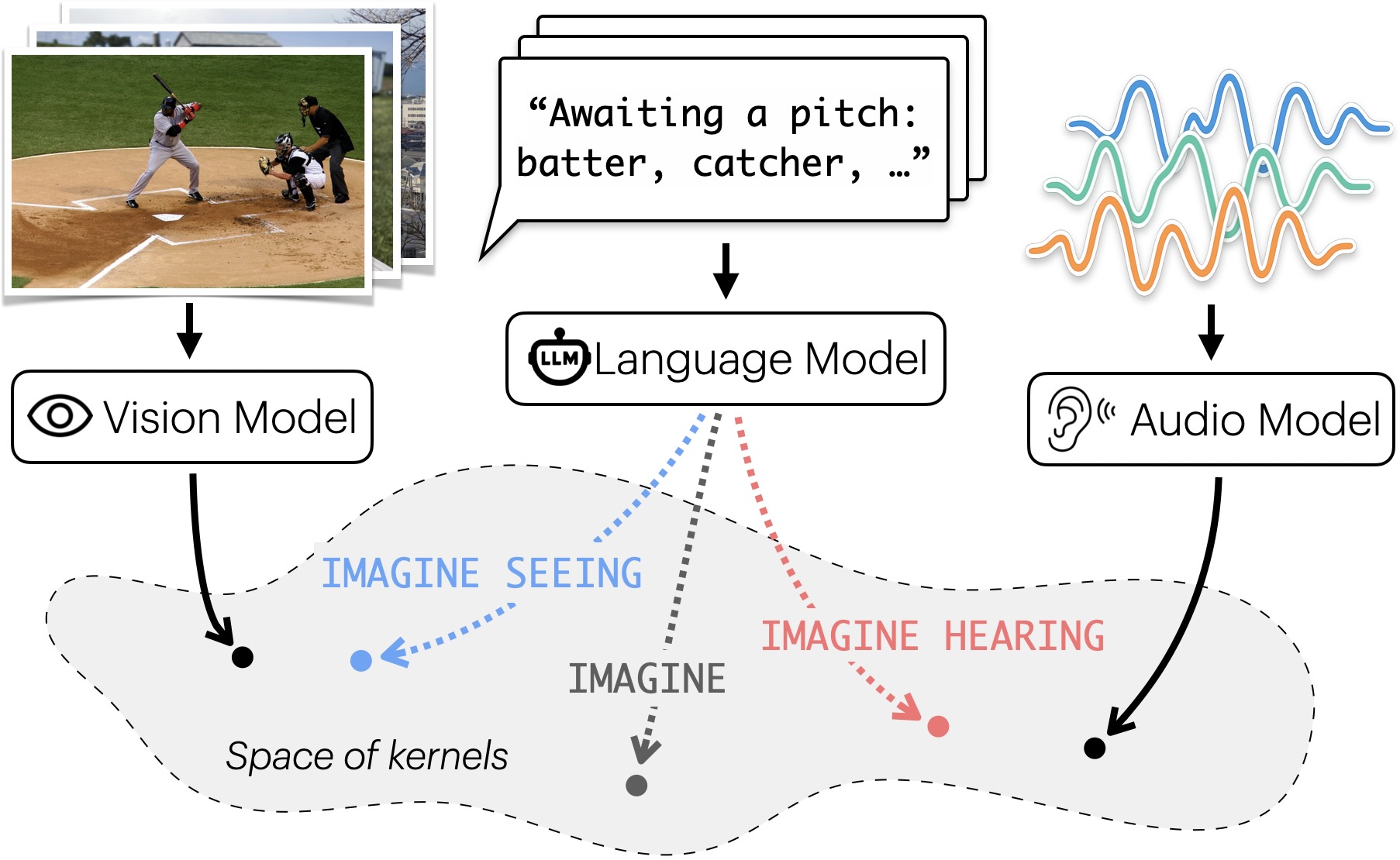

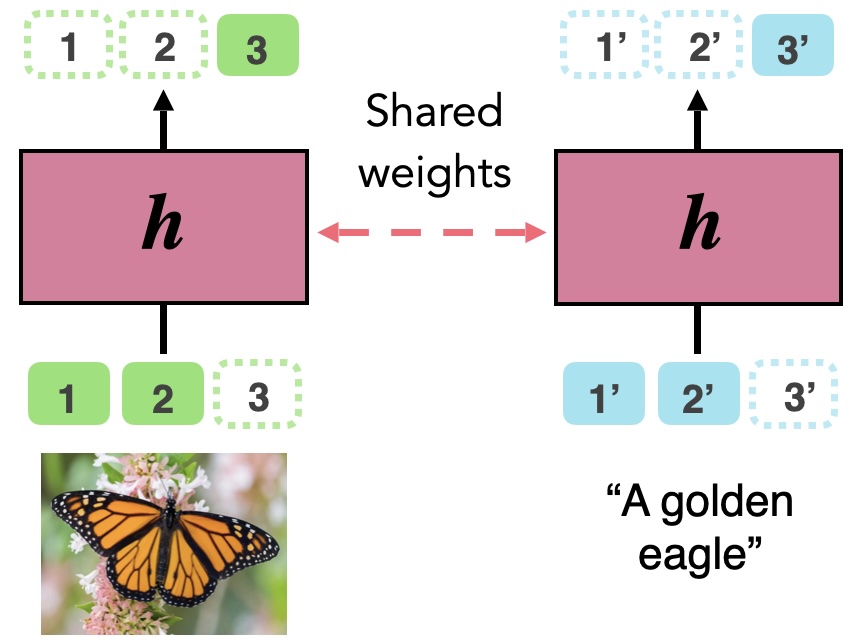

Better Together: Leveraging Unpaired Multimodal Data for Stronger Unimodal Models
Sharut Gupta, Shobhita Sundaram, Chenyu Wang, Stefanie Jegelka, Phillip Isola
arXiv 2025. [Paper][Website][Code]

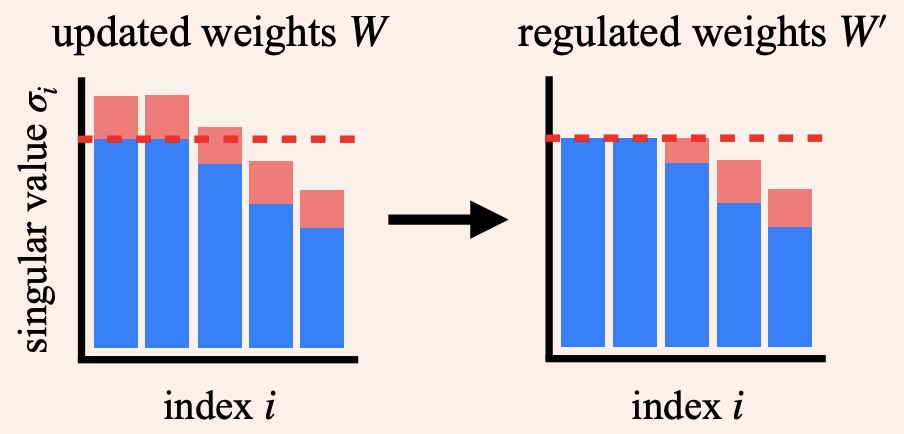

Training Transformers with Enforced Lipschitz Constants
Laker Newhouse, R. Preston Hess, Franz Cesista, Andrii Zahorodnii, Jeremy Bernstein, Phillip Isola
arXiv 2025. [Paper][Code]

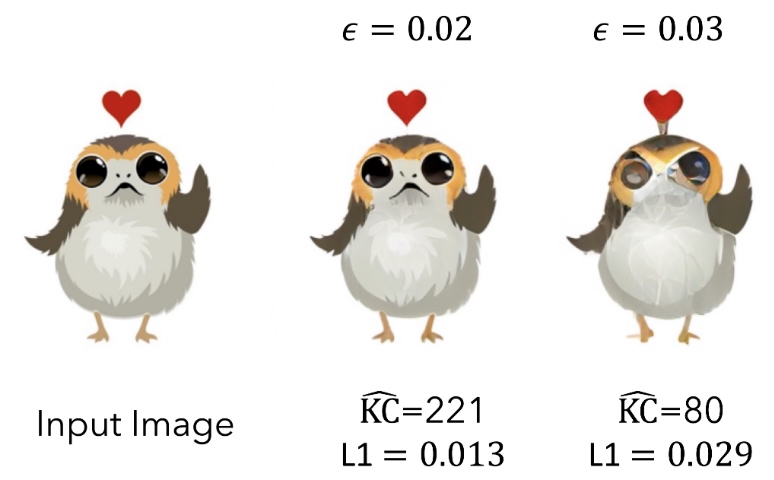

Single-pass Adaptive Image Tokenization for Minimum Program Search
Shivam Duggal, Sanghyun Byun, William T. Freeman, Antonio Torralba, Phillip Isola
NeurIPS 2025. [Paper][Code]

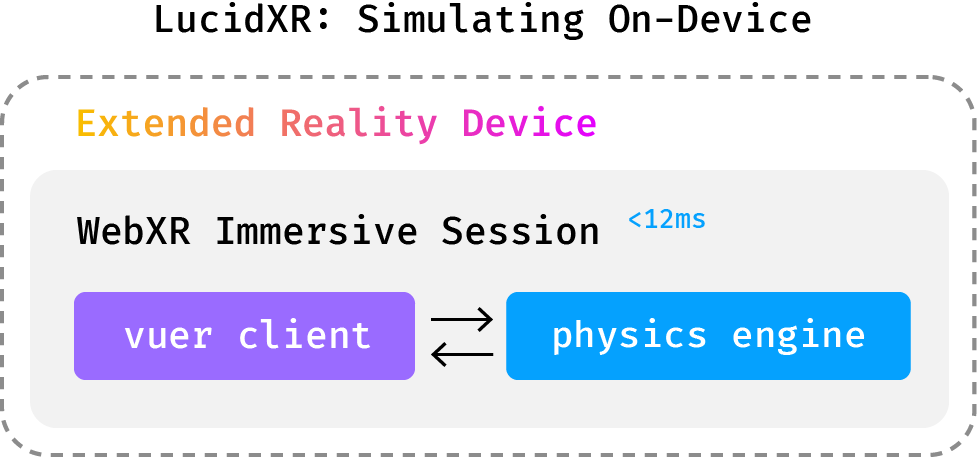

LucidXR: A Framework for Learning Dexterous Manipulation from Human Demonstrations
Yajvan Ravan*, Adam Rashid*, Alan Yu, Kai McClennen, Gio Huh, Kevin Yang, Zhutian Yang, Qinxi Yu, Xiaolong Wang, Phillip Isola, Ge Yang
CoRL 2025. [Paper][Website][Video]

Automating the Search for Artificial Life with Foundation Models
Akarsh Kumar, Chris Lu, Louis Kirsch, Yujin Tang, Kenneth O. Stanley, Phillip Isola, David Ha
Artificial Life 2025. [Paper (web)][Paper (pdf)][Code][Blog]

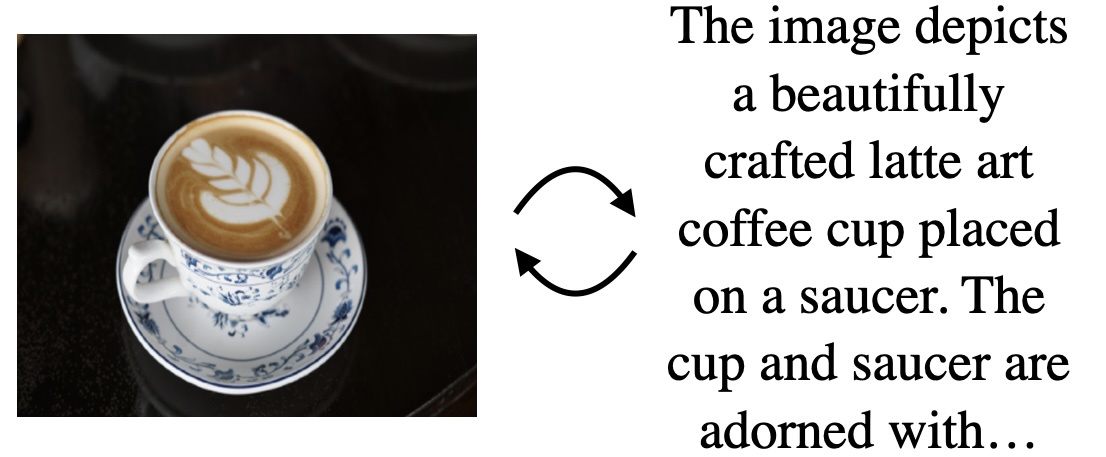

Cycle Consistency as Reward: Learning Image-Text Alignment without Human Preferences
Hyojin Bahng, Caroline Chan, Frédo Durand, Phillip Isola
ICCV 2025. [Paper][Website][Code]

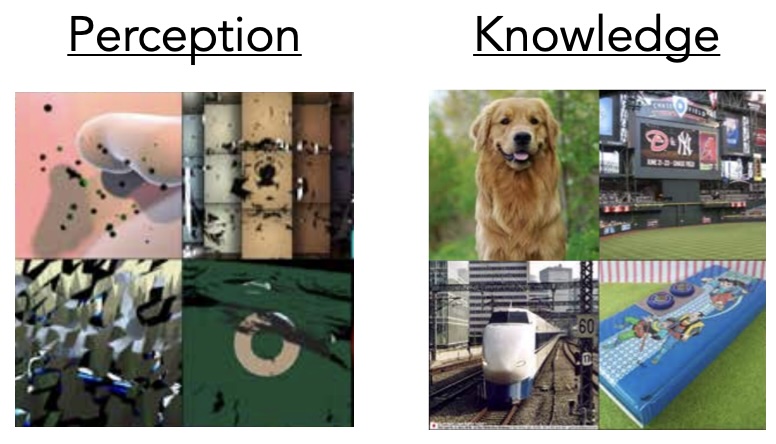

Separating Knowledge and Perception with Procedural Data
Adrián Rodríguez-Muñoz, Manel Baradad, Phillip Isola, Antonio Torralba
ICML 2025. [Paper][Website][Code]

Intrinsically Memorable Words Have Unique Associations With Their Meanings
Greta Tuckute, Kyle Mahowald, Phillip Isola, Aude Oliva, Edward Gibson, Evelina Fedorenko
Journal of Experimental Psychology: General 2025 (Editor's Choice article). [Paper]