Understanding Semantics

Semantics concerns the meaning of words and sentences, which is determined not only by direct definition but also by connotation and context. To give a program an understanding of semantics without relying on an external representation of meaning, one could use an associative approach.

Denotative Meaning

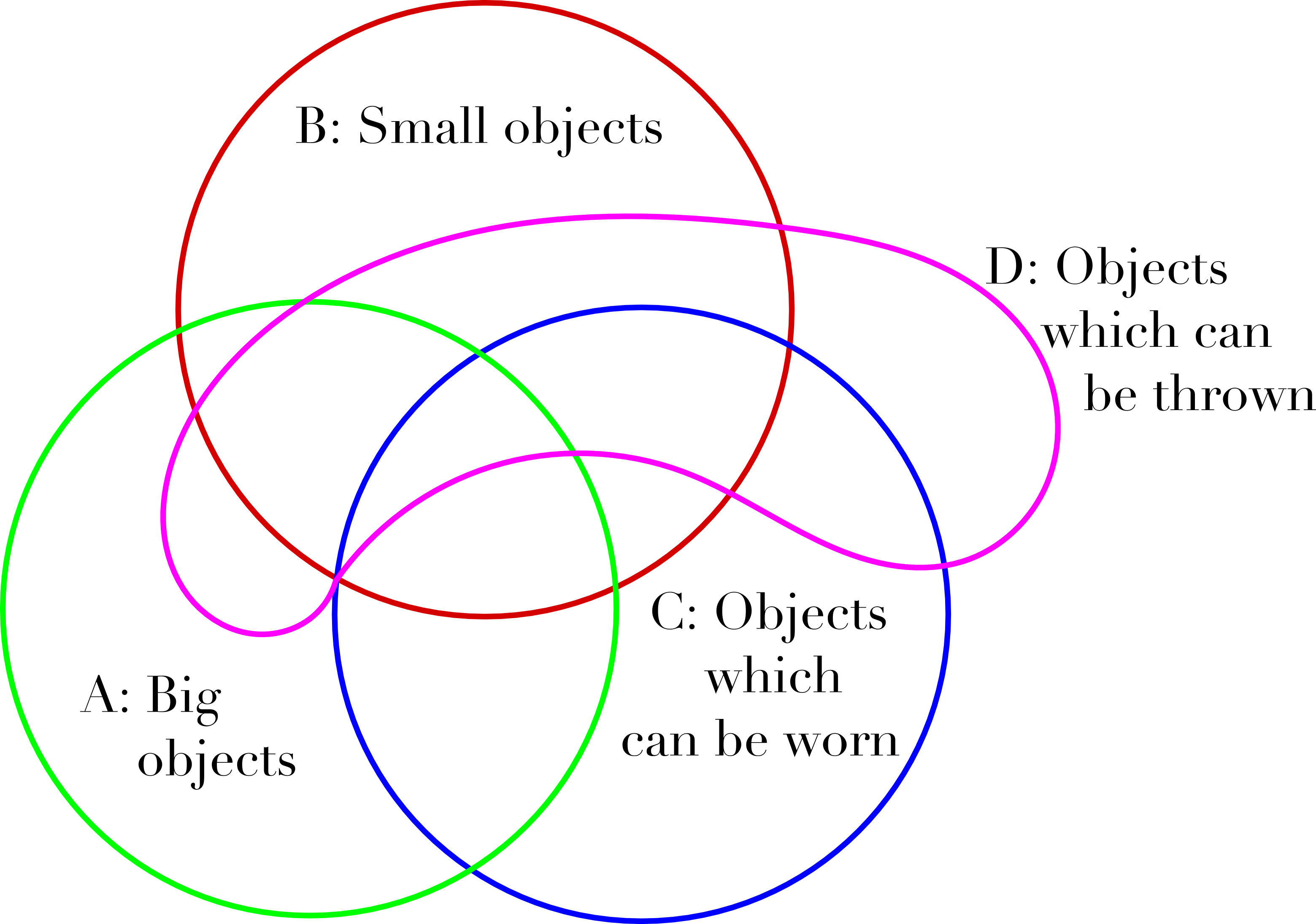

When analyzing denotative meaning, a straightforward associative approach is used. A noun can appear in a sentence with various adjectives and verbs, and the particular set of adjectives and verbs used with that noun is representative of the nature of the object. A second noun will share some of these verbs and adjectives, and the degree of overlap indicates how similar the two objects are. Conversely, a verb would be understood based on the nouns associated with it, especially their order, i.e. the subjects and objects used with that verb. From a set of sentences, a similarity threshold could be determined experimentally, which would result in the categorization of the different nouns and verbs. For example:

1. The man wears the big hat.

2. The man throws the small hat.

("hat" is known to be something that can be "big", "small", "worn", and "thrown")

3. The man throws the big ball.

4. The man bounces the small ball.

("ball" is like "hat" in that it can be "big" or "small" and can be "thrown"; however, it has not yet been found that it can be "worn", and "hat" has not been shown to be able to be "bounced")

5. The man wears the big shoes.

6. The man throws the small shoes.

("shoes" is then understood as the same type of object as "hat")
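
The comparison worked through in the six sentences above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: every sentence is assumed to follow the fixed pattern "The <subject> <verb> the <adjective> <noun>.", the Jaccard index is one possible choice of similarity measure (the text does not name one), and the function names are hypothetical. Verbs are stored in the form in which they appear ("wears", not "worn").

```python
from collections import defaultdict

SENTENCES = [
    "The man wears the big hat.",
    "The man throws the small hat.",
    "The man throws the big ball.",
    "The man bounces the small ball.",
    "The man wears the big shoes.",
    "The man throws the small shoes.",
]

def build_associations(sentences):
    """Map each object noun to the set of verbs and adjectives seen with it."""
    associations = defaultdict(set)
    for sentence in sentences:
        # Assumed fixed pattern: The <subject> <verb> the <adjective> <noun>.
        _, _subject, verb, _, adjective, noun = sentence.rstrip(".").lower().split()
        associations[noun].update({verb, adjective})
    return dict(associations)

def similarity(a, b):
    """Jaccard index: shared associations divided by all associations."""
    return len(a & b) / len(a | b)

associations = build_associations(SENTENCES)
hat_vs_shoes = similarity(associations["hat"], associations["shoes"])
hat_vs_ball = similarity(associations["hat"], associations["ball"])
print(hat_vs_shoes, hat_vs_ball)
```

With these sentences, "hat" and "shoes" share all four associated words, while "hat" and "ball" share three of five. An experimentally chosen threshold between those two values would place "hat" and "shoes" in one category and leave "ball" outside it.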

Using these characteristics of nouns, a complex associative web would be formed, in which an object has meaning based on its relations to a set of other objects, which in turn have meaning through their relations to further objects that the first may not be related to. This web may be represented visually by a Venn diagram similar to the one shown in this section.

For the verbs "wear", "throw", "bounce" etc., the program would interpret the above sentences as suggesting that only "man" can perform these actions and cannot be their recipient, while certain other nouns can be the recipient of these actions. The training sentences do not require a strict order; nouns or verbs would simply be stored until another word was found to have the same characteristic(s). Nevertheless, this method is limited by the need for a large number of sentences in order to gain any significant understanding of a given word.
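
A minimal sketch of how the subject and object roles of each verb might be recorded is given below. It again assumes the fixed sentence pattern of the examples, and the name `learn_verb_roles` is hypothetical. Because each sentence only adds to a set, the order of the training sentences does not affect the result.

```python
from collections import defaultdict

SENTENCES = [
    "The man wears the big hat.",
    "The man throws the small hat.",
    "The man throws the big ball.",
    "The man bounces the small ball.",
    "The man wears the big shoes.",
    "The man throws the small shoes.",
]

def learn_verb_roles(sentences):
    """Record which nouns occur as subject and as object of each verb."""
    roles = defaultdict(lambda: {"subjects": set(), "objects": set()})
    for sentence in sentences:
        words = sentence.rstrip(".").lower().split()
        # Assumed pattern: subject is word 2, verb is word 3, object is last.
        subject, verb, obj = words[1], words[2], words[-1]
        roles[verb]["subjects"].add(subject)
        roles[verb]["objects"].add(obj)
    return dict(roles)

roles = learn_verb_roles(SENTENCES)
# Collect every noun that has been seen as the recipient of any action.
all_objects = set().union(*(r["objects"] for r in roles.values()))
print(roles["throws"], "man" in all_objects)
```

From this small sample the program can only conclude that "man" has never been observed as a recipient, which illustrates why many more sentences are needed before such a conclusion is reliable.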

To understand a concept such as time, the program would require some initial specification, and every sentence referring to the past or future would need to include a time indicator. The program would start from a conditional rule: if a sentence contains certain words, then a time other than the current moment is being referenced, and the verb used is a variant of another verb (the present form, which is the first form the program learns) with a slightly different morphology. However, some words ("last", "next") cannot be used strictly as indicators of time, as they also appear in other contexts. The same applies to negation, as in the sentence "The man is not big": negating words would have to be explained beforehand, and would result in the noun or verb being categorized as different from other nouns and verbs that share its associative terms.
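
The conditional rule described above might look like the following sketch. The word lists are illustrative assumptions and not a complete inventory; "last" and "next" are kept in a separate set because, as noted, they do not reliably indicate time.

```python
# Hypothetical, deliberately tiny word lists supplied as initial specification.
PAST_MARKERS = {"yesterday", "ago"}
FUTURE_MARKERS = {"tomorrow"}
AMBIGUOUS_MARKERS = {"last", "next"}   # may or may not refer to time
NEGATIONS = {"not", "never"}

def analyze(sentence):
    """Classify the time reference of a sentence and detect negation."""
    words = set(sentence.rstrip(".").lower().split())
    if words & PAST_MARKERS:
        time = "past"
    elif words & FUTURE_MARKERS:
        time = "future"
    elif words & AMBIGUOUS_MARKERS:
        time = "uncertain"   # needs more context than a word list provides
    else:
        time = "present"
    return {"time": time, "negated": bool(words & NEGATIONS)}

print(analyze("The man threw the ball yesterday."))
print(analyze("The man is next to the hat."))
print(analyze("The man is not big."))
```

A sentence flagged as negated would then be stored so that "big" counts against, rather than for, the similarity between "man" and other nouns described as "big".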

Context

To allow a computer to have an understanding of context, a system could be implemented to keep track of previously learned information. Cognitive scientist Marvin Minsky suggested that a series of "frames", which represent generalized situations, can be used to represent cognition computationally. These general frames could then be modified with situation-specific variables. For example, if a series of sentences read "John is playing soccer. He kicked the ball," the program would be able to select a general frame with which to keep track of relevant variables, such as "John" being the subject of the action, and so link "John" to the "he" in the next sentence.
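
The soccer example can be illustrated with a toy frame that has a single "actor" slot. This is a loose sketch of the idea, not Minsky's formalism: the `Frame` class, the slot name, and the pronoun list are all assumptions, and the subject is naively taken to be the first word of each sentence.

```python
from dataclasses import dataclass, field

PRONOUNS = {"he", "she", "it", "they"}

@dataclass
class Frame:
    """A generalized situation with slots for situation-specific variables."""
    name: str
    slots: dict = field(default_factory=dict)

def read(sentences):
    """Fill the frame's actor slot and use it to resolve later pronouns."""
    frame = Frame("activity")
    resolved = []
    for sentence in sentences:
        words = sentence.rstrip(".").split()
        subject = words[0]  # assumption: the sentence starts with its subject
        if subject.lower() in PRONOUNS:
            # Replace the pronoun with the remembered actor, if there is one.
            subject = frame.slots.get("actor", subject)
        else:
            frame.slots["actor"] = subject
        resolved.append(" ".join([subject] + words[1:]) + ".")
    return frame, resolved

frame, resolved = read(["John is playing soccer.", "He kicked the ball."])
print(frame.slots, resolved)
```

A fuller system would select among many frames (for example, a "soccer game" frame with slots for players, ball, and goal) rather than using one generic frame.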

Connotation

Connotation and symbolism could prove difficult for a computer to understand. Here, a method similar to the associative approach above could be implemented after the initial denotative associations were formed. The training sentences would use symbolic rather than literal language. If a word is suddenly associated with a word outside its established category, as in "a heart of stone," the computer could interpret this as a connotative association. It could then examine the characteristics that belong to only one of the two words, and so gain an understanding of the symbolic associations of words.
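
One way to picture this step is sketched below. The literal associations for "heart" and "stone" are invented for illustration, the 0.5 threshold is arbitrary, and `interpret` is a hypothetical name. A pairing of two words with little overlap in their literal associations is flagged as connotative, and the characteristics unique to each word are returned for further examination.

```python
# Hypothetical literal associations, as if learned from earlier training.
LITERAL = {
    "heart": {"beats", "pumps", "warm"},
    "stone": {"hard", "cold", "heavy"},
    "hat": {"big", "small", "wears", "throws"},
    "shoes": {"big", "small", "wears", "throws"},
}

def jaccard(a, b):
    """Shared associations divided by all associations."""
    return len(a & b) / len(a | b)

def interpret(first, second, threshold=0.5):
    """Flag a pairing of dissimilar words as a connotative association."""
    a, b = LITERAL[first], LITERAL[second]
    connotative = jaccard(a, b) < threshold
    return {
        "connotative": connotative,
        # Characteristics related to only one word or the other.
        "only_first": a - b if connotative else set(),
        "only_second": b - a if connotative else set(),
    }

print(interpret("heart", "stone"))
print(interpret("hat", "shoes"))
```

Here the characteristics unique to "stone" ("hard", "cold", "heavy") become candidates for what the phrase says about "heart"; deciding which of them actually carry over would require further symbolic training sentences.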