| Vol. XXII No. 3 January / February 2010 |

NRC Doctoral Rankings: The Weighting Game

Rankings of U.S. doctoral programs by the National Research Council (NRC) are widely anticipated. The new ranking approach, which produces ranges of rankings rather than a single ranked list for each discipline, has added a layer of complexity to an already widely discussed project. The long-awaited results are based on data collected for the 2006 academic year. While the timing of the release is, as of this writing, unknown, the NRC recently shared the methodology it is using.

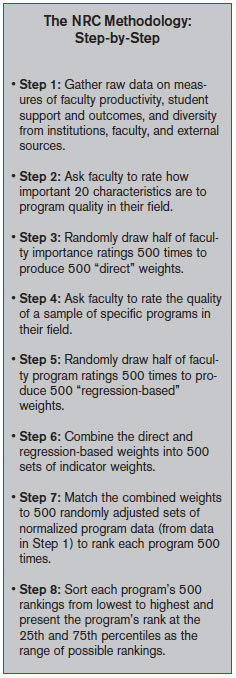

In response to criticisms of previous NRC assessments of PhD programs, the methodology of the current study was refined to rely more heavily on quantitative, objective data and to better reflect the uncertainty associated with measuring program quality. Instead of calculating a single rank per program, the NRC is using a re-sampling statistical technique (similar to a Monte Carlo method) to produce a range of rankings that accounts for statistical error, year-to-year variations in metrics, and the variability of faculty ratings.

The methodology used by the NRC is considerably more complicated than the approach used by other ranking bodies, such as U.S. News & World Report. Though the actual rankings are derived from objective data on 20 program characteristics, the weights applied to these data were developed in two ways from faculty surveys: from faculty members’ direct statements about the relative importance of various attributes, and from weights inferred from faculty members’ ratings of a sample of actual programs.

To gather data on the importance of the 20 indicators, faculty members in each field were asked to rate directly which characteristics are the most important aspects of a quality PhD program. A second set of weights was created as well, using a sample of faculty in each discipline who were asked to rate a sample of specific programs. Statistical techniques were then used to infer the weights that best predicted these stated estimates of program quality.
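The idea of inferring weights from program ratings can be illustrated with a small sketch. It assumes, purely for illustration, that the inference is an ordinary least-squares fit with no intercept and only two indicators; the article does not name the statistical technique the NRC used, and the function name `infer_weights` and the sample numbers are hypothetical.

```python
def infer_weights(indicators, ratings):
    """Least-squares weights (no intercept) for two indicators.

    Assumption: a plain least-squares fit stands in for the unspecified
    "statistical techniques"; this is not the NRC's actual procedure.
    indicators: list of (x1, x2) pairs, one per rated program.
    ratings: list of faculty quality ratings, one per rated program.
    """
    # Build the 2x2 normal equations (X^T X) w = X^T y.
    s11 = sum(x1 * x1 for x1, _ in indicators)
    s12 = sum(x1 * x2 for x1, x2 in indicators)
    s22 = sum(x2 * x2 for _, x2 in indicators)
    b1 = sum(x1 * y for (x1, _), y in zip(indicators, ratings))
    b2 = sum(x2 * y for (_, x2), y in zip(indicators, ratings))

    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("indicators are collinear; weights are not unique")

    # Solve the 2x2 system with Cramer's rule.
    w1 = (b1 * s22 - b2 * s12) / det
    w2 = (s11 * b2 - s12 * b1) / det
    return w1, w2


# Hypothetical data: four rated programs, two indicators each.
sample_indicators = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
sample_ratings = [0.7, 0.3, 1.0, 1.7]
print(infer_weights(sample_indicators, sample_ratings))
```

In this toy data the ratings were constructed as a weighted sum of the two indicators, so the fit recovers those weights; real survey ratings would be noisy and would involve all 20 indicators.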

These two sets of weights were then combined and applied to the program data to prepare the rankings. Finally, statistical re-sampling techniques were used to generate the range of rankings to be published in the final report.
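The combine-and-resample step can be sketched roughly as follows. This is a minimal illustration, not the NRC's procedure: it assumes the two weight sets are simply averaged, that variability is mimicked by perturbing each weight with Gaussian noise, and that the reported range is the best and worst rank seen. The function name `rank_ranges`, its parameters, and the sample data are all hypothetical.

```python
import random


def rank_ranges(programs, direct_w, inferred_w, n_draws=500, noise=0.1, seed=0):
    """Return {program: (best_rank, worst_rank)} over n_draws perturbed rankings.

    programs: {name: [indicator values]}; the weight lists match that length.
    """
    rng = random.Random(seed)
    # Assumption: the two weight sets are combined by a simple average.
    base = [(d + r) / 2 for d, r in zip(direct_w, inferred_w)]
    seen = {name: [] for name in programs}

    for _ in range(n_draws):
        # Assumption: Gaussian perturbation stands in for rater variability.
        w = [b * (1 + rng.gauss(0, noise)) for b in base]
        scores = {
            name: sum(wi * xi for wi, xi in zip(w, values))
            for name, values in programs.items()
        }
        # Rank 1 is the highest weighted score in this draw.
        ordered = sorted(scores, key=scores.get, reverse=True)
        for rank, name in enumerate(ordered, start=1):
            seen[name].append(rank)

    return {name: (min(ranks), max(ranks)) for name, ranks in seen.items()}


# Hypothetical programs scored on two standardized indicators.
sample_programs = {"A": [1.2, 0.4], "B": [0.9, 0.8], "C": [0.2, 0.3]}
print(rank_ranges(sample_programs, direct_w=[0.6, 0.4], inferred_w=[0.5, 0.5]))
```

Programs whose scores are close will trade places across draws and so receive a wide range, while a program that is clearly ahead or behind on every indicator keeps the same rank in every draw.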

As soon as the NRC rankings are released, MIT’s Office of Institutional Research will disseminate the results to departments.

A more detailed presentation on the NRC rankings, including sample tables, can be found here: web.mit.edu/ir/rankings/nrc.html

A Guide to the Methodology of the National Research Council Assessment of Doctorate Programs can be found here: www.nap.edu/catalog.php?record_id=12676.
